
Complex data lakes process thousands of events an hour, and even a minor schema change can trigger major pipeline failures. Medallion architecture helps organize this flow into Bronze, Silver, and Gold layers. But when upstream schemas shift unexpectedly, the model can still break down.

A new field, a type mismatch, or a column rename in the Bronze layer can break Spark jobs, block dashboards, and disrupt machine learning features. One study on data pipeline quality found that incorrect data types, a typical symptom of schema drift, caused 33% of failures, more than any other root cause.

Apache Iceberg helps solve this problem by tracking schema changes, evolving tables without rewrites, and supporting multiple engines from a single format. This blog looks at why Iceberg fits medallion data architecture, how it compares to other formats, and how to test it safely in your stack.

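Medallion architecture is a design pattern that separates lakehouse data into three purposeful tiers: Bronze for raw data as it lands from sources, Silver for cleaned, validated, and conformed data, and Gold for curated, business-ready tables that serve analytics, reporting, and machine learning.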

The pattern gives teams a clear path from ingestion to insight. Each layer builds on the one before it. This enables reprocessing and auditability without compromising final outputs. It creates clarity, enforces separation of concerns, and supports parallel work across ingestion, transformation, and analytics.

But as data systems grow more complex and dynamic, there is a growing need for an agile medallion architecture that adapts to change without breaking downstream workflows.

Upstream data changes often and without warning. A new field appears in an API, a sensor sends a different data type, or a CSV header gets renamed. These changes usually land in the Bronze layer first. If not handled correctly, they can cause type mismatches or missing fields in downstream jobs.
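To make those kinds of change concrete, here is a minimal sketch in plain Python (no Spark or Iceberg involved) that infers a field-to-type map from two batches of JSON-like Bronze records and reports what drifted. The function names and sample fields are hypothetical. Note that a purely name-based comparison cannot recognize a rename: it reports one removed field plus one added field.

```python
from typing import Any


def infer_schema(records: list[dict[str, Any]]) -> dict[str, str]:
    """Map each field name to the Python type name(s) observed across records."""
    seen: dict[str, set[str]] = {}
    for record in records:
        for name, value in record.items():
            seen.setdefault(name, set()).add(type(value).__name__)
    # Mixed types within one batch are joined (e.g. "int|str") so they stay visible.
    return {name: "|".join(sorted(types)) for name, types in seen.items()}


def diff_schemas(old: dict[str, str], new: dict[str, str]) -> dict[str, list]:
    """Compare two inferred schemas by field name only."""
    shared = set(old) & set(new)
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "type_changed": sorted(
            (name, old[name], new[name]) for name in shared if old[name] != new[name]
        ),
    }


if __name__ == "__main__":
    yesterday = [{"device_id": "a1", "temp": 21.5, "ts": 1700000000}]
    # Hypothetical drifted batch: "temp" renamed, "ts" now a string, new "firmware" field.
    today = [{"device_id": "a1", "temp_c": 21.5, "ts": "2024-01-01T00:00:00Z", "firmware": "2.0"}]
    print(diff_schemas(infer_schema(yesterday), infer_schema(today)))
    # {'added': ['firmware', 'temp_c'], 'removed': ['temp'], 'type_changed': [('ts', 'int', 'str')]}
```

A check like this only detects drift; deciding which changes are safe for downstream layers still depends on how the table format resolves columns.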

Because medallion architecture builds one layer on top of another, schema drift in Bronze can affect every downstream stage. That impact often shows up in ways that are hard to detect until something breaks.

In a medallion architecture, each layer depends on the one before it. When Bronze data changes unexpectedly, the issue can carry into Silver, where transformations rely on consistent schemas. Spark jobs might fail if the types no longer align. Gold outputs can return incomplete or incorrect results without obvious signs of failure.

Fixing these issues often requires manual intervention. Pipelines may need to be paused, schemas updated, and data reprocessed to restore consistency. This is because most pipelines are tightly coupled to the structure they expect. Transformations in Silver often reference specific column names and data types. When those assumptions no longer hold, logic breaks and results become unreliable.
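One way to surface those assumptions is to write them down as an explicit contract and check the incoming Bronze schema against it before a Silver transformation runs, so the job fails early with a specific message instead of partway through. The sketch below is a minimal, hypothetical example in plain Python; the contract, column names, and exception class are illustrative, and schemas are represented as simple name-to-type-name mappings.

```python
class SchemaContractError(Exception):
    """Raised when incoming Bronze data breaks what a Silver job depends on."""


# Hypothetical contract: only the columns and types this Silver job actually reads.
SILVER_ORDERS_CONTRACT = {"order_id": "str", "amount": "float", "currency": "str"}


def check_contract(incoming: dict[str, str], contract: dict[str, str]) -> list[str]:
    """Return human-readable violations; extra incoming columns are allowed."""
    problems = []
    for column, expected in contract.items():
        if column not in incoming:
            problems.append(f"missing column {column!r}")
        elif incoming[column] != expected:
            problems.append(f"{column!r}: expected {expected}, got {incoming[column]}")
    return problems


def run_silver_orders(incoming_schema: dict[str, str]) -> None:
    problems = check_contract(incoming_schema, SILVER_ORDERS_CONTRACT)
    if problems:
        # Stop before any transformation runs, naming every broken assumption at once.
        raise SchemaContractError("; ".join(problems))
    # ... the actual Silver transformation would run here ...
```

With this design, an additive change such as a new `coupon` column passes untouched, while a type change on `amount` and a missing `currency` column are reported together in one error.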

Engineers must step in to isolate the root cause, revise parsing logic, and sometimes reload historical data to maintain consistency. These steps take time and coordination. One team might fix schema handling in Bronze while another reruns jobs or repairs dashboards in Gold.

In fast-moving environments, these delays increase cloud costs, push back deadlines, and reduce trust in the data.

Apache Iceberg is an open-source table format for data lakes that separates metadata from data files to improve scalability and consistency. It supports ACID transactions, versioned schema changes, hidden partitioning, and time-travel queries, so you can audit, query, and roll back data with control and clarity.
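Time travel works because every commit produces an immutable snapshot recording which data files make up the table at that moment, and older snapshots stay readable until they are expired. The toy model below (plain Python, not Iceberg's API) shows the two operations mentioned above: reading the table as of a point in time, and rolling the current state back to an earlier snapshot. In Spark SQL, Iceberg exposes the real equivalents as `TIMESTAMP AS OF` / `VERSION AS OF` queries and the `rollback_to_snapshot` procedure; the class and field names here are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    committed_at_ms: int          # commit time, milliseconds since the epoch
    data_files: tuple[str, ...]   # files that make up the table in this snapshot


class TableHistory:
    """Toy snapshot log: commits append; nothing is rewritten or deleted."""

    def __init__(self) -> None:
        self.snapshots: list[Snapshot] = []
        self.current_id: Optional[int] = None

    def commit(self, snapshot: Snapshot) -> None:
        if self.snapshots and snapshot.committed_at_ms < self.snapshots[-1].committed_at_ms:
            raise ValueError("snapshots must be committed in time order")
        self.snapshots.append(snapshot)
        self.current_id = snapshot.snapshot_id

    def as_of(self, ts_ms: int) -> Snapshot:
        """Return the latest snapshot committed at or before ts_ms."""
        times = [s.committed_at_ms for s in self.snapshots]
        i = bisect_right(times, ts_ms)
        if i == 0:
            raise LookupError("no snapshot exists at or before that time")
        return self.snapshots[i - 1]

    def rollback_to(self, snapshot_id: int) -> None:
        """Point the table back at an earlier snapshot; later ones stay in the log."""
        if all(s.snapshot_id != snapshot_id for s in self.snapshots):
            raise KeyError(snapshot_id)
        self.current_id = snapshot_id

    def current(self) -> Snapshot:
        return next(s for s in self.snapshots if s.snapshot_id == self.current_id)


if __name__ == "__main__":
    history = TableHistory()
    history.commit(Snapshot(1, 1_000, ("a.parquet",)))
    history.commit(Snapshot(2, 2_000, ("a.parquet", "b.parquet")))
    history.commit(Snapshot(3, 3_000, ("a.parquet", "b.parquet", "bad.parquet")))
    print(history.as_of(2_500).data_files)  # audit: the table as it looked at t=2500
    history.rollback_to(2)                  # undo the bad load without deleting anything
    print(history.current().data_files)
```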

Since it works across engines like Apache Spark, Flink, Trino, Hive, and Snowflake, Iceberg helps you keep data consistent, even in mixed compute environments. It’s built for scale and reliability in modern lakehouse architecture, especially when your pipelines need to evolve without breaking downstream workflows.

Apache Iceberg reduces the impact of schema drift by isolating schema changes, maintaining version history, and enforcing consistency through metadata. Because it manages schema evolution through metadata rather than file rewrites, teams can absorb upstream changes without triggering downstream failures, and consistency holds across engines and layers.

Choosing a table format shapes how well a medallion lake can absorb change and serve many engines. Below, we highlight where Iceberg, Delta Lake, and Hudi differ in areas that matter most for Bronze, Silver, and Gold layers.

| Capability | Apache Iceberg | Delta Lake | Apache Hudi |
| --- | --- | --- | --- |
| Schema evolution | Full support. Add, rename, drop, and promote columns without rewriting files. Unique column IDs keep queries stable when names change. | Partial support. Adds and simple type changes work, but many operations need table recreation or explicit flags. | Partial support. Adds allowed; drops and renames rely on an experimental “evolution on read” mode. |
| Partition handling | Hidden partition transforms store partition logic in metadata instead of file paths. This lets teams switch from daily to monthly keys without rewriting any data. | Static Hive-style partitions. Changing keys usually means migrating or recreating the table. | Static partitions. Partition keys fixed at creation; altering them triggers full rewrites. |
| Cross-engine support | Read and write from Spark, Flink, Trino, Hive, Snowflake, and cloud services such as Athena and BigQuery. | Spark-centric with growing connectors through delta-rs and Delta Standalone. Best feature coverage inside Databricks. | Spark and Flink for write paths, Hive and Trino readers. Coverage outside these engines is limited. |

All three formats provide ACID transactions and time-travel, but Iceberg offers the most flexible schema evolution, partition agility, and multi-engine reach. These traits make it well-suited for medallion architectures that must handle frequent drift and serve diverse query platforms.

Tip: Teams that use Spark on Databricks can map these Iceberg capabilities to their ETL flows.

Apache Iceberg fits the Medallion model because it treats schema and storage as separate concerns and exposes rich metadata to every engine.

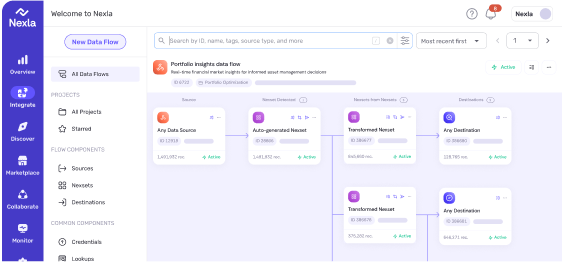

Schema-aware automation tools, such as Nexla, can monitor upstream sources for drift and apply compatible schema changes to Iceberg tables. This pairing keeps all three layers stable while data and requirements evolve.

Medallion architecture is designed for long-lived pipelines and evolving data. To stay reliable, tables must absorb schema changes, serve different query engines, and preserve history across storage systems. Apache Iceberg supports these needs through features that help reduce risk and simplify operations.

APIs and sensors rarely send the same structure for long. Fields appear, types change, and nesting can vary across events. These changes often land in the Bronze layer and might cause downstream Spark jobs to fail if column types or names shift without warning.

Iceberg manages these changes at the metadata level. A new field produces a new schema version, not rewritten files. Because Iceberg tracks columns by ID rather than by name, renames, reorders, and type promotions do not change how existing data files are read, so Silver and Gold queries that don't depend on the changed columns keep working as the schema evolves. This helps teams avoid reprocessing when upstream formats drift.
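Here is a simplified model of the ID-based approach: each data file stores values keyed by a stable field ID, and each schema version is only metadata that maps IDs to current column names. Reading an old file with a newer schema is then a projection by ID, so a rename touches only metadata, and a newly added column reads as null for files written before it existed. Iceberg applies the same principle (it records field IDs in its schema metadata and in the data files it writes), but the classes below are an illustrative sketch, not Iceberg's implementation.

```python
from dataclasses import dataclass


@dataclass
class Schema:
    schema_id: int
    columns: dict[int, str]  # field ID -> current column name


@dataclass
class DataFile:
    rows: tuple[dict[int, object], ...]  # values keyed by field ID, never by name


def read(data_file: DataFile, schema: Schema) -> list[dict[str, object]]:
    """Project a data file onto a (possibly newer) schema by field ID."""
    return [
        # IDs the file never wrote (columns added later) come back as None.
        {name: row.get(field_id) for field_id, name in schema.columns.items()}
        for row in data_file.rows
    ]


if __name__ == "__main__":
    v0 = Schema(0, {1: "device_id", 2: "temp"})
    old_file = DataFile(({1: "a1", 2: 21.5},))
    # v1 renames field 2 and adds field 3; no data file is touched.
    v1 = Schema(1, {1: "device_id", 2: "temp_c", 3: "firmware"})
    print(read(old_file, v1))
    # [{'device_id': 'a1', 'temp_c': 21.5, 'firmware': None}]
```

The same lookup explains why dropping a column and later adding a new one with the same name doesn't bring the old values back: the new column receives a fresh ID, so old files have nothing stored under it.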

Teams often use different engines for different tasks, such as Spark for ETL, Trino for exploration, and Snowflake for reporting. Without a shared table format, this can lead to duplication, version mismatches, or delays in data availability.

Iceberg exposes tables through a consistent catalog interface and stores query-planning metadata that multiple engines can read. As a result, Spark jobs can write once and make data immediately available to Trino or Snowflake without creating copies. This reduces operational overhead and helps maintain consistency across tools.
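Part of what makes write-once, read-anywhere safe is how commits work: an Iceberg catalog tracks, for each table, the location of its current metadata file, and a writer commits by atomically swapping that pointer from the version it started from to the new one. If another writer got there first, the swap fails and the writer retries on top of the newer state, while readers in any engine see either the old version or the new one, never a half-written state. The toy catalog below models that compare-and-swap in plain Python; the class, function names, and metadata paths are hypothetical, and real catalogs (Hive Metastore, REST, Glue, and others) implement the swap in their own ways.

```python
import threading
from typing import Callable, Optional


class ToyCatalog:
    """Maps table names to the location of each table's current metadata file."""

    def __init__(self) -> None:
        self._pointers: dict[str, str] = {}
        self._lock = threading.Lock()

    def current(self, table: str) -> Optional[str]:
        with self._lock:
            return self._pointers.get(table)

    def swap(self, table: str, expected: Optional[str], new: str) -> bool:
        """Atomically point `table` at `new`, but only if it still points at `expected`."""
        with self._lock:
            if self._pointers.get(table) != expected:
                return False
            self._pointers[table] = new
            return True


def commit(catalog: ToyCatalog, table: str,
           write_metadata: Callable[[Optional[str]], str], retries: int = 3) -> str:
    """Optimistic commit: build new metadata on the current base, then swap."""
    for _ in range(retries):
        base = catalog.current(table)
        new_location = write_metadata(base)  # stands in for writing a new metadata file
        if catalog.swap(table, base, new_location):
            return new_location              # readers now resolve the new version
    raise RuntimeError(f"gave up committing to {table} after {retries} attempts")


if __name__ == "__main__":
    catalog = ToyCatalog()
    commit(catalog, "bronze.events", lambda base: "metadata/v1.json")
    # A writer still holding the stale base (None) is rejected instead of overwriting v1.
    print(catalog.swap("bronze.events", None, "metadata/v1-stale.json"))
    commit(catalog, "bronze.events", lambda base: "metadata/v2.json")
    print(catalog.current("bronze.events"))
```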

Data retention policies, especially in regulated industries, require auditability and rollback for years of data. Storage needs and partitioning strategies also change as volumes grow or access patterns shift.

Iceberg supports time-travel queries through versioned snapshots. This allows teams to audit specific states, restore from errors, or validate historical results. Partition specs are stored in metadata and can be updated over time. For example, a table partitioned daily can be redefined to use monthly keys without rewriting files. This flexibility helps maintain long-term reliability while keeping costs and complexity low.
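The daily-to-monthly example can be sketched as follows. Each data file remembers which partition spec it was written with, and queries filter only on the timestamp column; the planner derives partition values from that filter separately for each spec, so old daily files and new monthly files are pruned side by side without any rewrite. The day and month transforms follow the Iceberg spec's definitions (days and months since 1970-01-01); everything else, including the file names and the `plan` function, is a hypothetical simplification of Iceberg's scan planning.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone

EPOCH = date(1970, 1, 1)


def day_transform(ts: datetime) -> int:
    """Days since 1970-01-01 (UTC)."""
    return (ts.astimezone(timezone.utc).date() - EPOCH).days


def month_transform(ts: datetime) -> int:
    """Months since 1970-01 (UTC)."""
    ts = ts.astimezone(timezone.utc)
    return (ts.year - 1970) * 12 + (ts.month - 1)


# Spec 0 was the original daily layout; spec 1 replaced it with monthly keys.
PARTITION_SPECS = {0: day_transform, 1: month_transform}


@dataclass
class DataFile:
    path: str
    spec_id: int     # which partition spec this file was written under
    partition: int   # transformed value, kept in metadata rather than in the path


def plan(files: list[DataFile], start: datetime, end: datetime) -> list[str]:
    """Keep files whose partition can hold rows with start <= ts <= end."""
    selected = []
    for f in files:
        transform = PARTITION_SPECS[f.spec_id]
        # Both transforms are monotonic, so the bounds map directly onto partition values.
        if transform(start) <= f.partition <= transform(end):
            selected.append(f.path)
    return selected


def utc(y: int, m: int, d: int) -> datetime:
    return datetime(y, m, d, tzinfo=timezone.utc)


FILES = [
    DataFile("jan-15.parquet", 0, day_transform(utc(2024, 1, 15))),
    DataFile("jan-31.parquet", 0, day_transform(utc(2024, 1, 31))),
    # Written after the spec changed to monthly; the daily files above were not rewritten.
    DataFile("2024-02.parquet", 1, month_transform(utc(2024, 2, 1))),
    DataFile("2024-03.parquet", 1, month_transform(utc(2024, 3, 1))),
]

if __name__ == "__main__":
    print(plan(FILES, utc(2024, 1, 20), utc(2024, 2, 10)))
    # ['jan-31.parquet', '2024-02.parquet']
```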

Nexla extends Iceberg’s table format with integration and productivity tooling that shortens build time and widens coverage across systems.

These platform capabilities help data teams deliver Medallion pipelines to production quickly. They keep performance consistent as volumes grow and make it easier to demonstrate governance with minimal overhead.

Apache Iceberg reduces fragility in layered lakehouse pipelines by treating schema, metadata, and storage as independent components. It supports gradual adoption, allowing teams to start with a single Bronze dataset and expand without rewriting pipelines or changing storage systems. Because Iceberg is compatible with Spark, Trino, Flink, and Snowflake, it fits into existing workflows while extending flexibility.

Nexla builds on this foundation by introducing automation, validation, and lineage features that align closely with Iceberg’s design. Nexsets track schema versions alongside data payloads, making it possible to roll back changes or audit data at any point in the pipeline. Inline validation blocks non-conforming records before they reach Iceberg tables. Job orchestration manages compute resources efficiently, scaling ingestion and transformation without manual intervention.
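Inline validation of this kind follows a common validate-and-route pattern: each record is checked against a set of rules before the write, conforming records continue to the table, and the rest are set aside with their reasons so they can be inspected and replayed. The sketch below is a generic, plain-Python illustration of that pattern; it is not Nexla's API, and the rule helper and field names are hypothetical.

```python
from typing import Any, Callable, Optional

Record = dict[str, Any]
Rule = Callable[[Record], Optional[str]]  # returns an error message, or None if OK


def require(field_name: str, expected_type: type) -> Rule:
    """Build a rule that checks one field's presence and Python type."""
    def rule(record: Record) -> Optional[str]:
        if field_name not in record:
            return f"missing {field_name}"
        if not isinstance(record[field_name], expected_type):
            return f"{field_name} is not {expected_type.__name__}"
        return None
    return rule


def validate(records: list[Record], rules: list[Rule]) -> tuple[list[Record], list[tuple[Record, list[str]]]]:
    """Split records into accepted ones and quarantined ones with their reasons."""
    accepted, quarantined = [], []
    for record in records:
        errors = [msg for msg in (rule(record) for rule in rules) if msg is not None]
        if errors:
            quarantined.append((record, errors))
        else:
            accepted.append(record)
    return accepted, quarantined


if __name__ == "__main__":
    rules = [require("order_id", str), require("amount", float)]
    batch = [
        {"order_id": "o1", "amount": 9.5},
        {"order_id": "o2", "amount": "9.5"},  # type drift upstream
        {"amount": 1.0},                      # missing key
    ]
    good, held_back = validate(batch, rules)
    print(len(good), [reasons for _, reasons in held_back])
    # 1 [['amount is not float'], ['missing order_id']]
```

Only `good` would be written to the table; `held_back` keeps each rejected record together with every rule it failed.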

Together, this stack can give your data teams fine-grained control without sacrificing speed.

If your organization is working with frequently changing sources, diverse data consumers, and strict governance requirements, the combination of Apache Iceberg and Nexla provides you with a stable and scalable path forward. It can help you improve reliability across the stack without the need for a full replatform.

With a proven blueprint in hand, the next step is to measure real gains in uptime, auditability, and cost.

Move from theory to a working pilot and see how much time and compute your team can save.
