Imagine your team needs to run machine learning pipelines, real-time dashboards, and batch analytics, all on the same data. Yet every engine you use requires duplicating datasets or building custom connectors.

Today’s analytics and AI workloads demand scalable and flexible data infrastructure. How can you achieve this without vendor lock-in or operational complexity? Apache Iceberg, a modern open table format, decouples storage from compute, empowering your team to ingest, transform, and query data across engines without lock-in.

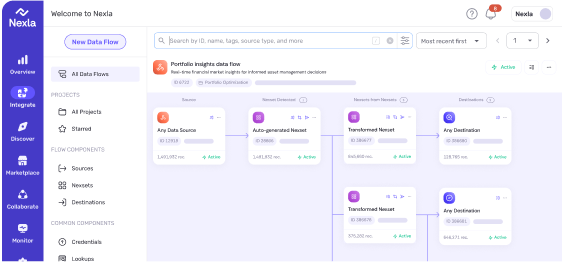

Yet rolling out Iceberg pipelines presents complex challenges. Nexla addresses them by offering instant, no-code Iceberg connectors, Change Data Capture (CDC) flows, schema evolution, and catalog support. This enables the delivery of production-ready lakehouse pipelines at scale and speed.

In this guide, we will explore why Iceberg matters and how to use it effectively with Nexla.

Why Iceberg? Decoupling Storage from Engine

In a traditional data warehouse, storage is tightly coupled to the processing engine. This unified design means scaling one often requires scaling the other, even when it’s not needed. For instance, if you store 10 TB of data in a warehouse, you are also paying for and maintaining the compute resources to process all of it, even during off-peak hours, resulting in unnecessary compute costs.

The data lake addresses this by using commodity object storage (such as Amazon S3) to hold vast amounts of raw data. However, data lakes lack the critical management features of warehouses, which often turns them into unreliable "data swamps": poorly managed, disorganized data lakes.

The data lakehouse closes this gap by combining the scalability and low cost of a data lake with the reliability and performance of a data warehouse. Apache Iceberg is a key enabler of this architecture because it decouples storage from the compute engine.

At its core, Iceberg keeps table metadata separate from data files, enabling multiple engines, such as Apache Spark, Trino, and even Snowflake, to access the same data seamlessly. This separation matters because metadata tasks (like snapshot management and partition pruning) can be performed without scanning the data files themselves, which speeds up query planning, decreases latency, and improves resource use. It also lets organizations choose the best engine for each workload without duplicating datasets or worrying about compatibility issues.
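
To make the idea of planning from metadata concrete, here is a minimal sketch in plain Python. It is not Iceberg's implementation: `DataFileEntry` and `plan_scan` are hypothetical names, and the single `event_date` column range stands in for the per-file statistics a table format can keep in its metadata. The point is only that the planner decides which files to read by looking at small metadata records, never at the data files themselves.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DataFileEntry:
    """Hypothetical, simplified metadata record for one data file."""
    path: str
    min_event_date: date  # lower bound of event_date values in the file
    max_event_date: date  # upper bound of event_date values in the file


def plan_scan(entries, start, end):
    """Return paths of files whose [min, max] range overlaps [start, end]."""
    return [
        e.path
        for e in entries
        if e.max_event_date >= start and e.min_event_date <= end
    ]


# Illustrative metadata for three monthly files (paths are made up).
manifest = [
    DataFileEntry("data/f1.parquet", date(2024, 1, 1), date(2024, 1, 31)),
    DataFileEntry("data/f2.parquet", date(2024, 2, 1), date(2024, 2, 29)),
    DataFileEntry("data/f3.parquet", date(2024, 3, 1), date(2024, 3, 31)),
]

# A query for mid-February only needs the February file; the other two
# are skipped without being opened.
files_to_read = plan_scan(manifest, date(2024, 2, 10), date(2024, 2, 20))
print(files_to_read)
```

Because the decision uses only the metadata records, the cost of planning grows with the number of files, not with the volume of data inside them.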

This decoupling delivers several key benefits, including:

- Vendor Flexibility and Future-Proofing: With Iceberg, you are no longer locked into a single vendor’s ecosystem. You can switch compute engines as your needs change, or pricing models shift, all without undertaking a large data migration project. Your data assets stay independent and future-ready.

- Performance Gains: Iceberg’s hidden partitioning and metadata pruning capabilities accelerate queries by eliminating unnecessary file scans, leading to lower compute costs.

- Guaranteed Data Consistency (ACID Compliance): Iceberg brings atomic, consistent, isolated, and durable (ACID) transactions to the data lake. Every change to an Iceberg table is an atomic commit, whether a single-row insert or a massive batch update. This is achieved through optimistic concurrency, where changes create a new table metadata “snapshot.” A central catalog atomically swaps a pointer to make changes visible instantly to all readers. This prevents data corruption and consistency issues commonly found in older formats, such as Hive.
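
The optimistic-concurrency commit described in the last bullet can be sketched as a compare-and-swap on a single pointer. This is an illustrative model only: `InMemoryCatalog`, `CommitConflict`, `commit`, and the `vN.metadata.json` naming are assumptions made for the example, not a real catalog API.

```python
import threading


class CommitConflict(Exception):
    """Raised when another writer committed first."""


class InMemoryCatalog:
    """Hypothetical catalog holding one pointer to the current table metadata."""

    def __init__(self, initial_metadata):
        self._current = initial_metadata
        self._lock = threading.Lock()

    def current(self):
        return self._current

    def swap(self, expected, new):
        # Atomic compare-and-swap: succeed only if nobody else has committed
        # since this writer read `expected`.
        with self._lock:
            if self._current != expected:
                raise CommitConflict(f"expected {expected}, found {self._current}")
            self._current = new


def commit(catalog, make_new_metadata, max_retries=3):
    """Optimistic commit: read the base, build new metadata, swap, retry on conflict."""
    for _ in range(max_retries):
        base = catalog.current()
        new = make_new_metadata(base)
        try:
            catalog.swap(base, new)
            return new
        except CommitConflict:
            continue  # another writer won; rebuild on top of the new base
    raise CommitConflict("gave up after retries")


def next_version(base):
    """Illustrative only: derive the next metadata file name from the current one."""
    n = int(base.split(".")[0][1:])
    return f"v{n + 1}.metadata.json"


catalog = InMemoryCatalog("v1.metadata.json")
commit(catalog, next_version)
print(catalog.current())
```

Readers always follow the pointer to one complete metadata version, so they see either the table before the commit or after it, never a half-applied change.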

A powerful real-world example is Salesforce. They use Apache Iceberg to manage over 4 million tables and 50 PB of data. This enables their data cloud to deliver multi-engine analytics at scale without sacrificing performance or governance.

How to Use Iceberg: Picking the Right Compute Engine

If Iceberg plays nicely with all these different engines, how do you decide which one to use? The trick is to think about the job you need to do. All your tools can point to the same source of truth using a single Iceberg table. Each engine has unique strengths depending on the nature of your data workloads, whether it’s batch processing, interactive analytics, or real-time streaming. Below are some of the common engine types and where they fit best:

- Batch Analytics (ELT/ETL): When it comes to large-scale data transformations and processing, Apache Spark remains the de facto standard and preferred engine. Spark’s distributed computation model is perfect for processing terabytes of data in batch jobs, such as nightly reporting or building machine learning features.

- Interactive SQL: When business analysts and data scientists need to run fast, ad-hoc queries to explore data, a high-performance distributed SQL engine like Trino (formerly PrestoSQL) or even Snowflake is ideal. These engines are optimized for low-latency queries and can provide a familiar SQL interface directly on top of your Iceberg tables.

- Streaming and CDC: Real-time data ingestion and CDC are crucial for operational analytics, pipeline monitoring, and near-real-time machine learning (ML) capabilities. Here, Apache Flink and Spark Structured Streaming stand out. These engines continuously process incoming streams and write to Iceberg tables in micro-batches or streaming mode.

Choosing and combining these engines can often require considerable engineering effort, but Nexla simplifies much of this complexity. Instead of setting up each engine manually, it provides a unified control plane. Using FlexFlows, Nexla can intelligently direct data through the best engine for the workload. FlexFlows is a low-code, Kafka-based pipeline type designed for high-throughput streaming and batch data ingestion, transformation, branching, and multi-destination delivery, all without manual engine setup.

For heavy transformations, Nexla can automatically generate and manage a Spark ETL flow; for real-time ingestion, it uses its streaming capabilities. This helps you to switch compute logic smoothly while the underlying Iceberg table stays a stable, reliable endpoint.

CDC into Iceberg: The Foundation of a Real-Time Lakehouse

Change data capture identifies and captures the changes made in a source database, such as inserts, updates, and deletes, and delivers them to a downstream system in real time. It’s a critical pattern for keeping analytics systems synchronized with operational data.

Iceberg’s architecture, with its atomic snapshots and ACID transactions, is perfectly suited for handling CDC data streams. There are several patterns for applying CDC changes to a table:

- Append-Only (Change-Log): Every change event (insert, update, delete) is appended as a new row. This is simple but requires downstream consumers to reconstruct the current state of a record.

- Copy-on-Write (CoW): When an update or delete arrives, the data file containing the old record is read, the change is applied, and a new, updated data file is written. This makes reads very fast, as queries always see the latest state, but can be write-intensive.

- Merge-on-Read (MoR): Inserts are written to new data files, while updates and deletes are written to separate “delta” files (Iceberg calls these delete files). When the table is queried, the engine merges the base files and delta files on the fly to produce the current state of the table. This optimizes for fast writes but can add latency to reads.
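
The three patterns above can be contrasted with a small in-memory sketch. Dictionaries stand in for data files and lists for change logs; the function names and the event shape (`op`, `id`, `row`) are assumptions made for illustration and do not correspond to any Iceberg or Nexla API.

```python
def replay(events):
    """Rebuild current rows from an ordered list of change events."""
    state = {}
    for ev in events:
        if ev["op"] == "delete":
            state.pop(ev["id"], None)
        else:  # insert or update
            state[ev["id"]] = ev["row"]
    return state


def apply_copy_on_write(data_file, event):
    """CoW: produce a rewritten copy of the file with the change applied."""
    new_file = dict(data_file)  # the old file is left untouched
    if event["op"] == "delete":
        new_file.pop(event["id"], None)
    else:
        new_file[event["id"]] = event["row"]
    return new_file


def read_merge_on_read(base_file, deltas):
    """MoR read path: merge the base rows with recorded changes at query time."""
    base_events = [{"op": "insert", "id": k, "row": v} for k, v in base_file.items()]
    return replay(base_events + deltas)


base = {1: {"name": "a"}, 2: {"name": "b"}}
changes = [
    {"op": "update", "id": 1, "row": {"name": "a2"}},
    {"op": "delete", "id": 2, "row": None},
]

# Append-only: every event is kept; the consumer replays the log to get state.
log = [{"op": "insert", "id": k, "row": v} for k, v in base.items()] + changes
append_only_state = replay(log)

# Copy-on-write: each change rewrites the file, so reads need no merging.
cow_file = base
for ev in changes:
    cow_file = apply_copy_on_write(cow_file, ev)

# Merge-on-read: writes only record the change; the merge happens on read.
deltas = list(changes)
mor_state = read_merge_on_read(base, deltas)

print(append_only_state, cow_file, mor_state)
```

All three end in the same logical table state; what differs is where the work happens: in the consumer (append-only), at write time (CoW), or at read time (MoR).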

Manually implementing these patterns is complex and error-prone. Nexla’s CDC support for Iceberg automates this process. Using its database CDC connectors or Kafka integration, Nexla can:

- Stream changes from sources such as PostgreSQL, MySQL, or Microsoft SQL Server.

- Automatically handle schema evolution. If a new column is added to the source table, Nexla detects it and seamlessly updates the target Iceberg table without requiring manual intervention or causing pipeline failure.

- Validate, cleanse, and monitor the incoming data stream to ensure data quality.

- Manage the merge logic (CoW or MoR) based on your performance requirements.

- Trigger auto-compaction and optimization. Nexla can automatically run maintenance jobs to compact small data files and prune old snapshots, ensuring the table remains performant over time.
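
As a rough illustration of the compaction step in the last bullet, the sketch below groups small files into rewrite batches with a simple first-fit-decreasing bin-packing pass. The function name `plan_compaction` and the size thresholds are illustrative assumptions, not defaults from Iceberg or Nexla, and a real maintenance job would also handle partitions, delete files, and snapshot expiration.

```python
def plan_compaction(file_sizes_mb, target_mb=256, small_threshold_mb=128):
    """Group small files into bins of at most target_mb (first-fit decreasing)."""
    small = sorted(
        (s for s in file_sizes_mb if s < small_threshold_mb), reverse=True
    )
    bins = []
    for size in small:
        for b in bins:
            if sum(b) + size <= target_mb:
                b.append(size)
                break
        else:
            bins.append([size])  # no existing bin had room; start a new one
    # Rewriting a bin that holds a single file would gain nothing.
    return [b for b in bins if len(b) > 1]


# One large file (left alone) and five small ones, sizes in MB.
sizes = [600, 100, 90, 80, 60, 5]
plan = plan_compaction(sizes)
print(plan)
```

Each inner list is a set of small files that would be rewritten together into one larger file, which reduces the number of files a query has to open.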

Iceberg as a Source for Downstream Systems

A modern data architecture requires bi-directional data flow. Nexla turns Iceberg into a true two-way data hub, rather than merely a destination. It automates the full lifecycle, enabling ingestion of data from any source, including APIs, databases, and streaming platforms, into managed Iceberg tables.

At the same time, Nexla simplifies the use of Iceberg as a source. It lets your teams package up data from Iceberg tables and send it to any downstream application or system, such as customer-facing dashboards, machine learning pipelines, or operational databases. It establishes a continuous, automated loop of data movement by providing universal connectivity for both ingress and egress.

This transforms the lakehouse into a dynamic, self-service platform. Data moves freely in both directions, supporting everything from analytics to operational workflows without the usual engineering overhead.

Conclusion

Apache Iceberg is transforming data architecture with its flexible, decoupled storage and compute model, enabling teams to select suitable processing engines for each workload while maintaining a single, consistent data source. With features such as ACID transactions, schema evolution, and changelog views, Iceberg is favored for scalable data lakehouse systems.

Deploying Iceberg at scale can be operationally complex, but using the right platform, like Nexla, makes a significant difference. By combining Iceberg’s open table format with Nexla’s no-code data product platform, teams can:

- Seamlessly ingest batch and streaming data into Iceberg tables using the optimal compute engines.

- Leverage CDC pipelines for real-time updates with automated compaction, schema validation, and monitoring.

- Use Iceberg both as a sink and a source, powering downstream systems with live, incremental data.

If you’re ready to deploy and manage Iceberg with minimal operational overhead, request a Nexla demo today.