Why Context Engineering Is Key to the Next Era of Enterprise AI

In the News: betanews.com: In this Q&A, Saket Saurabh explains why context engineering is key to reliable, compliant, and intelligent enterprise AI workflows.

When a support chatbot quotes an outdated refund policy, the problem is not a model flaw but a context flaw: the system acted on stale, fragmented information.

According to recent AI adoption surveys, many organizations use AI, yet only a small minority run it at scale with measurable impact. The gap is not about model “intelligence” alone; it is about the quality and governance of the information we feed those models.

To close this gap, organizations need a dedicated discipline. Context engineering is the systematic practice of designing and controlling the information an AI model consumes at runtime so that its outputs are accurate, auditable, and safe.

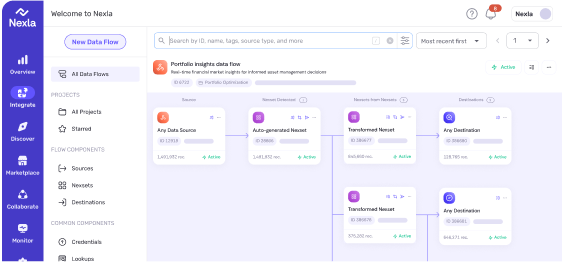

This article explains why context collapses in enterprises, describes the three data pillars that prevent it, outlines a minimal workflow, and shows how Nexla provides the foundation.

When an AI model generates a response, it’s synthesizing everything in its “context window”: the instructions, the conversation history, and any external information you provide. If that context is flawed, the output will be too.
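To make the refund-policy example concrete, here is a minimal Python sketch of context assembly. It assumes a hypothetical `PolicyDoc` record with an effective date. The sketch keeps only the newest version of each policy that is already in effect, so a superseded policy never reaches the window. All names and fields are illustrative and do not come from any specific product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyDoc:
    # Hypothetical document record: policy name, text, and the date it took effect.
    name: str
    text: str
    effective: date


def current_versions(docs: list[PolicyDoc], today: date) -> list[PolicyDoc]:
    """Keep only the latest version of each policy that is already in effect."""
    latest: dict[str, PolicyDoc] = {}
    for doc in docs:
        if doc.effective > today:
            continue  # scheduled for the future, not yet in effect
        kept = latest.get(doc.name)
        if kept is None or doc.effective > kept.effective:
            latest[doc.name] = doc
    return list(latest.values())


def assemble_context(instructions: str, history: list[str],
                     docs: list[PolicyDoc], today: date) -> str:
    """Concatenate instructions, conversation history, and current external sources."""
    parts = [f"INSTRUCTIONS:\n{instructions}", "HISTORY:\n" + "\n".join(history)]
    for doc in current_versions(docs, today):
        parts.append(f"SOURCE {doc.name} (effective {doc.effective.isoformat()}):\n{doc.text}")
    return "\n\n".join(parts)


docs = [
    PolicyDoc("refund-policy", "Refunds within 60 days.", date(2022, 1, 1)),
    PolicyDoc("refund-policy", "Refunds within 30 days.", date(2024, 3, 1)),
    PolicyDoc("refund-policy", "Refunds within 14 days.", date(2025, 1, 1)),
]
context = assemble_context("Answer using the sources only.",
                           ["User: What is the refund window?"], docs, date(2024, 6, 1))
print(context)
```

The model receives only the 30-day policy. Without `current_versions`, all three versions would land in the window, and the model would have to guess which one applies.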

Without a solid data foundation, context engineering fails in three predictable ways:

Recent industry surveys indicate that many enterprises have already absorbed financial losses from such failures. The solution is not just better prompts; it’s better data.

Context engineering is the discipline of designing and controlling the information an AI model consumes at inference time. It strategically assembles:

The goal is to craft a precise, relevant context for accurate AI outputs. However, this process assumes a critical foundation: the underlying data must be reliable, consistent, and governed. Without this, even sophisticated context engineering fails.

Beyond data quality and governance, context engineering must operate across a spectrum of data types. Enterprises rarely rely on structured tables alone: policies live in documents, customer feedback appears in text or voice transcripts, and operational evidence may include images or video feeds.

The ability to retrieve, normalize, and align these heterogeneous data sources under shared metadata and lineage controls is what differentiates a scalable AI foundation from an isolated proof of concept.

Whether data arrives as a JSON record or a scanned contract, it must inherit the same governance guarantees before entering the model’s context window.
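One way to give every modality the same guarantees is to wrap each item in a shared metadata envelope and check it at a single gate before anything enters the context window. The sketch below assumes three hypothetical required fields (`source`, `lineage_id`, `classification`). A real deployment would use whatever metadata its own catalog defines.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical metadata every item must carry, whatever its modality.
REQUIRED_METADATA = ("source", "lineage_id", "classification")


@dataclass
class GovernedItem:
    modality: str                # e.g. "json", "pdf_scan", "transcript", "image"
    payload: Any                 # raw record, extracted text, etc.
    metadata: dict[str, str] = field(default_factory=dict)


def admit(item: GovernedItem) -> bool:
    """One gate for all modalities: any missing or empty required field blocks admission."""
    return all(item.metadata.get(key) for key in REQUIRED_METADATA)


record = GovernedItem("json", {"order_id": 17, "total": 42.0},
                      {"source": "orders_db", "lineage_id": "ln-001",
                       "classification": "internal"})
contract = GovernedItem("pdf_scan", "Term: 24 months.", {"source": "contracts_share"})

print([admit(record), admit(contract)])  # [True, False]
```

The scanned contract is rejected because it lacks lineage and classification, not because of its format. The gate itself never inspects the modality.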

For context engineering to work, the “knowledge” it retrieves must be built on a foundation of governed data. This requires three core capabilities:

The first pillar is provenance and lineage. For every piece of data an AI uses, you must be able to answer: Where did this come from? What transformations were applied? Who approved it?

Lineage creates an end-to-end audit trail from source to consumption. When an AI cites a revenue number, lineage lets you trace it back to the original transaction in minutes, not days. This traceability is non-negotiable for transparency, as emphasized in frameworks such as the NIST AI Risk Management Framework.
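A lineage record only needs to answer the three questions above. This minimal sketch models each dataset as a hypothetical `LineageNode` that records its source, the transformation that produced it, its approver, and its upstream parents. It then walks back from a reported revenue figure to its raw inputs and flags anything nobody signed off on. The dataset names are illustrative, and production lineage systems track considerably more.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LineageNode:
    name: str
    source: str                    # where the data came from
    transformation: str            # what was applied to produce it
    approved_by: Optional[str]     # who signed off (None = nobody yet)
    parents: list["LineageNode"] = field(default_factory=list)


def trace(node: LineageNode, depth: int = 0) -> list[str]:
    """Walk upstream from a node depth-first, one indented line per hop."""
    approval = f"approved by {node.approved_by}" if node.approved_by else "NOT APPROVED"
    lines = [f"{'  ' * depth}{node.name} <- {node.source} [{node.transformation}; {approval}]"]
    for parent in node.parents:
        lines.extend(trace(parent, depth + 1))
    return lines


def unapproved(node: LineageNode) -> list[str]:
    """Names of every node in the upstream graph that nobody has approved."""
    missing = [] if node.approved_by else [node.name]
    for parent in node.parents:
        missing.extend(unapproved(parent))
    return missing


txn = LineageNode("txn_2024_q2", "erp.transactions", "raw extract", "data-steward")
fx = LineageNode("fx_rates", "treasury_feed", "daily snapshot", None)
revenue = LineageNode("q2_revenue", "finance_mart", "sum(amount * rate)", "controller",
                      parents=[txn, fx])

print("\n".join(trace(revenue)))
print(unapproved(revenue))  # ['fx_rates']
```

The trace shows that the revenue figure depends on an exchange-rate snapshot nobody approved. That is exactly the kind of gap an auditor would ask about.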

The second pillar is entity resolution. Identity drift is a constant in enterprise systems: customers change emails, products get new SKUs, and suppliers are re-keyed. Entity resolution creates a single, authoritative view of each business entity (customer, product, supplier), using canonical keys and survivorship rules to unify scattered identifiers. When your AI looks up a “customer,” it gets a complete, unified profile rather than a fragmented, contradictory mess.
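Here is a minimal sketch of that idea. It rests on two hypothetical rules:

- **Matching rule:** records belong to the same customer if they share an email address, or if they share a customer ID within the same source system.
- **Survivorship rule:** for each field, the most recently updated non-empty value wins.

Records are grouped with a union-find structure. The canonical-key rule (the smallest source ID in each cluster) is an illustrative assumption. Real entity resolution usually adds fuzzy matching and per-field policies.

```python
from dataclasses import dataclass


@dataclass
class SourceRecord:
    system: str
    customer_id: str
    email: str
    name: str
    phone: str
    updated: int  # e.g. a Unix timestamp; larger means newer


def resolve(records: list[SourceRecord]) -> list[dict[str, str]]:
    """Cluster records that share an email or (system, ID), then build one profile each."""
    parent = list(range(len(records)))  # union-find forest over record indices

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    def union(a: int, b: int) -> None:
        parent[find(a)] = find(b)

    first_seen: dict[str, int] = {}
    for i, rec in enumerate(records):
        keys = []  # hypothetical matching keys; empty values never match
        if rec.email.strip():
            keys.append("email:" + rec.email.strip().lower())
        if rec.customer_id:
            keys.append(f"id:{rec.system}:{rec.customer_id}")
        for key in keys:
            if key in first_seen:
                union(i, first_seen[key])
            else:
                first_seen[key] = i

    clusters: dict[int, list[SourceRecord]] = {}
    for i, rec in enumerate(records):
        clusters.setdefault(find(i), []).append(rec)

    profiles = []
    for members in clusters.values():
        newest_first = sorted(members, key=lambda r: r.updated, reverse=True)
        ids = sorted({f"{r.system}:{r.customer_id}" for r in members})
        # Hypothetical canonical key rule: the smallest source ID in the cluster.
        profile = {"canonical_key": ids[0], "source_ids": ",".join(ids)}
        for fld in ("email", "name", "phone"):
            # Survivorship: the newest non-empty value wins for each field.
            profile[fld] = next((getattr(r, fld) for r in newest_first if getattr(r, fld)), "")
        profiles.append(profile)
    return profiles


records = [
    SourceRecord("crm", "C-100", "ana@old.example", "Ana Lima", "555-0101", 1),
    SourceRecord("billing", "B-7", "ana@old.example", "Ana Lima", "", 2),
    SourceRecord("billing", "B-7", "ana@new.example", "Ana L. Lima", "", 3),
    SourceRecord("crm", "C-200", "raj@example.com", "Raj Patel", "555-0199", 1),
]
for profile in resolve(records):
    print(profile)
```

Ana's three records collapse into one profile. She is linked first by her old email and then by her billing ID. The profile carries her newest email and name, and it keeps the only phone number on file.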

The third pillar is domain constraints. Encode business rules directly in the data flow: valid ranges, schema relationships, and policy rules such as PII masking and role-based access control (RBAC). Validate data during ingestion, transformation, and publication, and quarantine violations before they reach the model. Catching bad data early keeps nonsensical inputs out of the context window, which reduces the risk of hallucinations (confident but incorrect outputs), and it simplifies compliance audits.
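A minimal sketch of constraint enforcement at one stage rests on three hypothetical rules:

- Discounts must fall between 0 and 100 percent.
- Currency must come from an allowed set.
- Email addresses are masked unless the caller's role may see PII.

Rows that break a rule are quarantined with a reason instead of being published. The same `enforce` gate could, in principle, run at ingestion, transformation, and publication alike.

```python
import re
from dataclasses import dataclass, field

ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}   # hypothetical business rule
PII_ROLES = {"compliance"}                   # hypothetical RBAC rule
EMAIL = re.compile(r"[^@\s]+@[^@\s]+")       # deliberately simple email pattern


@dataclass
class Batch:
    published: list[dict] = field(default_factory=list)
    quarantined: list[tuple[dict, str]] = field(default_factory=list)


def violations(row: dict) -> list[str]:
    """Return every rule the row breaks (an empty list means the row is valid)."""
    problems = []
    discount = row.get("discount_pct")
    if not isinstance(discount, (int, float)) or not 0 <= discount <= 100:
        problems.append("discount_pct out of range [0, 100]")
    if row.get("currency") not in ALLOWED_CURRENCIES:
        problems.append(f"unknown currency {row.get('currency')!r}")
    return problems


def mask_pii(row: dict, role: str) -> dict:
    """Mask email-like strings unless the role is allowed to see PII."""
    if role in PII_ROLES:
        return dict(row)
    return {k: EMAIL.sub("***@***", v) if isinstance(v, str) else v for k, v in row.items()}


def enforce(rows: list[dict], role: str) -> Batch:
    batch = Batch()
    for row in rows:
        problems = violations(row)
        if problems:
            batch.quarantined.append((row, "; ".join(problems)))
        else:
            batch.published.append(mask_pii(row, role))
    return batch


rows = [
    {"order": 1, "discount_pct": 15, "currency": "USD", "contact": "ana@example.com"},
    {"order": 2, "discount_pct": 140, "currency": "USD", "contact": "raj@example.com"},
    {"order": 3, "discount_pct": 5, "currency": "XYZ", "contact": "li@example.com"},
]
result = enforce(rows, role="support_agent")
print(result.published)    # order 1 only, with the email masked
print(result.quarantined)  # orders 2 and 3, each with a reason
```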

These pillars create the foundation for reliable context engineering, ensuring AI outputs are accurate, trustworthy, and compliant.

Building trustworthy AI is a sequential process. You can’t fix data at the context layer. Here is a practical workflow:

As AI systems move from development to deployment, the challenge shifts from building the workflow to keeping it alive. Context engineering must evolve from a static, batch process into a dynamic, continuously updated system that adapts as new information arrives. In production environments, AI decisions often depend on data that changes by the minute: inventory levels, sensor streams, and customer actions. Real-time context engineering ensures that retrieval and assembly pipelines are event-driven, updating context whenever the underlying facts change.
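The sketch below illustrates the event-driven pattern with an in-memory store. It assumes a hypothetical change-event shape with `key`, `value`, and a per-key `version` that increases at the source. The store works in three steps:

1. An event overwrites a fact only if it is newer than what the store holds, so a late or duplicate event cannot reintroduce stale data.
2. Listeners are notified of every accepted change, for example to refresh cached context.
3. Context is read at request time instead of from a batch snapshot.

A production system would consume events from a stream or change-data-capture feed rather than a list.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ChangeEvent:
    key: str       # e.g. "inventory:SKU-123"
    value: str
    version: int   # assumed to increase monotonically per key at the source


class LiveContextStore:
    def __init__(self) -> None:
        self._facts: dict[str, ChangeEvent] = {}
        self._listeners: list[Callable[[ChangeEvent], None]] = []

    def subscribe(self, listener: Callable[[ChangeEvent], None]) -> None:
        """Register a callback, e.g. to invalidate cached prompts for a key."""
        self._listeners.append(listener)

    def apply(self, event: ChangeEvent) -> bool:
        """Accept an event only if it is newer than the stored fact, then notify."""
        current = self._facts.get(event.key)
        if current is not None and current.version >= event.version:
            return False  # stale or duplicate event: ignore it
        self._facts[event.key] = event
        for listener in self._listeners:
            listener(event)
        return True

    def context_for(self, keys: list[str]) -> str:
        """Build a context snippet from whatever is current at request time."""
        return "\n".join(f"{k} = {self._facts[k].value} (v{self._facts[k].version})"
                         for k in keys if k in self._facts)


store = LiveContextStore()
changed: list[str] = []
store.subscribe(lambda e: changed.append(e.key))

for event in [ChangeEvent("inventory:SKU-123", "40 units", 1),
              ChangeEvent("inventory:SKU-123", "12 units", 3),
              ChangeEvent("inventory:SKU-123", "35 units", 2)]:  # arrives late
    store.apply(event)

print(store.context_for(["inventory:SKU-123"]))  # inventory:SKU-123 = 12 units (v3)
```

The late version-2 event is rejected, so the assistant keeps quoting 12 units rather than regressing to 35.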

This enables AI systems to act on live, trustworthy information instead of stale snapshots. Nexla’s continuous data observability and automated policy enforcement make this real-time governance achievable without compromising traceability or compliance.

Teams often undermine AI initiatives through these common missteps:

Nexla does not just move data; it creates the governed, reliable knowledge base that context engineering requires.

The path to reliable enterprise AI is not found in more sophisticated prompt engineering alone. As large language models gain much larger context windows, they can technically “see” more information at once. But visibility without curation only amplifies noise. The expansion of context windows makes governance even more critical: enterprises must decide what enters those windows, why, and under what lineage guarantees. The future of trustworthy AI will depend less on how much context a model can ingest and more on how precisely that context is engineered.
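Deciding "what enters, why, and under what lineage" can be sketched as curation under a budget. This minimal example makes three assumptions:

- Each candidate snippet already carries a relevance score from some upstream retriever.
- Each snippet has an optional lineage ID.
- Token count is approximated by whitespace-separated words. A real system would use the model's own tokenizer.

Snippets without lineage are excluded outright. The rest are added greedily by relevance until the budget is spent, and every exclusion is recorded with its reason.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    text: str
    relevance: float             # assumed to come from an upstream retriever
    lineage_id: Optional[str]    # None means provenance is unknown


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def curate(candidates: list[Candidate],
           budget: int) -> tuple[list[Candidate], list[tuple[str, str]]]:
    """Greedy selection by relevance; returns (chosen, [(text, reason), ...])."""
    chosen: list[Candidate] = []
    rejected: list[tuple[str, str]] = []
    used = 0
    for cand in sorted(candidates, key=lambda c: c.relevance, reverse=True):
        cost = approx_tokens(cand.text)
        if cand.lineage_id is None:
            rejected.append((cand.text, "no lineage"))
        elif used + cost > budget:
            rejected.append((cand.text, "over budget"))
        else:
            chosen.append(cand)
            used += cost
    return chosen, rejected


pool = [
    Candidate("Q2 revenue was 4.1M per finance_mart", 0.92, "ln-042"),
    Candidate("Someone on Slack said revenue was 5M", 0.88, None),
    Candidate("Q2 revenue excludes deferred contracts signed after June 15", 0.75, "ln-043"),
    Candidate("Company history and founding story in long form text", 0.30, "ln-007"),
]
chosen, rejected = curate(pool, budget=16)
print([c.lineage_id for c in chosen])   # ['ln-042', 'ln-043']
print([reason for _, reason in rejected])  # ['no lineage', 'over budget']
```

Greedy selection is not an optimal packing. It is a simple, explainable default, and the rejection log gives auditors a record of why each snippet was left out.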

Context engineering assembles the pieces, but data governance ensures the pieces are sound. By investing in a foundation of governed data, with built-in lineage, resolved identities, and enforced constraints, you transform your data ecosystem from a liability into a trusted knowledge asset. This is what allows context engineering to fulfill its promise: turning AI from a potential source of hallucinations into a foundation of trust.

Context engineering is the systematic practice of controlling the information AI models consume at runtime. It matters because AI outputs are only as reliable as their inputs. Without proper context engineering, AI systems hallucinate, produce inconsistent results, and lack audit trails for compliance.

Context engineering reduces hallucinations by ensuring AI retrieves data from governed sources with verified lineage, unified identities, and quality controls. It relies on three pillars (provenance tracking, entity resolution, and domain constraints) to guard against “context poisoning,” where models present incorrect data as fact.

The three pillars are: (1) Provenance/Lineage for complete audit trails, (2) Entity Resolution to unify scattered identifiers into a single source of truth, and (3) Domain Constraints that validate data against business rules and policies before it reaches the AI model.

Prompt engineering optimizes how you ask AI questions. Context engineering governs the underlying data foundation AI draws from, ensuring knowledge bases are reliable, unified, and traceable through lineage, entity resolution, and metadata management. Better prompts can’t fix bad data.

Data lineage provides complete traceability for AI outputs, showing where data originated, what transformations occurred, and who approved it. This audit trail enables debugging, regulatory compliance, and stakeholder trust by allowing teams to trace any AI response back to its source in minutes.

RAG (Retrieval-Augmented Generation) retrieves external data for AI responses. Context engineering governs what data RAG systems can access, ensuring retrieved information is accurate, traceable, and compliant through lineage tracking and entity resolution.
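In a governed RAG pipeline, policy sits between retrieval and generation. The sketch below assumes a toy keyword-overlap retriever and hypothetical chunk metadata (`allowed_roles`, `lineage_id`). It drops any retrieved chunk that the requesting role may not see or that lacks lineage. It then returns chunk IDs alongside the text so that answers can cite their sources.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_roles: frozenset[str]
    lineage_id: Optional[str]


def retrieve(index: list[Chunk], query: str, k: int = 5) -> list[Chunk]:
    # Toy retriever: rank by the number of lowercase words shared with the query.
    terms = set(query.lower().split())
    scored = [(len(terms & set(c.text.lower().split())), c) for c in index]
    scored = [(s, c) for s, c in scored if s > 0]
    scored.sort(key=lambda sc: sc[0], reverse=True)
    return [c for _, c in scored[:k]]


def governed_retrieve(index: list[Chunk], query: str, role: str) -> list[tuple[str, str]]:
    """Retrieve, then keep only chunks the role may see and whose lineage is known."""
    permitted = [c for c in retrieve(index, query)
                 if role in c.allowed_roles and c.lineage_id is not None]
    return [(c.chunk_id, c.text) for c in permitted]


index = [
    Chunk("kb-1", "refund window is 30 days for all orders",
          frozenset({"support", "finance"}), "ln-101"),
    Chunk("kb-2", "refund exceptions approved by finance for enterprise orders",
          frozenset({"finance"}), "ln-102"),
    Chunk("kb-3", "old wiki: refund window is 60 days", frozenset({"support"}), None),
]
print(governed_retrieve(index, "what is the refund window", role="support"))
```

The old wiki page matches the query just as well as the current policy. It is filtered out only because it has no lineage. The finance-only exception is filtered out because the support role may not see it.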

Yes. Prompt engineering optimizes queries, but context engineering governs the data foundation. Even perfect prompts fail if the AI retrieves outdated, fragmented, or ungoverned data. Context engineering ensures your knowledge base is trustworthy before any prompt is written.

Request a demo or download our metadata integration guide to see how Nexla’s governed data products provide the context your AI can trust.


