Why do GenAI apps need reusable data products? Enterprise GenAI requires context from both structured databases (customers, orders, entitlements) and unstructured sources (PDFs, logs). Reusable data products unify these with join-aware retrieval, metadata controls, and governance. They close the context gaps behind a forecast that 30% of GenAI projects will be abandoned after proof of concept.

Introduction

The fastest way to lose trust in a GenAI assistant is a right-sounding answer built on the wrong context.

In enterprise settings, the correct answer rarely resides in a single place. It usually spans multiple sources, and you can only get to it by integrating structured data from databases with unstructured evidence from PDFs and logs.

When teams skip that foundation, GenAI apps reach scale with gaps in context, access control, and traceability. This helps explain why one forecast predicts that 30 percent of generative AI projects will be abandoned after proof of concept, often because data quality and risk controls do not survive the move to production.

This article explains the reusable data product pattern and demonstrates one implementation using Nexsets, a Common Data Model, and governed data flows.

Context Requires Joins, Not Chunks

RAG discussions often focus on chunking, but enterprise context is relational.

Structured systems provide stable keys for customers, orders, entitlements, SKUs, and tickets. They define eligibility, ownership, and time. Unstructured sources provide rules and evidence, such as policy PDFs, contracts, runbooks, transcripts, and audit logs.

Retrieval that ignores joins among these data types returns text that looks relevant but lacks the right eligibility, version, or timeline context. For example, a customer requests a refund on day 45. The assistant retrieves a clause that allows refunds for up to 60 days, but it applies only to premium plans and only after a policy update that took effect last quarter. The answer sounds correct, but it is wrong for that customer and wrong for that date.

The goal is not to abandon retrieval. It is to make retrieval join-aware so that each chunk can be filtered by eligibility, time, and policy version, and then stitched back into the structured record to make it actionable.
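To make the refund example concrete, here is a minimal Python sketch of a join-aware filter. It assumes each retrieved chunk already carries, as metadata, the plans it applies to and its effective dates; the `Entitlement` and `ClauseChunk` shapes, the clause IDs, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Entitlement:
    """Structured record from the database (hypothetical shape)."""
    customer_id: str
    plan: str


@dataclass(frozen=True)
class ClauseChunk:
    """Retrieved policy chunk carrying join keys as metadata (hypothetical shape)."""
    clause_id: str
    text: str
    plans: FrozenSet[str]          # plans the clause applies to
    effective_from: date
    effective_to: Optional[date]   # None means the clause is still in force


def applies(chunk: ClauseChunk, ent: Entitlement, on: date) -> bool:
    """True if the clause covers this customer's plan on the given date."""
    in_force = chunk.effective_from <= on and (
        chunk.effective_to is None or on < chunk.effective_to
    )
    return ent.plan in chunk.plans and in_force


def join_aware_filter(chunks: List[ClauseChunk], ent: Entitlement, on: date) -> List[dict]:
    """Drop chunks that do not join to this customer and date, then stitch
    each surviving chunk back to the structured record."""
    return [
        {"customer_id": ent.customer_id, "plan": ent.plan,
         "clause_id": c.clause_id, "text": c.text}
        for c in chunks
        if applies(c, ent, on)
    ]


# Sample chunks a vector search might return for "can I get a refund after 45 days?"
RETRIEVED = [
    ClauseChunk("refund-30-v1", "Refunds are available within 30 days.",
                frozenset({"basic", "premium"}), date(2023, 1, 1), None),
    ClauseChunk("refund-60-v2", "Premium plans may request refunds within 60 days.",
                frozenset({"premium"}), date(2024, 4, 1), None),
]
```

With this sample data, a basic-plan customer asking in May 2024 gets only `refund-30-v1`: the 60-day clause is semantically relevant but does not join to that plan. A real system would also need precedence rules when several clause versions apply at once.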

A refund decision shows the pattern. You need the customer's entitlement record, the policy clause that matches that plan and its effective date, and the incident log window showing what triggered the request and when.
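The three-part context can be sketched as a single assembly step. This is an illustrative sketch only, assuming normalized log events with `customer_id` and `ts` fields, an in-memory entitlement lookup, and a caller-supplied `find_clause` function standing in for the join-aware clause search; these names and the 7-day window are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Callable, List, Optional


def log_window(events: List[dict], customer_id: str,
               request_time: datetime, days: int = 7) -> List[dict]:
    """The customer's events in the `days` before the request, oldest first."""
    start = request_time - timedelta(days=days)
    return sorted(
        (e for e in events
         if e["customer_id"] == customer_id and start <= e["ts"] <= request_time),
        key=lambda e: e["ts"],
    )


def refund_context(entitlements: dict, find_clause: Callable, events: List[dict],
                   customer_id: str, request_time: datetime) -> Optional[dict]:
    """Assemble entitlement + applicable clause + incident window.

    Returns None if the entitlement or clause join fails, so the caller
    can decline instead of answering from partial context."""
    ent = entitlements.get(customer_id)
    if ent is None:
        return None
    clause = find_clause(ent["plan"], request_time.date())
    if clause is None:
        return None
    return {
        "entitlement": ent,
        "clause": clause,
        "incident_events": log_window(events, customer_id, request_time),
    }
```

Returning `None` when the entitlement or clause join fails is a deliberate choice: the assistant can decline rather than answer from partial context. An empty incident window, by contrast, is still valid context.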

Why Index-First RAG Breaks in Production

Teams building RAG systems usually start with the index. They push documents and logs into a vector store, add basic chunking, and rely on query-time retrieval to reconstruct the missing joins. That is fast for a demo but fragile in production, for the following reasons.

First, drift is constant. Schemas evolve, document templates change, and log formats shift. Jobs keep running while retrieval quality degrades: sections move, metadata fields drop, and embeddings no longer represent what you expect.
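One way to catch drift before it silently degrades retrieval is to check each prepared record against an expected contract. A minimal sketch, assuming records arrive as dictionaries; `EXPECTED_FIELDS` and its field names are hypothetical.

```python
from typing import Dict, List

# Hypothetical contract for one prepared policy-section record.
EXPECTED_FIELDS: Dict[str, type] = {
    "doc_id": str,
    "section_id": str,
    "text": str,
    "effective_from": str,
}


def detect_drift(record: dict, expected: Dict[str, type] = EXPECTED_FIELDS) -> Dict[str, List[str]]:
    """Compare one record against the expected contract."""
    return {
        "missing": sorted(k for k in expected if k not in record),
        "unexpected": sorted(k for k in record if k not in expected),
        "wrong_type": sorted(
            k for k, t in expected.items() if k in record and not isinstance(record[k], t)
        ),
    }


def drift_ratio(records: List[dict], expected: Dict[str, type] = EXPECTED_FIELDS) -> float:
    """Share of records that violate the contract in any way."""
    if not records:
        return 0.0
    drifted = sum(1 for r in records if any(detect_drift(r, expected).values()))
    return drifted / len(records)
```

A pipeline could alert or stop publishing when `drift_ratio` rises above an agreed threshold, instead of indexing renamed or retyped fields without notice.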

Second, duplication follows. Every team rebuilds parsing, chunking, metadata conventions, and refresh logic per app. One assistant indexes PDFs by page, another by header, and a third by paragraph. Now you have multiple versions of the same policy, each refreshed on a different schedule.

Third, access gaps appear. Source permissions do not reliably carry into indexes. If you simplify access control, you risk overexposure. If you lock retrieval down, you miss necessary context.
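A minimal sketch of carrying source permissions into the index, assuming each source exposes a document-level ACL as a list of group names. The `Chunk` shape and the `index_with_acl` and `authorized` helpers are hypothetical; the point is that permissions are copied at indexing time and enforced at retrieval time, denying by default.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, List


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_groups: FrozenSet[str]  # copied from the source ACL at indexing time


def index_with_acl(doc_id: str, sections: List[str],
                   source_acl: Dict[str, List[str]]) -> List[Chunk]:
    """Attach the source document's ACL to every chunk derived from it.
    Documents with no ACL entry get an empty group set (visible to nobody)."""
    groups = frozenset(source_acl.get(doc_id, ()))
    return [Chunk(f"{doc_id}#{i}", s, groups) for i, s in enumerate(sections)]


def authorized(chunks: Iterable[Chunk], user_groups: Iterable[str]) -> List[Chunk]:
    """Deny by default: keep a chunk only if the user shares at least one group."""
    ug = frozenset(user_groups)
    return [c for c in chunks if c.allowed_groups & ug]
```

Filtering before ranking avoids both failure modes named above: restricted text never reaches the prompt, and users still see everything their source permissions allow.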

Finally, you lose defensibility. Without versioning and lineage, teams cannot explain why an answer was produced, which policy version was used, or which records were joined.
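Defensibility comes down to recording, for every answer, which records were joined and which policy version was used. Here is a minimal sketch of such an audit record; `AnswerLineage` and its fields are hypothetical, not a specific product's schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class AnswerLineage:
    """Hypothetical audit record stored alongside every generated answer."""
    question: str
    answer: str
    record_ids: List[str]   # structured rows that were joined
    clause_ids: List[str]   # document sections that were retrieved
    policy_version: str     # policy version in force for this answer
    product_version: str    # version of the data product that served the context
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        """Hash of every field except the timestamp, so two answers built
        from identical inputs and outputs share a fingerprint."""
        payload = {k: v for k, v in asdict(self).items() if k != "created_at"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()
```

Comparing fingerprints across runs is one simple way to spot when the same question started producing answers from a different policy version or data product release.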

Four Ways Index-First RAG Fails in Production

| Failure Mode | What Happens | Why It Happens | Production Impact |
|---|---|---|---|
| Constant Drift | Retrieval quality degrades silently | Schemas evolve, document templates change, and log formats shift without detection | Jobs run but return wrong context; teams notice only after dashboards break |
| Duplication Explosion | Each team rebuilds the same logic differently | No standard for parsing, chunking, or metadata conventions | Multiple policy versions indexed differently; conflicting answers |
| Access Control Gaps | Source permissions don’t transfer to indexes | Simplifying access risks overexposure; locking it down misses context | Compliance violations or insufficient retrieval |
| Lost Defensibility | Cannot explain why an answer was produced | No versioning, lineage, or policy version tracking | Unable to audit, debug, or justify GenAI outputs |

Blueprint for a Reusable RAG Data Product

A reusable RAG data product is a named contract that unifies entities, document sections, and log events into a consistent structure to address the above challenges. This lets the RAG system retrieve joinable context instead of only pulling semantically similar text.

To make that work reliably, start with a stable internal model. A Common Data Model (CDM) defines canonical entities and relationships so that "entitlement," "policy clause," and "incident event" mean the same thing across sources.
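Mapping each source to a canonical entity might look like the following sketch. The `Entitlement` entity, the source names, and their field names are hypothetical; the idea is that each source gets one mapping to the shared model instead of each application learning every source's quirks.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Entitlement:
    """Hypothetical canonical CDM entity shared by every consumer."""
    customer_id: str
    plan: str
    source: str


# Hypothetical per-source mappings: canonical field -> source field name.
MAPPINGS: Dict[str, Dict[str, str]] = {
    "billing_db": {"customer_id": "cust_id", "plan": "plan_tier"},
    "crm_api": {"customer_id": "customerId", "plan": "subscription"},
}


def to_canonical(source: str, row: dict) -> Entitlement:
    """Map one source row into the canonical Entitlement, normalizing values."""
    m = MAPPINGS[source]
    return Entitlement(
        customer_id=str(row[m["customer_id"]]),
        plan=str(row[m["plan"]]).strip().lower(),
        source=source,
    )
```

Adding a new source then means adding one entry to `MAPPINGS`, not changing any downstream RAG app.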

Then add retrieval-grade metadata, such as owner, effective dates, freshness, sensitivity, domain tags, and stable IDs. This is how retrieval selects the correct version and avoids restricted content. Treat metadata as continuously captured rather than manually curated, following active metadata practices.
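A metadata gate applied before semantic ranking could look like this minimal sketch. The sensitivity labels, their ordering, the one-day freshness default, and the field names are all assumptions for illustration.

```python
from datetime import datetime, timedelta
from typing import List

# Hypothetical sensitivity ladder; real labels come from your governance policy.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


def passes_metadata_gate(meta: dict, clearance: str, now: datetime,
                         max_age: timedelta) -> bool:
    """Check owner, freshness, and sensitivity before any semantic ranking."""
    has_owner = bool(meta.get("owner"))
    is_fresh = now - meta["refreshed_at"] <= max_age
    is_cleared = SENSITIVITY_RANK[meta["sensitivity"]] <= SENSITIVITY_RANK[clearance]
    return has_owner and is_fresh and is_cleared


def candidates(items: List[dict], clearance: str, now: datetime,
               max_age: timedelta = timedelta(days=1)) -> List[str]:
    """IDs of items that may be passed on to similarity ranking."""
    return [i["id"] for i in items
            if passes_metadata_gate(i["meta"], clearance, now, max_age)]
```

Items without an owner are excluded here on the assumption that unowned content cannot be trusted or corrected; a team might instead route them to review.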

Next, build guardrails into the product. Validation, versioning, lineage, and Role-Based Access Control (RBAC) turn an answer into something explainable. If a clause has an effective date, enforce it. If a log event is malformed, quarantine it. If a user is not authorized, the join should not happen.
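The quarantine and authorization rules above can be sketched in a few lines. This assumes log events arrive as dictionaries with ISO 8601 timestamps and that roles are plain strings; `REQUIRED`, `validate_events`, and `guarded_join` are hypothetical names.

```python
from datetime import datetime
from typing import Iterable, List, Set, Tuple

# Hypothetical required fields for a normalized log event.
REQUIRED = ("event_id", "customer_id", "ts", "type")


def validate_events(raw_events: Iterable[dict]) -> Tuple[List[dict], List[dict]]:
    """Split raw log events into valid events and quarantined ones (with reasons)."""
    valid, quarantined = [], []
    for e in raw_events:
        missing = [k for k in REQUIRED if not e.get(k)]
        if missing:
            quarantined.append({"event": e, "reason": f"missing {missing}"})
            continue
        try:
            ts = datetime.fromisoformat(e["ts"])
        except (TypeError, ValueError):
            quarantined.append({"event": e, "reason": "bad timestamp"})
            continue
        valid.append({**e, "ts": ts})
    return valid, quarantined


def guarded_join(user_roles: Set[str], required_role: str,
                 record: dict, events: List[dict]) -> dict:
    """RBAC before joining: refuse the join entirely if the caller lacks the role."""
    if required_role not in user_roles:
        raise PermissionError(f"role {required_role!r} required")
    return {
        "record": record,
        "events": [e for e in events if e["customer_id"] == record["customer_id"]],
    }
```

Keeping quarantined events with a reason, rather than dropping them, leaves an audit trail for fixing the upstream format.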

Preserve meaning during preparation. PDFs should be split into sections and clauses. Logs should be normalized into events with consistent fields. Both should be tied to stable IDs so they can join back to the database truth.
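Splitting a policy PDF into clauses with stable IDs might look like this sketch. It assumes the PDF text has already been extracted and that each clause starts on its own line with a numbered heading such as "2.1 Basic"; body lines that happen to start with a number and a space would be misread as headings under that assumption.

```python
import hashlib
import re
from typing import Dict, List

# Assumption: each clause begins on its own line with a heading like "2.1 Basic".
HEADING = re.compile(r"^(\d+(?:\.\d+)*)[ \t]+(.+)$", re.MULTILINE)


def split_clauses(doc_id: str, version: str, text: str) -> List[Dict[str, str]]:
    """Split a policy document into clauses with stable IDs.

    The ID hashes doc_id + clause number (not the version), so the same
    clause keeps its ID across policy versions and `version` tells them apart."""
    matches = list(HEADING.finditer(text))
    clauses = []
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        number = m.group(1)
        clauses.append({
            "clause_id": hashlib.sha1(f"{doc_id}:{number}".encode("utf-8")).hexdigest()[:12],
            "doc_id": doc_id,
            "version": version,
            "number": number,
            "title": m.group(2).strip(),
            "text": text[m.end():end].strip(),
        })
    return clauses
```

Deriving the ID from the document and clause number, rather than from the clause text, means a reworded clause can still be joined to its earlier versions.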

Finally, publish once and reuse everywhere. Multiple RAG apps and agents should consume the same governed product instead of rebuilding the same logic in parallel.

How Nexla Builds Governed Data Products

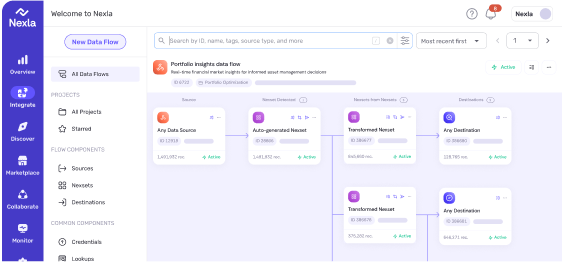

Nexla implements this pattern by treating data products as first-class assets rather than one-off pipeline outputs.

It starts with Nexsets, which package structured and unstructured data with schema, validation, access control, and auditability, so downstream consumers see a consistent shape even when upstream formats and protocols vary.

Next, the Common Data Model aligns semantics across sources. You define the internal contract once and map each source to it, rather than teaching every application how to interpret every source.

Then data flows deliver the same governed product to where it needs to go, including vector stores, warehouses, and operational apps, without rebuilding for each destination.

For data ingestion, Nexla’s connectors bring databases, file systems, and APIs into a single governed pattern. The key point is that reuse and governance travel with the product rather than being rebuilt for each pipeline.

Next Step: Productize One Decision

Stop treating context as a one-off index and start treating it as a product.

Pick one high-value decision that requires database records, PDFs, and logs together, such as refund eligibility, entitlement checks, contract exceptions, or incident-driven credits.

Standardize it into a governed Nexset backed by the CDM and delivered through flows. Once that decision is correct and auditable, expanding to adjacent decisions becomes a mapping exercise rather than a reinvention.

Want to Move GenAI Beyond Proof-of-Concept to Production?

Schedule a demo to see how Nexla creates governed, reusable data products that unify databases, PDFs, and logs with join-aware retrieval, metadata controls, and lineage tracking to power production-grade GenAI applications.

FAQs

Why does GenAI need both structured and unstructured data?

Structured databases provide stable keys, eligibility, and ownership (customers, orders, entitlements). Unstructured sources provide rules and evidence (policy PDFs, contracts, logs). GenAI answers require joins between both, such as matching a customer’s entitlement record to the policy clause version that was effective on the transaction date.

What is join-aware RAG retrieval?

Join-aware RAG retrieval filters chunks by eligibility, time, and policy version before stitching them back to structured records. This prevents retrieving semantically similar text that applies to the wrong customer, wrong plan, or wrong effective date, a common cause of incorrect GenAI answers in production.

Why does index-first RAG break in production?

Index-first RAG breaks because: (1) schemas and document formats drift without detection, (2) each team rebuilds parsing and chunking logic differently, creating version conflicts, (3) source permissions don’t carry into indexes, creating access gaps, and (4) without lineage and versioning, teams can’t explain which policy version produced an answer.

What makes a data product “reusable” for GenAI?

Reusable GenAI data products include: standardized schemas with a Common Data Model, retrieval-grade metadata (owner, effective dates, freshness, sensitivity), validation and versioning for explainability, preserved meaning (PDFs split into clauses, logs normalized to events), and governed delivery to multiple RAG apps without rebuilding logic.

How does a Common Data Model improve GenAI context quality?

A Common Data Model defines canonical entities and relationships so “entitlement,” “policy clause,” and “incident event” mean the same thing across all sources. This eliminates semantic ambiguity, enables reliable joins between structured and unstructured data, and prevents different teams from interpreting the same concept differently.

How do Nexsets enable reusable data products for GenAI?

Nexsets package structured and unstructured data with schema, validation, access control, lineage, and auditability. They enforce Common Data Model standards, preserve meaning during preparation (PDF sections, normalized log events), and deliver governed products to multiple destinations (vector stores, warehouses, and apps) without rebuilding per pipeline.