Most data teams spend their days putting out fires instead of driving insights. A tiny schema change can break reports, send engineers scrambling, and stall projects. Over time, that chaos starts to feel normal. In reality, data downtime and endless maintenance slow progress, and incomplete or inaccurate information carries heavy costs.

AI-powered transformation gives teams a break from brittle pipelines. It can detect schema drift, learn patterns, and adapt faster than hard-coded logic. However, it only works when a clear structure and simple rules guide it.

One way to ensure such consistency is to use Nexla’s Common Data Model (CDM), which provides a structured framework, keeps transformations consistent, and enables robust governance. It is also reusable across analytics and machine learning, giving AI systems a solid foundation to perform effectively.

In this article, we will look at how data transformation approaches have evolved. We will also go over how Nexla’s CDM can reduce manual effort and streamline the process.

Limitations of Data Transformation Approaches

Over time, teams have turned to ETL, ELT, and now AI-driven methods to manage data. While each step forward solved some problems, none came without tradeoffs.

Traditional ETL and ELT

Extract, Transform, and Load (ETL) has been the default for years. Teams extract data, transform it into a clean and consistent format, and then load it into a warehouse or data lake. The benefit is control. You define the rules up front. Pipelines run the same way every time. In stable systems, this works well.

In practice, data environments are rarely stable. Even a small change upstream, like renaming a field or adding a new type, can break jobs and stall reports. Teams then spend hours patching and retesting just to get things running again.
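A minimal sketch of that failure mode, assuming a hypothetical order record (the field names `customer_id`, `cust_id`, and `amount` are illustrative, not taken from any real system). The transform hard-codes its input field names, so it fails as soon as upstream renames one of them:

```python
def transform(record: dict) -> dict:
    """Hard-coded ETL step: assumes upstream field names never change."""
    return {
        "customer_id": record["customer_id"],
        "amount_usd": round(float(record["amount"]), 2),
    }


old_record = {"customer_id": "C-1", "amount": "19.999"}
new_record = {"cust_id": "C-1", "amount": "19.999"}  # upstream renamed the field

ok = transform(old_record)

try:
    transform(new_record)
    failed = False
    missing_field = None
except KeyError as exc:
    # The job stops here until someone patches the mapping by hand.
    failed = True
    missing_field = exc.args[0]
```

Nothing in the transform is wrong for the data it was written against; the breakage comes entirely from the upstream rename.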

Extract, Load, and Transform (ELT) emerged to add flexibility. You load first, then transform inside the destination engine. This approach provides downstream teams with faster access to raw data and the flexibility to tailor it to their specific needs. It fits modern lakes and lakehouses that support schema on read.

The tradeoffs are real. ELT pushes governance and privacy to the end. Teams must enforce masking, Role-Based Access Control (RBAC), and audit trails in the warehouse. As sources and teams grow, that enforcement becomes more complex.

Both models are helpful, yet they struggle to keep up with change. Those limits show up every day for data teams. Here are the common pain points.

Pain Points in Day-to-Day Operations

Even with ETL or ELT in place, teams face recurring challenges that slow them down:

- Small upstream changes break reports and jobs. A renamed field or a changed data type can stop pipelines and force quick fixes.

- The same concept appears under different names across various systems. This fragmentation leads to inconsistent labels, confusion, and extra mapping work.

- Data arrives in various formats, including CSV, JSON, XML, and SQL tables. Each requires separate handling and slows teams down.

- Most annotation is still performed manually. It takes time, adds cost, and mistakes slip in, hurting model accuracy and slowing adoption.

- Fixes require manual rules, reruns, and testing. This cycle repeats often and drains time.

- Access and privacy rules drift between tools, which creates gaps, complicates audits, and puts compliance at risk.

These challenges highlight the need for pipelines that can automate repetitive tasks and adapt dynamically as data changes.
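To make the format problem concrete, here is a small sketch using only the Python standard library. The sample payloads, the `PARSERS` registry, and the `load` helper are assumptions made for illustration. Each format needs its own parser before the records can even be compared:

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def parse_csv(text: str) -> list:
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


def parse_json(text: str) -> list:
    return json.loads(text)


def parse_xml(text: str) -> list:
    # Assumes a flat <rows><row><field>value</field>...</row></rows> layout.
    root = ET.fromstring(text)
    return [{child.tag: child.text for child in row} for row in root]


# Hypothetical registry: one handler per incoming format.
PARSERS = {"csv": parse_csv, "json": parse_json, "xml": parse_xml}


def load(fmt: str, text: str) -> list:
    return PARSERS[fmt](text)


csv_text = "id,email\n1,a@example.com\n"
json_text = '[{"id": "1", "email": "a@example.com"}]'
xml_text = "<rows><row><id>1</id><email>a@example.com</email></row></rows>"

records = [load("csv", csv_text), load("json", json_text), load("xml", xml_text)]
```

All three inputs describe the same row, yet each new format adds another parser to write, test, and maintain.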

Data Flows: A Step Toward Flexibility

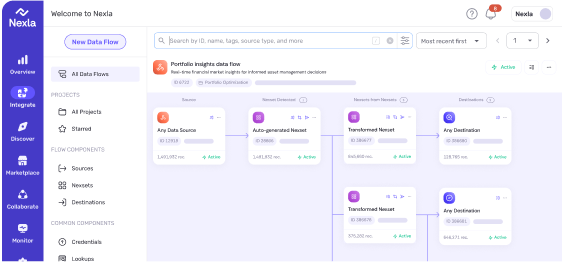

To address these operational bottlenecks, many teams are turning to data flows as a more flexible alternative to traditional pipelines. In Nexla, a Data Flow is a reusable, governed unit that works across any source or destination, rather than just an orchestrated task graph.

Instead of building one-off connections for each source, flows package the common steps into reusable pieces. When a schema update is applied to a flow, every team that uses it benefits, not just one. Analysts also get quicker access because they can use approved flows without waiting for engineers to rebuild jobs. This is a self-service, governed approach that reduces maintenance and speeds up onboarding of new sources.
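The reuse idea can be sketched in plain Python. This is not Nexla's Data Flow API; `make_flow` and the two steps are hypothetical names used only to show common steps being packaged once and applied to more than one source:

```python
from functools import reduce
from typing import Callable, Iterable

Step = Callable[[dict], dict]


def make_flow(*steps: Step) -> Callable[[Iterable[dict]], list]:
    """Package an ordered list of steps into one reusable callable."""
    def run(records: Iterable[dict]) -> list:
        return [reduce(lambda rec, step: step(rec), steps, record) for record in records]
    return run


def strip_whitespace(record: dict) -> dict:
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def lowercase_email(record: dict) -> dict:
    return {**record, "email": record["email"].lower()}


# Defined once, then shared by every source that carries an email field.
clean_contacts = make_flow(strip_whitespace, lowercase_email)

crm_rows = clean_contacts([{"email": " A@Example.com "}])
billing_rows = clean_contacts([{"email": "B@EXAMPLE.COM", "plan": "pro"}])
```

Changing `lowercase_email` in one place changes the behavior for both sources, which is the maintenance benefit the paragraph above describes.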

Still, data flows are only part of the solution. They reduce the repetitive work, but they do not adapt on their own. Mappings still need review. New fields still need approval. While flows lighten the load, they do not fully address constant change. That is where AI and a Common Data Model take the next step, making pipelines easier to manage and truly adaptive.

What AI Changed

AI pushes pipelines beyond static code. It can detect schema drift in real time, propose transformations automatically, and apply fixes faster than manual intervention can. This signals a shift toward data-centric AI, where fixing and improving data carries as much weight as refining the model.
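The detection half of this can be illustrated without any model at all. The sketch below is a simple rule-based comparison, not an AI system; `EXPECTED_SCHEMA`, `detect_drift`, and the sample record are assumptions for illustration. An adaptive pipeline would take a report like this as the starting point for proposing a fix:

```python
# Hypothetical expected schema: field name -> Python type.
EXPECTED_SCHEMA = {"customer_id": str, "amount": float, "created_at": str}


def detect_drift(expected: dict, record: dict) -> dict:
    """Compare one incoming record against the expected schema."""
    observed = {name: type(value) for name, value in record.items()}
    shared = expected.keys() & observed.keys()
    return {
        "missing": sorted(expected.keys() - observed.keys()),
        "unexpected": sorted(observed.keys() - expected.keys()),
        "type_changed": sorted(n for n in shared if observed[n] is not expected[n]),
    }


# Upstream renamed customer_id and started sending amount as a string.
incoming = {"cust_id": "C-1", "amount": "19.99", "created_at": "2024-01-01"}
report = detect_drift(EXPECTED_SCHEMA, incoming)
```

Here the report pairs a missing `customer_id` with an unexpected `cust_id`, which is the kind of signal a mapping suggestion could be built on.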

Large language models extend this further. They can generate transformation rules, infer mappings, and repair inconsistencies from just a few examples. Early results show that they can handle diverse wrangling tasks, including reformatting, entity matching, and deduplication, directly from prompts.

The result is a shift from brittle, rule-heavy pipelines to adaptive systems that continuously learn. Instead of waiting for humans to patch jobs after they fail, the pipeline itself proposes and validates the changes as new data comes in.

Challenges with AI Alone

AI cannot fully manage data transformation on its own. Some of the issues with AI alone are as follows:

- Automated mapping often fails when schemas are unclear or when different systems use different names for the same entity, which produces inconsistent output.

- Ground truths are scarce. With few reliable labeled examples, models struggle to learn the correct patterns. They may work on one dataset but fail to generalize when applied to new or evolving sources.

- Pipelines lose accuracy as data evolves. Without monitoring and retraining, schema and data drift steadily degrade performance.

- Governance is still a risk. Without proper guardrails, automation can bypass compliance checks, access controls, or masking rules. That creates inconsistency and weakens trust in the data.

This shows why AI alone is not reliable. Automation needs a proper framework to ensure consistency and reliability.

Nexla Common Data Model

The Common Data Model (CDM) closes the gaps that make AI-driven pipelines unreliable. It defines a shared standard that every source maps into, so new data arrives in a structure that’s already trusted across the organization. AI-generated mappings also adhere to these same rules, thereby maintaining governance integrity.

As data evolves, the CDM prevents drift and maintains consistent formats. Once converted into CDM format, datasets can be reused across teams and domains without needing to rebuild logic each time. That reduces duplication, speeds up delivery, and ensures analytics, reports, and downstream systems always start from the same reliable base.
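As a rough illustration of "every source maps into a shared standard", consider the sketch below. It does not reflect Nexla's actual CDM format or API; the canonical fields, the alias table, and `to_cdm` are hypothetical:

```python
# Hypothetical canonical model: canonical field -> names seen in source systems.
CDM_FIELDS = {
    "customer_id": {"customer_id", "cust_id", "customerId"},
    "email": {"email", "email_address"},
}

ALIAS_TO_CANONICAL = {
    alias: canonical
    for canonical, aliases in CDM_FIELDS.items()
    for alias in aliases
}


def to_cdm(record: dict) -> tuple:
    """Return (mapped, unmapped): canonical fields plus anything left for review."""
    mapped, unmapped = {}, {}
    for name, value in record.items():
        canonical = ALIAS_TO_CANONICAL.get(name)
        if canonical is None:
            unmapped[name] = value
        else:
            mapped[canonical] = value
    return mapped, unmapped


crm = {"cust_id": "C-1", "email_address": "a@example.com"}
billing = {"customerId": "C-1", "email": "a@example.com", "plan": "pro"}

crm_mapped, crm_unmapped = to_cdm(crm)
billing_mapped, billing_unmapped = to_cdm(billing)
```

Both sources end up in the same shape, and the field the model does not know (`plan`) is set aside rather than silently passed through, which leaves room for a reviewer or a mapping suggestion to decide what to do with it.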

How Nexla CDM Powers Data Transformation

Nexla’s CDM standardizes structure and enhances transformation with built-in intelligence. Here’s how:

- AI-driven ingestion detects file formats and proposes mappings, making setup faster.

- Versioning and auditing track every change and log validation results, so you always know what changed and why.

- Drift monitoring watches for schema or content shifts, with options to auto-fix or quarantine suspect records.

- Reusability is baked in. Once a dataset conforms to the CDM, it can be routed through any of 500+ connectors.

- Human-in-the-loop controls enable teams to approve changes, maintain traceability, and enforce privacy safeguards, such as PII masking.
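Two of the capabilities listed above, quarantining suspect records and masking PII, can be sketched generically. This is not Nexla's implementation; `REQUIRED`, `PII_FIELDS`, `mask`, and `route` are hypothetical, and truncated hashing stands in for whatever masking policy an organization actually applies:

```python
import hashlib

REQUIRED = {"customer_id", "email"}  # assumed required canonical fields
PII_FIELDS = {"email"}               # assumed fields that must be masked


def mask(value: str) -> str:
    """Replace a PII value with a short, stable hash token."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def route(records: list) -> tuple:
    """Split records into accepted (masked) and quarantined (held for review)."""
    accepted, quarantined = [], []
    for record in records:
        missing = REQUIRED - record.keys()
        if missing:
            quarantined.append({"record": record, "reason": f"missing: {sorted(missing)}"})
            continue
        accepted.append({k: mask(v) if k in PII_FIELDS else v for k, v in record.items()})
    return accepted, quarantined


records = [
    {"customer_id": "C-1", "email": "a@example.com"},
    {"customer_id": "C-2"},  # incomplete record
]
accepted, quarantined = route(records)
```

The quarantined list keeps the original record and a reason, so a reviewer can approve, fix, or reject it instead of the pipeline dropping it or failing outright.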

Next Steps for Adaptive Data Transformation

Data transformation is not about fixing jobs after they break. It is about building pipelines that adapt on their own. Nexla’s CDM provides AI with the structure it needs and teams with a standard they can trust. With the CDM, teams can:

- Reduce manual work with automated mapping and schema fixes.

- Keep transformations consistent and reusable.

- Start small by connecting a few sources.

- Scale by refining once and reusing everywhere.

Get Nexla’s CDM to build scalable, AI-based transformation pipelines.