Nexla and Vespa.ai Partner to Simplify Real-Time AI Search Across Hundreds of Enterprise Data Sources

Nexla and Vespa.ai partner to simplify real-time enterprise AI search, connecting 500+ data sources to power RAG, vector retrieval, and AI apps.

Poor data quality costs organizations an estimated 15% to 25% of their operating budget. Errors, duplicates, and inconsistent formats lead to wrong insights and compliance risks.

Robust ETL (Extract, Transform, Load) processes overcome these issues by moving data from different sources and preparing it for analysis. But when done manually, ETL can introduce errors and delays that lower trust in the results.

Automated ETL tools can change that. These tools reduce human error, enforce data standards, and ensure consistent outputs across systems. Platforms like Nexla make this process practical and scalable by connecting any source, validating and transforming data, and delivering reliable datasets for teams.

In this article, we’ll look at how automated ETL improves data quality and consistency and why it matters for business success. We will also discuss how modern solutions make it possible.

Businesses collect data from many places, such as websites, APIs, customer systems, cloud apps, internal databases, and external partners. With so many formats, errors and missing information are common. That is why 67% of organizations say they do not fully trust their data when making decisions.

Manual fixes only make things harder. Teams often rely on spreadsheets or custom scripts, which are slow and repetitive. Each correction can create new mistakes, and fixes are rarely applied the same way across different systems. The result is multiple versions of the same data, messy pipelines, and reporting that no one can fully rely on. Compliance risks also increase, while teams spend most of their time just keeping data usable.

Poor-quality data affects more than reporting. It reduces the value of analytics dashboards, AI models, and automation tools. To get the full benefit of these technologies, companies need scalable ways to manage and standardize their data.

Solving these problems does more than clean up errors. It improves decision-making, ensures compliance, and builds customer trust.

Strong data quality and consistency bring direct business value. Here are some of the benefits of high-quality data for businesses:

Traditional ETL has served organizations well for many years. It extracts data from multiple systems, applies business rules to transform it, and then loads it into a central database or warehouse. The process standardizes formats, removes duplicates, and enforces consistency across datasets. As a result, organizations have been able to place greater trust in their reports and analytics.
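The three stages can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the two CSV exports, the `customers` table, and the in-memory SQLite database standing in for a central warehouse are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical exports from two systems; real pipelines would read files, APIs, or databases.
CRM_CSV = "email,name\nAda@Example.com,Ada Lovelace\nbob@example.com,Bob Stone\n"
SHOP_CSV = "email,name\nada@example.com,Ada Lovelace\ncara@example.com,Cara Diaz\n"


def extract(text):
    """Extract: parse one CSV export into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize emails and drop duplicates, keeping the first occurrence."""
    seen = set()
    clean = []
    for row in rows:
        email = row["email"].strip().lower()
        if email in seen:
            continue
        seen.add(email)
        clean.append((email, row["name"].strip()))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into a central table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a warehouse
load(transform(extract(CRM_CSV) + extract(SHOP_CSV)), conn)
customer_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(customer_count)
```

The same customer appears in both exports with different capitalization, so the transform step is what keeps the loaded table free of duplicates.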

However, as data sources have grown more varied and volumes have increased, traditional ETL has struggled to keep pace. Its limitations include:

Due to these limitations, many companies find themselves spending more time fixing data pipelines than using the data to drive insights. This is why automation has become so important in modern ETL processes.

Traditional ETL requires manual effort to design, code, and maintain data pipelines. Automated ETL tools build on the same foundation but go further by reducing the amount of manual work required at every stage. They use built-in connectors, pre-configured transformations, and intelligent monitoring to streamline the flow of data from source to destination.

Automated ETL tools make the whole data standardization process faster and easier. They reduce the need for manual work by handling tasks like:

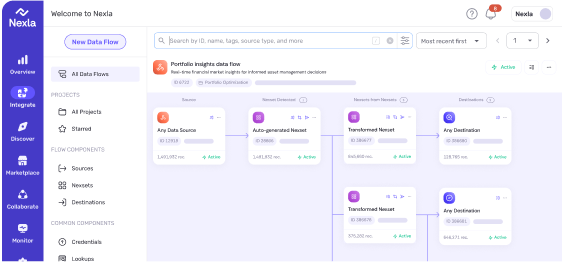

Platforms like Nexla and Fivetran are widely used for automation. Nexla stands out with its no-code and low-code design. It allows both technical and non-technical users to build data flows. It also offers reusable data products and built-in monitoring to ensure ongoing data quality and consistency.

Automated ETL tools are designed to enforce standards and ensure data reliability across the company. They address the weaknesses of manual ETL by combining automation, monitoring, and repeatability. Here is how automated ETL tools improve data quality and consistency:

Automated ETL tools simplify data standardization by offering pre-built transformations and validation rules. They ensure consistent formats, like aligning date formats or naming conventions, across all datasets. This consistency makes data integration easier and helps improve the accuracy of analytics.
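As a rough illustration of what such a pre-built transformation does, the sketch below aligns column names to snake_case and dates to ISO 8601. The function names, the `order_date` field, and the list of accepted input formats are assumptions for this example, not part of any specific tool. Note that a value like `03/02/2024` is ambiguous, so the day-first reading here is a choice that would need to be configured per source.

```python
import re
from datetime import datetime

# Assumed input formats; a real pipeline would configure these per source.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def to_snake_case(name):
    """Normalize a column name such as 'Order Date' or 'orderDate' to 'order_date'."""
    name = re.sub(r"([a-z0-9])([A-Z])", r"\1_\2", name.strip())
    name = re.sub(r"[^A-Za-z0-9]+", "_", name)
    return name.strip("_").lower()


def to_iso_date(value):
    """Parse a date in any of the assumed formats and return YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def standardize(record):
    """Apply the naming and date conventions to one record."""
    out = {to_snake_case(key): value for key, value in record.items()}
    if "order_date" in out:
        out["order_date"] = to_iso_date(out["order_date"])
    return out


standardized = [
    standardize({"Order Date": "03/02/2024", "Customer-ID": "42"}),
    standardize({"orderDate": "2024-02-03", "customerId": "42"}),
]
print(standardized)
```

Both records end up with identical keys and the same date string, which is what makes them joinable downstream.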

Manual handling often leads to errors, but automated ETL minimizes this risk by validating data as it flows in. Many tools include built-in quality checks that can identify duplicates, missing values, or outliers, while some even apply anomaly detection to spot unusual patterns during ingestion. This safety net helps increase trust in the data.
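The checks described above could look roughly like the following sketch. The `validate` function and the sample rows are hypothetical. Outliers are flagged with a modified z-score based on the median absolute deviation, using the commonly cited 3.5 cutoff. That is one reasonable choice among several, not the method any particular tool is known to use.

```python
from statistics import median


def validate(rows, key, required, numeric_field, threshold=3.5):
    """Report duplicate keys, missing required values, and numeric outliers by row index."""
    report = {"duplicates": [], "missing": [], "outliers": []}
    seen = set()
    for i, row in enumerate(rows):
        if row.get(key) in seen:
            report["duplicates"].append(i)
        seen.add(row.get(key))
        for field in required:
            if row.get(field) in (None, ""):
                report["missing"].append((i, field))

    # Modified z-score: robust to the very outliers it is trying to find.
    values = [(i, row[numeric_field]) for i, row in enumerate(rows)
              if isinstance(row.get(numeric_field), (int, float))]
    if values:
        med = median(v for _, v in values)
        mad = median(abs(v - med) for _, v in values)
        if mad > 0:
            for i, v in values:
                if 0.6745 * abs(v - med) / mad > threshold:
                    report["outliers"].append(i)
    return report


rows = [
    {"id": 1, "email": "a@x.com", "amount": 20.0},
    {"id": 2, "email": "", "amount": 22.0},
    {"id": 2, "email": "b@x.com", "amount": 19.0},
    {"id": 3, "email": "c@x.com", "amount": 21.0},
    {"id": 4, "email": "d@x.com", "amount": 5000.0},
]
report = validate(rows, key="id", required=["email"], numeric_field="amount")
print(report)
```

In a pipeline, a non-empty report would typically route the affected rows to quarantine rather than stop the whole load, though that policy depends on the use case.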

Data lineage is important for compliance and auditing. Automated ETL tools document each step of the transformation process automatically. This makes it easy to trace how a dataset was created, which sources were used, and which rules were applied. Better traceability helps teams meet regulatory requirements and improves accountability.
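To make the idea concrete, here is a minimal sketch of automatic lineage capture. The `TrackedDataset` class, the rule functions, and the `crm_export.csv` source name are all hypothetical. Real tools record far richer metadata, but the principle of logging every step as a side effect of running it is the same.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _now():
    return datetime.now(timezone.utc).isoformat()


@dataclass
class TrackedDataset:
    """Rows plus an append-only log of how they were produced (hypothetical helper)."""
    rows: list
    lineage: list = field(default_factory=list)

    @classmethod
    def from_source(cls, source_name, rows):
        rows = list(rows)
        entry = {"step": "extract", "source": source_name,
                 "rows_out": len(rows), "at": _now()}
        return cls(rows, [entry])

    def apply(self, rule_name, func):
        """Run one transformation and record which rule ran and how row counts changed."""
        new_rows = func(self.rows)
        entry = {"step": "transform", "rule": rule_name,
                 "rows_in": len(self.rows), "rows_out": len(new_rows), "at": _now()}
        return TrackedDataset(new_rows, self.lineage + [entry])


def drop_missing_email(rows):
    return [r for r in rows if r.get("email")]


def lowercase_email(rows):
    return [{**r, "email": r["email"].lower()} for r in rows]


raw = [{"email": "Ada@Example.com"}, {"email": ""}, {"email": "bob@example.com"}]
dataset = (TrackedDataset.from_source("crm_export.csv", raw)
           .apply("drop_missing_email", drop_missing_email)
           .apply("lowercase_email", lowercase_email))

for entry in dataset.lineage:
    print(entry)
```

Because each `apply` call returns a new dataset with an extended log, an auditor can read the lineage top to bottom and see the source, every rule, and where rows were dropped.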

Modern ETL automation includes continuous monitoring of pipelines. The system raises an alert as soon as there is a failure or an unexpected change. Some tools also track data drift, where values shift over time and impact accuracy. Early detection of problems can help prevent poor-quality data from reaching business reports or machine learning models.
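A very simple form of drift detection compares each new batch against a trusted baseline. The sketch below flags a batch whose mean is more than a chosen number of standard errors from the baseline mean. The `drift_alert` function, the sample values, and the threshold of 3 are illustrative assumptions. Production monitors often use richer distribution tests.

```python
import math
from statistics import mean, stdev


def drift_alert(baseline, batch, max_z=3.0):
    """Return (alert, z), where z measures how far the batch mean sits from the baseline mean."""
    base_mean = mean(baseline)
    base_sd = stdev(baseline)
    if base_sd == 0:
        # Constant baseline: any change in the mean counts as drift.
        shifted = mean(batch) != base_mean
        return shifted, math.inf if shifted else 0.0
    standard_error = base_sd / math.sqrt(len(batch))
    z = (mean(batch) - base_mean) / standard_error
    return abs(z) > max_z, z


baseline = [100, 102, 98, 101, 99, 100, 103, 97]   # historical order values
stable_batch = [101, 99, 100, 102]
drifted_batch = [110, 112, 108, 111]

stable_alert, stable_z = drift_alert(baseline, stable_batch)
drifted_alert, drifted_z = drift_alert(baseline, drifted_batch)
print(stable_alert, round(stable_z, 2))
print(drifted_alert, round(drifted_z, 2))
```

A monitor would run a check like this after every load and notify the owning team when the alert flag is set, before the batch reaches reports or models.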

Manual processes struggle to keep up with growing data volumes, but automated ETL tools are designed to handle large workloads. Once rules are defined, they can be applied repeatedly to new datasets with the same consistency. This repeatability ensures accuracy and efficiency at scale.

Automated ETL tools solve many data problems, but adoption still comes with challenges. Organizations need to plan carefully to get the full benefits.

Adopting automated ETL works best when there is a clear plan. The following best practices can help organizations avoid common pitfalls and get more value from their tools.

Nexla is built for automated ETL and ELT. It helps teams deliver clean, consistent data without heavy coding or complex maintenance. Here are a few ways it helps:

Automated ETL is changing fast as new technologies improve how data is prepared and managed. The shift is from basic automation to smarter and more flexible systems.

AI and machine learning are increasingly central to data preparation. These technologies can identify patterns, detect anomalies, and even recommend transformations automatically. This reduces manual setup and makes pipelines more adaptive over time.

With the growth of cloud data warehouses like Snowflake and BigQuery, many organizations are shifting from ETL to ELT. Instead of transforming data before loading, raw data is loaded first and transformed inside powerful cloud platforms.
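The difference in ordering can be shown with a small sketch. An in-memory SQLite database stands in for a cloud warehouse here, which is purely an assumption for the sake of a runnable example, and the table and column names are hypothetical. The raw rows are loaded untouched, and the cleanup then runs as SQL inside the database engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Load: raw records land in the warehouse exactly as they arrived.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [
        ("A1", "19.99", "us"),
        ("A2", " 5.00", "US "),
        ("A2", " 5.00", "US "),  # duplicate delivery of the same record
        ("A3", "12.50", "de"),
    ],
)

# Transform: typing, trimming, and deduplication run inside the database.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT DISTINCT
        order_id,
        CAST(TRIM(amount) AS REAL) AS amount,
        UPPER(TRIM(country)) AS country
    FROM raw_orders
    """
)

orders = conn.execute(
    "SELECT order_id, amount, country FROM orders ORDER BY order_id"
).fetchall()
print(orders)
```

Keeping `raw_orders` around is part of the appeal: if a transformation rule changes, the clean table can be rebuilt from the raw data without re-extracting from the source.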

Modern automated ETL tools connect directly with cloud warehouses and lakehouses. This allows teams to unify structured and unstructured data in one place. It also makes analytics more flexible and comprehensive.

Data observability platforms are becoming an important part of ETL pipelines. They monitor data health, freshness, and reliability across the lifecycle. This trend suggests that automation alone is insufficient and that visibility and trust are just as critical for long-term success.

Automated ETL tools play a key role in delivering high-quality and consistent data. With clean and reliable data, organizations can make better decisions and build trust with customers.

Adopting automation gives businesses a strategic advantage. Teams can focus on insights rather than manual fixes, and data pipelines can keep pace with growth and evolving business needs.

To achieve this, companies need a platform that not only automates workflows but also enforces data quality and scales as demand grows. Nexla can help automate and standardize your data pipelines. Schedule a demo today and see how trusted data can drive faster decisions and better outcomes.

