Nexla and Vespa.ai Partner to Simplify Real-Time AI Search Across Hundreds of Enterprise Data Sources

Nexla and Vespa.ai partner to simplify real-time enterprise AI search, connecting 500+ data sources to power RAG, vector retrieval, and AI apps.

You need a clear operational strategy to run reliable and fast data analysis pipelines on Apache Iceberg. That strategy must cover data versioning, schema evolution, catalog selection, and automated table maintenance to ensure performance and consistency at scale.

In this article, we walk through operational benefits, like time travel, easy schema changes, and safe handling of deletes and updates.

We’ll discuss catalog options from simple file-system catalogs to more advanced ones like Nessie and REST. Lastly, we will wrap up with best practices for maintaining your data, like reducing data size and cleaning up metadata, and show how Nexla can help you manage everything smoothly.

Apache Iceberg solves the persistent problems found in traditional data lakes built on formats like Hive. It adds a strong layer of metadata and features that make managing large datasets more reliable and straightforward. Here are the key features that set Iceberg apart:

Iceberg’s reliability mainly comes from its use of snapshots. Every change you make to an Iceberg table, whether it’s adding data, deleting rows, or changing the schema, creates a new, unchangeable snapshot.

Each snapshot is a complete view of the table at a specific moment in time. Every commit writes a new metadata.json file that records the table's schema, partition layout, and snapshot history, and each snapshot in turn points to the manifests that list the data files for that version.

This design makes “time travel” possible because you can query the table exactly as it was at any previous snapshot. This capability opens up several important use cases.
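To make the idea concrete, here is a minimal sketch of how a time-travel lookup could resolve a timestamp to a snapshot. It is a toy in-memory model, not the Iceberg API: the `Snapshot` class, the `snapshot_as_of` helper, and the file names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Snapshot:
    """Toy stand-in for an immutable table snapshot (hypothetical model)."""
    snapshot_id: int
    timestamp_ms: int
    data_files: frozenset  # files visible in this version of the table


def snapshot_as_of(history: List[Snapshot], timestamp_ms: int) -> Optional[Snapshot]:
    """Return the latest snapshot committed at or before timestamp_ms."""
    candidates = [s for s in history if s.timestamp_ms <= timestamp_ms]
    return max(candidates, key=lambda s: s.timestamp_ms) if candidates else None


history = [
    Snapshot(1, 1_000, frozenset({"a.parquet"})),
    Snapshot(2, 2_000, frozenset({"a.parquet", "b.parquet"})),
    Snapshot(3, 3_000, frozenset({"b.parquet"})),  # a.parquet was removed here
]

as_of = snapshot_as_of(history, 2_500)
print(as_of.snapshot_id, sorted(as_of.data_files))  # 2 ['a.parquet', 'b.parquet']
```

Because older snapshots are never modified, the lookup only has to pick the right entry from the history. No data is copied or restored.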

Making changes to the structure of a table or its partitioning scheme used to be risky in traditional data lakes and often required rewriting the entire table. Iceberg solves this by allowing such changes to be made easily through its schema and partition evolution features.

Since Iceberg tracks every column by a unique ID in its metadata, rather than by name or position, you can add, remove, rename, or reorder columns safely without rewriting existing data files. Older data files are read through the current schema by matching column IDs, so a renamed column still resolves to the right data and a newly added column reads as null for older rows. Iceberg handles this smoothly when you run queries.
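The sketch below illustrates why renames and additions are safe when columns are resolved by ID instead of by name. It is a simplified model under stated assumptions: rows are plain dictionaries keyed by field ID, and `schema_v1`, `schema_v2`, and `project` are hypothetical names, not Iceberg calls.

```python
# Rows as stored in data files, keyed by column (field) ID rather than name.
old_file_rows = [{1: 101, 2: "alice"}]             # written under schema_v1
new_file_rows = [{1: 102, 2: "bob", 3: "US"}]      # written under schema_v2

schema_v1 = {1: "id", 2: "name"}
schema_v2 = {1: "id", 2: "full_name", 3: "country"}  # column 2 renamed, column 3 added


def project(rows, schema):
    """Read rows through a schema by matching field IDs; missing columns become None."""
    return [{name: row.get(field_id) for field_id, name in schema.items()} for row in rows]


result = project(old_file_rows + new_file_rows, schema_v2)
for row in result:
    print(row)
```

The old file was never rewritten, yet its `name` values show up under `full_name`, and `country` is simply empty for the older row.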

Similarly, Iceberg partition evolution lets you change how a table is partitioned (divided into parts) as your data and query patterns evolve. For example, you might initially divide user data by month, but later decide that dividing by day works better.

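Iceberg allows you to update the partition rules without rewriting old data. Existing files keep the layout they were written with, and the metadata records both the old and new partition schemes, so query planning handles each layout separately and reads only the relevant files. Because of hidden partitioning, queries do not need to change when the layout does.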
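As a rough illustration of the month-to-day example, the sketch below keeps files written under two partition schemes side by side and prunes each with its own rule. The spec IDs, the `SPECS` mapping, and `files_for_day` are assumptions made for this toy model.

```python
from datetime import date


def month_of(d: date):
    return (d.year, d.month)


def day_of(d: date):
    return d


# Hypothetical partition schemes: spec 0 is the original (monthly), spec 1 the new one (daily).
SPECS = {0: month_of, 1: day_of}

files = [
    {"path": "f1", "spec_id": 0, "partition": (2024, 1)},
    {"path": "f2", "spec_id": 0, "partition": (2024, 2)},
    {"path": "f3", "spec_id": 1, "partition": date(2024, 3, 5)},
    {"path": "f4", "spec_id": 1, "partition": date(2024, 3, 6)},
]


def files_for_day(files, target: date):
    """Keep files whose partition value matches the target under the file's own spec."""
    return [f["path"] for f in files if SPECS[f["spec_id"]](target) == f["partition"]]


print(files_for_day(files, date(2024, 2, 14)))  # ['f2']
print(files_for_day(files, date(2024, 3, 6)))   # ['f4']
```

The same query filter works across both layouts. Older months are pruned at month granularity, newer data at day granularity.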

Early data lakes faced a major issue because modifying or removing individual records required reprocessing and rewriting large parts of the table, which was slow and error-prone. Iceberg addresses this by bringing full ACID (Atomicity, Consistency, Isolation, Durability) compliance to the data lake.

Now, with Iceberg, you can delete, update, or merge data at the row level. In merge-on-read mode, when a row is deleted, Iceberg doesn’t physically remove it from the original data file right away. Instead, it writes a delete file that records which rows should no longer appear.

When you query the table, the query engine reads both the main data files and the delete files. It excludes any rows marked as deleted. This approach ensures the table always reflects the most accurate and up-to-date view of the data, without physically rewriting large data files. It also supports advanced use cases like Change Data Capture (CDC) and compliance-driven deletion (e.g., for GDPR).
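The read path described above can be sketched in a few lines. This toy version models position-style deletes as (file path, row position) pairs. The file name, the `scan` function, and the in-memory layout are hypothetical and far simpler than a real engine.

```python
# Data files stay untouched; deletes are recorded separately.
data_files = {
    "data-001.parquet": [{"id": 1}, {"id": 2}, {"id": 3}],
}

# Each entry marks one row as deleted: (file path, row position within that file).
position_deletes = {("data-001.parquet", 1)}


def scan(data_files, position_deletes):
    """Yield every row that is not masked by a delete entry."""
    for path, rows in data_files.items():
        for pos, row in enumerate(rows):
            if (path, pos) not in position_deletes:
                yield row


visible = list(scan(data_files, position_deletes))
print(visible)  # [{'id': 1}, {'id': 3}]
```

The deleted row is still physically present in the data file. It only disappears for good once a later rewrite, such as compaction, drops it.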

In old Hive tables, query engines had to list every directory to discover a table’s partitions, which made query planning slow for large tables. Users also had to filter on partition columns explicitly in their queries to get better performance.

Iceberg simplifies this with hidden partitioning. Instead of relying on directory structures, Iceberg tracks partition values in manifest files, which are part of its metadata layer. When a query runs, the engine consults these manifest files to quickly identify which data files are relevant and skips over the rest. This cuts down the amount of data that needs to be read, without requiring the user to understand the physical layout of the table.
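Here is a minimal sketch of that planning step, assuming a table partitioned by the day of an event timestamp. The manifest is modeled as a plain list, and `plan_scan`, `event_day`, and the file names are illustrative assumptions.

```python
from datetime import date, datetime

# Toy manifest: one entry per data file with its recorded partition value.
manifest = [
    {"path": "f1.parquet", "event_day": date(2024, 5, 1)},
    {"path": "f2.parquet", "event_day": date(2024, 5, 2)},
    {"path": "f3.parquet", "event_day": date(2024, 5, 3)},
]


def plan_scan(manifest, ts_from: datetime, ts_to: datetime):
    """Select files whose day partition can overlap the timestamp range."""
    lo, hi = ts_from.date(), ts_to.date()
    return [entry["path"] for entry in manifest if lo <= entry["event_day"] <= hi]


# The user filters on the raw timestamp and never mentions the partition column.
selected = plan_scan(manifest, datetime(2024, 5, 2, 8), datetime(2024, 5, 2, 20))
print(selected)  # ['f2.parquet']
```

The filter is written against the timestamp itself. Deriving the day values and skipping the other two files happens entirely in planning.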

Any processing engine that supports Iceberg can read from and write to its tables correctly. This means you can have a Spark job processing data, a Flink pipeline analyzing data in real time, and a Trino cluster providing ad-hoc SQL access for analysts. These systems all operate on the same Iceberg tables, ensuring a unified data view across engines.

This ability to work across multiple engines is important for building a flexible data system that’s easy to update and less dependent on a single vendor.

While Iceberg’s metadata files track the state of a table, the catalog tells query engines where to find the current metadata file for a given table name. It acts as a guide that connects a table’s name to the location of its latest metadata file.

More importantly, the catalog maintains data consistency. When you commit a change to a table, the catalog must atomically swap its pointer from the old metadata file to the new one. If two writers try to commit at the same time, only one swap succeeds and the other writer has to retry. This prevents errors and ensures all users and engines see the same data view at the same time.
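The pointer swap can be pictured as a compare-and-swap operation. The sketch below is a toy in-memory catalog, not any real catalog implementation: `ToyCatalog`, its methods, and the metadata file names are hypothetical.

```python
import threading


class ToyCatalog:
    """Maps a table name to the location of its current metadata file."""

    def __init__(self):
        self._pointers = {}
        self._lock = threading.Lock()

    def current(self, table):
        return self._pointers.get(table)

    def commit(self, table, expected, new):
        """Swap the pointer only if it still equals what the writer started from."""
        with self._lock:
            if self._pointers.get(table) != expected:
                return False  # another writer committed first; caller must retry
            self._pointers[table] = new
            return True


catalog = ToyCatalog()
created = catalog.commit("db.events", None, "v1.metadata.json")

# Two writers both start from v1 and race to commit.
base = catalog.current("db.events")
first = catalog.commit("db.events", base, "v2-writer-a.metadata.json")
second = catalog.commit("db.events", base, "v2-writer-b.metadata.json")

print(first, second, catalog.current("db.events"))
# True False v2-writer-a.metadata.json
```

The losing writer would normally reload the latest metadata, reapply its changes, and try again. That is why the catalog's ability to perform this swap atomically matters so much when comparing the options below.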

Choosing the right catalog affects how well the system scales, handles transactions, and manages data governance. Let’s explore the most common options:

The Hadoop Catalog is the simplest option. It stores table metadata directly in the file system (like HDFS or S3) alongside the data.

The Hive Catalog is a common choice since it uses the Hive Metastore (HMS) service as the central catalog.

For teams heavily invested in AWS, the Glue Data Catalog is an easy-to-use, managed alternative to running their own Hive Metastore.

The JDBC Catalog uses a relational database (like PostgreSQL or MySQL) via a JDBC connection to keep track of table information.

The REST Catalog is a standard REST API specification that any service can use to act as an Iceberg catalog.

Nessie is an open-source catalog that organizes and manages data in a way similar to how Git works for code, with branches, tags, and commits that can span multiple tables.

The table below summarizes the operational trade-offs between the Iceberg catalog options discussed above.

| Catalog | Atomic Writes | Multi-Table Support | Versioning | Setup Complexity | Cloud Native | Notes |
|---|---|---|---|---|---|---|
| Hadoop | Yes (limited) | ✗ | Limited | Low | ✗ | Local FS-based |
| Hive Metastore | Yes | ✗ | ✗ | Medium | ✗ | Most compatible |
| AWS Glue | Yes | ✗ | ✗ | Low | ✓ | Best for AWS users |
| JDBC | Yes | ✗ | ✗ | High | ✗ | Needs DB expertise |
| REST | Yes | ✓ | ✓ | High | ✓ | Decoupled, scalable |
| Nessie | Yes | ✓ | ✓ | High | ✓ | Git-style governance |

When Iceberg tables are created and data pipelines are operational, you need to perform regular maintenance to keep them running smoothly and save costs. These maintenance tasks also need to be scheduled and automated.

When data is ingested in small chunks frequently, such as with streaming data or CDC, the system ends up with many small data files. This slows query performance because engines have to open and check metadata for a large number of files. Compaction is the process of rewriting these small files into larger ones, usually between 128 MB and 1 GB, to improve performance.
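To show what compaction planning involves, here is a simplified sketch that groups small files into rewrite batches close to a target size. Engines provide their own implementation (Spark, for example, exposes a `rewrite_data_files` procedure), so the `plan_compaction` function and its greedy strategy are assumptions for illustration only.

```python
def plan_compaction(file_sizes_mb, target_mb=512):
    """Greedily group small files so each group is at most target_mb in total."""
    groups, current, current_size = [], [], 0
    for size in sorted(file_sizes_mb, reverse=True):
        if size >= target_mb:
            continue  # already large enough, leave it alone
        if current and current_size + size > target_mb:
            groups.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        groups.append(current)
    # Rewriting a single file on its own gains nothing, so drop one-file groups.
    return [g for g in groups if len(g) > 1]


plan = plan_compaction([10, 20, 600, 300, 250, 5], target_mb=512)
print(plan)  # [[250, 20, 10, 5]]
```

In this example four files would be rewritten into one file of about 285 MB, while the 600 MB and 300 MB files are left as they are.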

Each change creates a new snapshot of the data. Over time, a table can accumulate thousands of snapshots and related metadata files. Regularly expiring old snapshots that are no longer required for time travel and cleaning unused metadata helps keep the system fast and reduces storage costs.
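A snapshot expiration policy usually combines an age cutoff with a minimum number of snapshots to keep. The sketch below models that rule on plain dictionaries. The parameter names `older_than_ms` and `retain_last` are chosen for illustration and the function is hypothetical, not an engine API.

```python
def expire_snapshots(snapshots, older_than_ms, retain_last=1):
    """Split snapshots into (kept, expired) by age, always protecting the newest ones."""
    ordered = sorted(snapshots, key=lambda s: s["ts"])
    # Always protect at least the most recent snapshot, whatever the cutoff says.
    protected = {s["id"] for s in ordered[-max(retain_last, 1):]}
    kept = [s for s in ordered if s["ts"] >= older_than_ms or s["id"] in protected]
    expired = [s for s in ordered if s not in kept]
    return kept, expired


snapshots = [
    {"id": "s1", "ts": 100},
    {"id": "s2", "ts": 200},
    {"id": "s3", "ts": 300},
    {"id": "s4", "ts": 400},
]

kept, expired = expire_snapshots(snapshots, older_than_ms=350, retain_last=2)
print([s["id"] for s in kept])     # ['s3', 's4']
print([s["id"] for s in expired])  # ['s1', 's2']
```

Once a snapshot is expired, time travel to it is no longer possible, so the cutoff should reflect how far back your audits and rollbacks actually need to reach.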

Sometimes, after a failed write, data files get stored but are not linked to any snapshot. These are called orphaned files. Periodically looking for and deleting these files prevents unnecessary storage use.
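Conceptually, orphan detection is a set difference between what sits in storage and what the table metadata references. The sketch below adds a minimum-age guard so files from writes still in progress are not flagged. The `find_orphans` function and its inputs are hypothetical.

```python
def find_orphans(listed, referenced, now_ms, min_age_ms):
    """Return files in storage that no snapshot references and that are old enough.

    listed:     mapping of file path -> last-modified time in milliseconds
    referenced: set of file paths reachable from the table's snapshots
    """
    return sorted(
        path
        for path, modified_ms in listed.items()
        if path not in referenced and now_ms - modified_ms >= min_age_ms
    )


listed = {"a.parquet": 0, "b.parquet": 0, "c.parquet": 9_000}
referenced = {"a.parquet"}

orphans = find_orphans(listed, referenced, now_ms=10_000, min_age_ms=5_000)
print(orphans)  # ['b.parquet']
```

Here `c.parquet` is unreferenced but too recent to judge, since it may belong to a commit that has not finished yet, so only `b.parquet` is reported.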

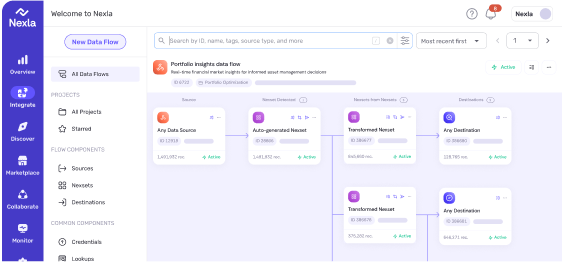

The maintenance tasks discussed above can be run as Spark or Trino jobs, but managing their scheduling and orchestration manually can be a lot of work. This is where a comprehensive data operations platform like Nexla helps. Nexla takes away the complexity of managing Iceberg’s data system, so you can focus on creating data products instead of wrestling with pipeline configuration.

Nexla supports popular Iceberg catalogs such as AWS Glue, Apache Polaris (a REST-based system), and Databricks Unity Catalog. This gives the flexibility to connect, transfer data, or operate across different systems as your needs evolve.

Once connected, Nexla manages the full process for your Iceberg datasets:

Apache Iceberg provides the base needed to build the modern data lakehouse. Its features include tracking data changes over time, flexible data evolution, reliable transactions, and support for multiple tools. This helps address the common issues that held back earlier data lakes.

To get the full benefits, you need a solid operational plan that starts with selecting the right catalog based on your data scale, governance rules, and transactional needs.

The Nexla Data as a Product platform automates key operational tasks, including data cleanup, snapshot management, schema updates, and catalog migrations. With built-in automation and Iceberg-native orchestration, your team can move faster without compromising on governance or reliability.

Ready to make your data lakehouse truly production-grade? See how Nexla helps teams streamline Apache Iceberg operations.

