Nexla and Vespa.ai Partner to Simplify Real-Time AI Search Across Hundreds of Enterprise Data Sources

Nexla and Vespa.ai partner to simplify real-time enterprise AI search, connecting 500+ data sources to power RAG, vector retrieval, and AI apps.

As enterprises increasingly adopt Generative AI (GenAI), the focus is shifting from proof-of-concept experiments to deploying mission-critical applications at scale. 2025 will be pivotal as organizations tackle the challenges of scaling and productionizing GenAI solutions to deliver real business impact.

While recent industry moves, such as Snowflake’s acquisition of Datavolo, highlight the critical need for robust data integration in this landscape, the broader story is about addressing the foundational challenges that GenAI adoption faces. Performance, scale, cost, risk, and control must all be optimized before enterprises can confidently operationalize GenAI applications.

At the core of scaling GenAI is the pressing challenge of data integration. Existing frameworks, plagued by latency at scale, high costs, and inadequate compliance, require a complete overhaul to meet the demands of diverse data sources such as warehouses, lakes, vector databases, SaaS platforms, and live streams. These datasets—spanning internal, third-party, and open-source—highlight the urgent need for seamless integration to drive advanced AI functionalities.

While mature general-purpose LLMs from providers such as OpenAI and Anthropic, along with open models such as Llama, are readily available, their enterprise value lies in effectively retrieving and using domain-specific data from disparate sources.

This requires two types of pipelines: ingestion and retrieval.

The ability to seamlessly integrate and orchestrate these pipelines is essential for enterprises to unlock the full potential of GenAI.

The key value proposition of GenAI for businesses lies in its ability to process disparate data, enabling models to generate actionable insights or predictions at the time of use.

Organizations must ensure that data—regardless of its format or location—is accessible to GenAI applications in a consistent and governed manner. Beyond ingestion and retrieval, this involves streamlining connections to various systems, managing costs, ensuring compliance, and optimizing performance across the entire data lifecycle. Without addressing these complexities, even advanced GenAI models will fall short of delivering reliable, enterprise-grade outcomes.
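As a rough illustration of what "consistent and governed" access could look like in practice, the sketch below wraps records from two differently shaped sources in one common envelope that carries lineage and validation results. Everything here is hypothetical: `GovernedRecord`, `normalize`, the required fields, and the source names are assumptions made for the example, not Nexla or Vespa.ai APIs.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class GovernedRecord:
    """Hypothetical common envelope; not a Nexla or Vespa.ai type."""
    source: str               # where the record came from (lineage)
    payload: dict[str, Any]   # fields with normalized (lower-case) keys
    ingested_at: str          # UTC ingestion time, ISO 8601
    issues: list[str] = field(default_factory=list)  # validation findings


# Assumed minimal schema that a retrieval step would need.
REQUIRED_FIELDS = ("id", "text")


def normalize(source: str, raw: dict[str, Any]) -> GovernedRecord:
    """Lower-case the keys, then flag required fields that are missing or empty."""
    payload = {str(key).strip().lower(): value for key, value in raw.items()}
    issues = [
        f"missing field: {name}"
        for name in REQUIRED_FIELDS
        if payload.get(name) in (None, "")
    ]
    return GovernedRecord(
        source=source,
        payload=payload,
        ingested_at=datetime.now(timezone.utc).isoformat(),
        issues=issues,
    )


# Two sources with different key casing and data quality.
warehouse_row = {"ID": 17, "Text": "Q3 revenue summary"}
saas_event = {"id": "evt-9", "TEXT": ""}

records = [
    normalize("warehouse.sales", warehouse_row),
    normalize("saas.crm", saas_event),
]

# Only records that passed validation are handed to the GenAI application.
usable = [record for record in records if not record.issues]
```

In this sketch the validation result travels with the record rather than raising an error, so a downstream step can decide whether to drop, repair, or quarantine incomplete data.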

Looking ahead, expect even more activity in 2025 as the focus shifts to building production-grade GenAI solutions, which in turn creates a need to solve complex integration and orchestration problems. This activity will not be limited to large corporations; expect innovation to keep emerging at the intersection of data integration and GenAI technologies.

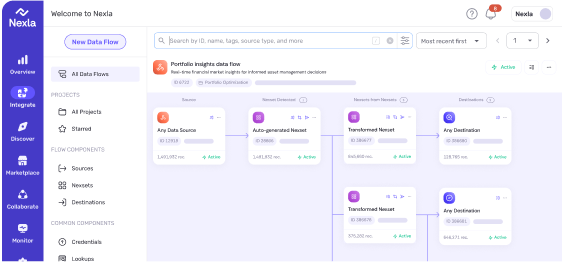

Nexla, along with other innovators, is pioneering a unified data integration platform designed for the GenAI era. Its data model, Nexsets, is designed to let large language models (LLMs) process data quickly and at scale, irrespective of source format or context. This model improves GenAI’s ability to perform accurate inferences while keeping costs and access under control, a combination that has been hard to achieve in data integration for GenAI applications.



The Nexla and Vespa.ai partnership aims to eliminate data integration complexity for AI search and RAG applications. The Vespa connector delivers zero-code pipelines from 500+ sources to production-grade vector search infrastructure.

Reusable data products unify databases, PDFs, and logs with metadata, validation, and lineage to enable join-aware RAG retrieval for reliable GenAI applications.