Nexla and Vespa.ai Partner to Simplify Real-Time AI Search Across Hundreds of Enterprise Data Sources

Nexla and Vespa.ai partner to simplify real-time enterprise AI search, connecting 500+ data sources to power RAG, vector retrieval, and AI apps.

Taking a Retrieval-Augmented Generation (RAG) solution from demo to full-scale production is a long and complex journey for most enterprises. Two challenges sit at the core. The first is building scalable ingestion pipelines that can prepare vast amounts of unstructured and structured data for GenAI. The second is building performant RAG pipelines that orchestrate multiple algorithms and LLMs to deliver quality answers with security and governance. Nexla and NVIDIA have joined forces to address these challenges, making it easy to create enterprise-grade, GPU-accelerated pipelines that bring substantial gains in productivity, performance, and cost while remaining future-proof.

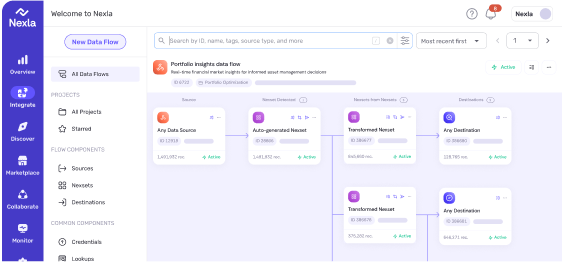

Enterprises often deal with millions of documents, images, and videos stored across platforms such as SharePoint, FTP, S3, and Dropbox. In addition, there is rich data in enterprise databases, applications, and services. Efficiently transforming all this data into actionable insights using RAG workflows can be challenging. Nexla’s no-code/low-code platform provides a robust solution for making any data GenAI-ready, while NVIDIA’s hardware-accelerated inference microservices (NIM) speed up every pipeline stage, including document parsing, Optical Character Recognition (OCR), embedding generation, re-ranking, and LLM execution. Together, Nexla and NVIDIA streamline the implementation of RAG workflows so that enterprises can scale data ingestion and optimize retrieval.

With Nexla’s platform, businesses can ingest data from diverse sources, choose embedding models with a single click, and leverage NVIDIA NIM for fast and accurate query optimization. This simplifies scaling RAG solutions and helps businesses build the trust and confidence they need to promote their GenAI apps to production.

Nexla’s CEO, Saket Saurabh, emphasizes the significance of this collaboration:

“Scaling generative AI from demos to production-grade solutions is a big challenge for enterprises. Our collaboration addresses this by integrating NVIDIA NIM into Nexla’s no-code/low-code platform for Document ETL, with the potential to scale multimodal ingestion across millions of documents in enterprise systems, including SharePoint, SFTP, S3, Network Drives, Dropbox, and more.”

Saurabh adds, “Nexla will support NIM in both cloud and private data center environments, helping customers accelerate their AI roadmap.”

Nexla’s integration of NVIDIA NIM microservices into its no-code/low-code platform empowers enterprises to accelerate their RAG workflows and overall GenAI roadmap. Combining Nexla’s data ingestion capabilities with NVIDIA’s hardware acceleration helps businesses move quickly from demo solutions to production-grade AI.

By streamlining multimodal data extraction and retrieval through advanced RAG workflows, Nexla and NVIDIA enable organizations to unlock insights from vast data stores, enhancing efficiency and building trust and confidence in decision-making. Whether the data resides in PDFs, images, or complex tables, Nexla and NVIDIA simplify the process, allowing enterprises to harness the full potential of GenAI.

Discover how Nexla and NVIDIA can help your enterprise scale GenAI applications from demo to production.

Schedule a demo and experience the power of hardware-accelerated GenAI tailored to your business needs.

The Nexla and Vespa.ai partnership eliminates data integration complexity for AI search and RAG applications. The Vespa connector delivers zero-code pipelines from 500+ sources to production-grade vector search infrastructure.

Reusable data products unify databases, PDFs, and logs with metadata, validation, and lineage to enable join-aware RAG retrieval for reliable GenAI applications.