How do you scale customer data integration? Standardize raw customer API and CSV feeds into reusable data products using a Common Data Model. Map each customer feed once to canonical entities (orders, customers, events), then deliver standardized outputs to multiple downstream systems without rebuilding pipelines.

AI adoption is widespread today, but there is often a disconnect between ambition and reality. Nearly two-thirds of organizations remain in the piloting stage rather than running AI at enterprise scale. The root cause is rarely the algorithms themselves; it is the friction in data integration and governance.

This friction prevents reliable, production-ready outcomes. The challenge becomes even more complex when dealing with external customer data. Trust and consistency are difficult to maintain when data comes from outside your organization, which contributes to the 70% of AI projects that fail before production.

Customer feeds often become a significant bottleneck in this process. Each customer delivers data in different formats, ranging from APIs to flat CSV files, which requires custom onboarding for each source. The solution lies in shifting away from fragile point-to-point connections to standardizing raw customer feeds into governed, reusable data products, so that downstream systems see a consistent internal structure.

This article explores why direct integration fails and how data platforms like Nexla enable scalable reuse through Nexsets and Common Data Models.

Why Direct Customer API/CSV Integrations Don’t Scale

Raw feeds stay raw because most data “integration” delivers rows, not usable meaning. The data lands, but the operational context required to reuse it does not.

Engineering teams often struggle because customer inputs are highly heterogeneous. One client might send data via REST APIs or webhooks, while another sends Excel exports via SFTP or simple file drops. This lack of uniformity forces engineers to build bespoke connectors for every new customer.

This approach is inherently fragile because of schema drift. External customers may change their data structures without warning. A new field might appear, or a column might be renamed. Even a simple change in data type can break pipelines and ruin dashboards instantly. The result is a system that is always on the verge of breaking.
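To make the drift scenarios above concrete, here is a minimal sketch of a check that compares an incoming record against the schema a pipeline expects. The field names, the `EXPECTED` schema, and the helper functions are hypothetical illustrations, not part of any specific platform.

```python
from typing import Any


def infer_schema(record: dict[str, Any]) -> dict[str, str]:
    """Map each field name to the name of its Python type."""
    return {field: type(value).__name__ for field, value in record.items()}


def detect_drift(expected: dict[str, str], record: dict[str, Any]) -> dict[str, list[str]]:
    """Report fields that were added, removed, or changed type."""
    observed = infer_schema(record)
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(f for f in shared if expected[f] != observed[f]),
    }


# Hypothetical schema the pipeline was built against.
EXPECTED = {"order_id": "str", "amount": "float", "created": "str"}

# The customer renamed "created" and started sending amounts as strings.
incoming = {"order_id": "A-17", "amount": "19.99", "created_at": "2024-05-01"}

drift = detect_drift(EXPECTED, incoming)
print(drift)
```

A rename shows up as one removed field plus one added field, which is exactly the kind of change that silently breaks a hand-built connector.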

Direct integrations also lead to massive duplication. Core entities, such as orders and customers, are remapped individually for every single customer feed. Engineers repeatedly write the same transformation logic.

The business impact of this approach is severe. Onboarding new customers slows to a crawl, and maintenance costs increase significantly. Engineering teams end up spending their time on reactive firefighting rather than building valuable features.

What Are Standardized, Reusable Data Products?

Standardized, reusable data products are one answer to this chaos. They are named, documented representations of business entities, designed explicitly for reuse across the organization. They treat data as an asset rather than a byproduct of a pipeline.

Standardization is the core of this concept. It involves establishing canonical fields and consistent naming conventions. It ensures that units, currencies, and time zones are consistent across sources. It also enforces consistent IDs and validations, ensuring the data is trustworthy by the time it reaches a downstream user.
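As a rough illustration of what consistent time zones and currencies can mean in practice, the sketch below normalizes timestamps to UTC and currency codes to upper case. The function names are hypothetical, and the rule of rejecting timestamps without an offset is an assumption rather than a universal requirement.

```python
from datetime import datetime, timezone
from decimal import Decimal


def to_utc_iso(raw: str) -> str:
    """Parse an ISO 8601 timestamp that carries an offset and return it in UTC."""
    parsed = datetime.fromisoformat(raw)
    if parsed.tzinfo is None:
        # Assumption: a timestamp without an offset is ambiguous, so reject it.
        raise ValueError(f"timestamp has no UTC offset: {raw!r}")
    return parsed.astimezone(timezone.utc).isoformat()


def normalize_amount(amount: str, currency: str) -> tuple[Decimal, str]:
    """Return the amount as an exact Decimal and the currency code in upper case."""
    return Decimal(amount.strip()), currency.strip().upper()


print(to_utc_iso("2024-05-01T09:30:00+02:00"))  # 2024-05-01T07:30:00+00:00
print(normalize_amount(" 19.90 ", "usd"))       # (Decimal('19.90'), 'USD')
```

Using `Decimal` instead of `float` keeps monetary values exact, which matters once several sources are aggregated.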

Teams often achieve this through a Common Data Model (CDM). A CDM serves as a standard model for entities such as orders and events, and decouples sources from consumers. The source data may vary by customer, but it is mapped to a single standard structure during ingestion.
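The mapping idea can be sketched in a few lines. In this hypothetical example, two customers ("acme" and "globex", both invented) send orders with different field names, and a per-customer field map translates each into one canonical `Order` entity. This illustrates the pattern only; it is not how any particular product implements it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Canonical order entity in the (hypothetical) Common Data Model."""
    order_id: str
    customer_id: str
    total: float


# Per-customer mapping: canonical field -> source field. All names are invented.
FIELD_MAPS = {
    "acme": {"order_id": "id", "customer_id": "cust", "total": "amount"},
    "globex": {"order_id": "OrderNo", "customer_id": "ClientRef", "total": "GrandTotal"},
}


def to_order(customer: str, row: dict) -> Order:
    """Translate one source row into the canonical Order using that customer's map."""
    mapping = FIELD_MAPS[customer]
    return Order(
        order_id=str(row[mapping["order_id"]]),
        customer_id=str(row[mapping["customer_id"]]),
        total=float(row[mapping["total"]]),
    )


acme_order = to_order("acme", {"id": 17, "cust": "C-9", "amount": "42.50"})
globex_order = to_order("globex", {"OrderNo": "G-3", "ClientRef": "C-9", "GrandTotal": 42.5})
print(acme_order)
print(globex_order)
```

Onboarding a new customer then means adding one entry to `FIELD_MAPS`; nothing downstream of `Order` has to change.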

The outcome is powerful because one product powers multiple consumers. You can feed business intelligence tools and AI models from the same standardized asset without rebuilding integrations for each destination.

What a Data Platform Must Do

A modern data platform must handle the entire lifecycle of these data products to be effective. It needs to reliably ingest data by connecting to customer APIs and files. This ingestion must support both batch processing and near-real-time streams to accommodate different customer capabilities.
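As a simplified view of the ingestion step, the sketch below reads a CSV file drop and an API response body into the same row-of-dicts shape. It assumes the API payload has already been fetched as a JSON string; the feed contents and function names are made up for illustration.

```python
import csv
import io
import json
from typing import Iterator


def rows_from_csv(text: str) -> Iterator[dict]:
    """Yield one dict per CSV line, keyed by the header row."""
    yield from csv.DictReader(io.StringIO(text))


def rows_from_json(payload: str) -> Iterator[dict]:
    """Yield one dict per element of a JSON array."""
    yield from json.loads(payload)


# Hypothetical feeds: a CSV file drop and an already-fetched API response body.
csv_feed = "id,amount\n1,10.5\n2,7\n"
api_feed = '[{"id": 3, "amount": 4.25}]'

rows = list(rows_from_csv(csv_feed)) + list(rows_from_json(api_feed))
for row in rows:
    print(row)
```

Note that the CSV rows arrive with every value as a string while the JSON row keeps numeric types, which is one reason a separate standardization step is still needed after ingestion.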

The platform must then standardize these inputs. It needs the intelligence to automatically map varying source schemas into a canonical model of key entities, with minimal human intervention. This transformation step is what turns raw chaos into usable assets.

Governance is equally critical. The platform must apply validation and access control, and it must manage change over time. It should track lineage so you know exactly where the data came from, and it should provide auditability to support compliance.
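One lightweight way to picture validation and lineage traveling together is shown below. The rules, the `order-v1` rule-set label, and the source identifiers are hypothetical; a real platform would carry far richer metadata.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationResult:
    record: dict
    errors: list[str] = field(default_factory=list)
    lineage: dict = field(default_factory=dict)


def validate_order(record: dict, source: str) -> ValidationResult:
    """Check a canonical order record and attach where it came from."""
    errors = []
    if not record.get("order_id"):
        errors.append("order_id is required")
    total = record.get("total")
    if not isinstance(total, (int, float)) or total < 0:
        errors.append("total must be a non-negative number")
    # Lineage here is just the source name and the rule set that was applied.
    lineage = {"source": source, "rule_set": "order-v1"}
    return ValidationResult(record, errors, lineage)


good = validate_order({"order_id": "A1", "total": 5.0}, source="acme/sftp")
bad = validate_order({"total": -1}, source="globex/api")
print(good.errors)  # []
print(bad.errors)   # ['order_id is required', 'total must be a non-negative number']
```

Keeping the lineage on the result means a rejected record can be traced back to the feed that produced it.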

Finally, the platform must publish and deliver these products. It should provide a marketplace where data products are discoverable and reusable, and it should serve these products to many destinations, including data warehouses and operational apps, without requiring new pipelines for every request.
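The "publish once, deliver to many" idea can be sketched as a simple fan-out. In this hypothetical example the destinations are in-memory lists standing in for a warehouse and a feature store; real sinks would be connectors to actual systems.

```python
from typing import Callable

# A sink is any callable that accepts a batch of standardized records.
Sink = Callable[[list[dict]], None]


def publish(product: list[dict], sinks: dict[str, Sink]) -> dict[str, int]:
    """Deliver the same standardized product to every sink and report counts."""
    delivered = {}
    for name, sink in sinks.items():
        sink(product)
        delivered[name] = len(product)
    return delivered


# Stand-in destinations (assumptions for illustration only).
warehouse: list[dict] = []
feature_store: list[dict] = []

orders = [{"order_id": "A1", "total": 5.0}]
report = publish(orders, {"warehouse": warehouse.extend, "feature_store": feature_store.extend})
print(report)  # {'warehouse': 1, 'feature_store': 1}
```

Adding a destination means registering another sink, not building another pipeline from the raw feed.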

How Nexla Implements This

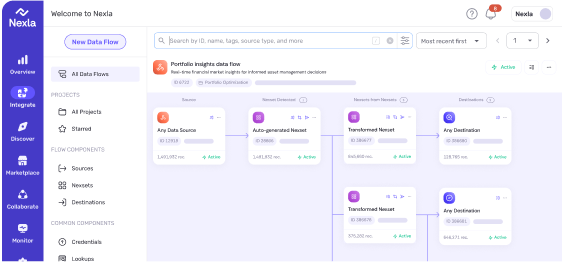

Nexla solves the challenge of scaling customer feeds by using Nexsets, logical data products with schema validation and access control built directly into their metadata. They act as the standardized container for your data as it moves through the platform.

The system allows you to define the internal contract once via a Common Data Model. You simply map each new customer feed into this CDM as it is onboarded. Nexla handles the complexity of ingestion and ensures the output meets your internal standards.

This architecture powers Nexla Flows for delivery. Once the data is in a Nexset, it can be sent anywhere. You can set up reusable flows and governed delivery to warehouses or AI pipelines with a few clicks. The data is already standardized, so the destination systems always receive clean and predictable inputs.

Reuse is the ultimate benefit of this approach. You publish standardized products once, and multiple teams consume the same governed version. This eliminates the bottleneck of custom engineering work for every new data request.

Next Step

Custom integrations rarely survive the pressure of enterprise growth. They require excessive maintenance and break too often to be sustainable. A more durable way to scale is to adopt standardized data products. This strategy uses a Common Data Model to absorb upstream chaos and protect your downstream systems.

Nexla operationalizes this approach by combining Nexsets with automated standardization, transforming the challenging customer onboarding process into a streamlined, repeatable workflow. You can finally stop fixing broken pipelines and start building assets that last.

Ready to Stop Building Custom Integrations for Every Customer?

Request a demo to see how Nexla standardizes customer API and CSV feeds into governed data products with Common Data Models, turning weeks of custom onboarding into repeatable, scalable workflows.

FAQs

Why don’t direct customer API integrations scale?

Direct integrations require custom connectors for each customer’s unique format (APIs, CSVs, webhooks). Schema drift from external changes breaks pipelines without warning. Core entities like orders get remapped repeatedly, creating maintenance overhead that consumes engineering time on reactive fixes.

What is a Common Data Model for customer feeds?

A Common Data Model (CDM) defines standard schemas for business entities like orders, customers, and events. Customer feeds with varying formats map to this single canonical structure during ingestion, decoupling sources from consumers. Downstream systems receive consistent data regardless of original format.

What is metadata-intelligent integration?

Metadata-intelligent integration continuously discovers, infers, and maintains definitions, quality expectations, and traceability as feeds change. Schema, semantics, validation logic, and lineage travel with the data automatically, preventing the “data moves but meaning doesn’t” problem.

How do reusable data products reduce onboarding time?

Reusable data products eliminate custom engineering per customer. Define the standard once via CDM, then map new customer feeds to existing entities during onboarding. The same standardized product serves multiple consumers (BI, AI, warehouses) without rebuilding pipelines for each destination.

What is a Nexset and how does it standardize customer data?

A Nexset is Nexla’s logical data product with built-in schema validation, access controls, and governance metadata. Customer feeds map to Nexsets through Common Data Models, creating standardized containers. Once in Nexset format, data can be delivered to any destination with consistent structure and quality guarantees.

How does data product standardization prevent AI project failures?

Standardized data products provide the consistent, validated context that AI models require. Without standardization, schema drift, inconsistent formats, and ungoverned customer data create unreliable training inputs, contributing to the 70% AI project failure rate. Data products help ensure AI pipelines receive trustworthy, predictable data.

How do you handle schema changes from external customers?

Data platforms with active metadata monitoring detect schema changes automatically, comparing incoming structures against the standard model. Non-breaking changes extend the model; breaking changes trigger versioning and notifications before impacting consumers.
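The distinction between breaking and non-breaking changes can be sketched as follows. The classification rules (removed or retyped fields are breaking, added fields are not) and the major/minor version scheme are assumptions chosen for illustration; actual platforms may draw the line differently.

```python
def classify_change(current: dict[str, str], incoming: dict[str, str]) -> str:
    """Compare two field->type schemas and label the difference."""
    removed = current.keys() - incoming.keys()
    retyped = {f for f in current.keys() & incoming.keys() if current[f] != incoming[f]}
    if removed or retyped:
        return "breaking"
    if incoming.keys() - current.keys():
        return "non-breaking"
    return "unchanged"


def next_version(version: tuple[int, int], change: str) -> tuple[int, int]:
    """Bump major on breaking changes, minor on additive ones."""
    major, minor = version
    if change == "breaking":
        return (major + 1, 0)
    if change == "non-breaking":
        return (major, minor + 1)
    return version


model = {"order_id": "str", "total": "float"}
additive = {"order_id": "str", "total": "float", "coupon": "str"}
retyped = {"order_id": "str", "total": "str"}

print(classify_change(model, additive), next_version((1, 2), "non-breaking"))  # non-breaking (1, 3)
print(classify_change(model, retyped), next_version((1, 2), "breaking"))       # breaking (2, 0)
```

A breaking result is the point at which a platform would notify consumers before the new version reaches them.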