From Hallucinations to Trust: Context Engineering for Enterprise AI

Context engineering is the systematic practice of designing and controlling the information AI models consume at runtime, ensuring outputs are accurate, auditable, and compliant.

Poor data quality costs organizations an average of 15-25% of their operating budget, with some companies reporting losses of up to $15 million annually. As organizations increasingly turn to artificial intelligence (AI), particularly large language models (LLMs), to scale their operations, the risks of poor data quality are magnified.

LLMs introduce distinct data quality, privacy, and compliance risks, including hallucinations, leakage of sensitive data, and regulatory violations. These risks make robust data governance a prerequisite for responsible AI deployment.

Unlike traditional machine learning (ML) models, LLM pipelines cannot rely on basic validation alone. They require additional checks for data freshness, schema consistency, and governance, which makes systematic validation critical to preventing model failures, accuracy issues, and operational problems in production environments.

This comprehensive checklist gives ML teams 10 essential validation steps for ensuring that their data supports reliable LLM deployment.

As industries such as finance, healthcare, and logistics adopt AI for automation and better insights, data quality has become a key driver of success. Building successful LLM systems requires clean, reliable data and strong coordination among stakeholders.

The validation checklist below will help your ML teams fix specific data issues that can degrade the performance of your LLM.

Keeping your data up to date is crucial for reliable LLM outputs. Stale or fragmented data can make even the best AI models produce irrelevant results or behave unpredictably. Update all upstream data sources on a regular schedule, and continuously monitor data patterns to detect data drift, which can gradually reduce model accuracy.
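A freshness check can be as simple as comparing each source's latest update time with a per-source budget. The sketch below assumes you already collect a "last updated" timestamp per source; the source names, the budgets in `MAX_AGE`, and `find_stale_sources` are all hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness budgets per upstream source; set them from each source's update cadence.
MAX_AGE = {
    "crm_contacts": timedelta(hours=24),
    "product_catalog": timedelta(days=7),
}


def find_stale_sources(last_updated, now=None):
    """Return sources whose most recent update is older than their freshness budget.

    `last_updated` maps source name -> timezone-aware datetime of the newest record.
    Sources with no configured budget are reported too, so they get reviewed.
    """
    now = now or datetime.now(timezone.utc)
    stale = []
    for source, updated_at in last_updated.items():
        budget = MAX_AGE.get(source)
        if budget is None or now - updated_at > budget:
            stale.append(source)
    return sorted(stale)


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
print(find_stale_sources(
    {"crm_contacts": now - timedelta(hours=30), "product_catalog": now - timedelta(days=2)},
    now=now,
))  # ['crm_contacts']
```

In a real pipeline the returned list would typically feed an alerting or scheduling system rather than a `print`.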

Schema inconsistencies can break the entire LLM pipeline and cause processing errors. Maintaining clear, consistent data structures across all data sources is crucial for seamless data ingestion, feature engineering, and model inference. Consistent schemas also help prevent costly downtime.
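One way to keep ingestion and inference aligned is to validate every record against a single shared schema at both points. This dependency-free sketch uses a hypothetical `EXPECTED_SCHEMA` and `schema_errors`; a production pipeline would more likely use a dedicated schema-validation library.

```python
# Hypothetical contract shared by ingestion and inference: field name -> required type.
EXPECTED_SCHEMA = {"id": str, "title": str, "body": str, "updated_at": str}


def schema_errors(record, schema=EXPECTED_SCHEMA):
    """Return a list of schema violations for one record; an empty list means it conforms."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Extra fields often signal an upstream change that was never propagated downstream.
    for field in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


print(schema_errors({"id": 42, "title": "Q3 report", "body": "...", "extra": True}))
# ['id: expected str, got int', 'missing field: updated_at', 'unexpected field: extra']
```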

Reliable predictions depend on high-quality, complete data. Inconsistent or missing entries reduce model accuracy and erode trust in the model's outputs. Run completeness and consistency checks regularly, and address the gaps they reveal, updating the model accordingly.
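A basic completeness check measures, per field, how often values are absent or blank and flags fields above a tolerance. The function names and the 5% threshold below are hypothetical choices for illustration.

```python
def missing_rates(records, fields):
    """Fraction of records in which each field is absent, None, or a blank string."""
    missing = {f: 0 for f in fields}
    for record in records:
        for f in fields:
            value = record.get(f)
            if value is None or (isinstance(value, str) and not value.strip()):
                missing[f] += 1
    n = len(records) or 1  # avoid dividing by zero on an empty batch
    return {f: missing[f] / n for f in fields}


def incomplete_fields(records, fields, max_missing=0.05):
    """Fields whose missing rate exceeds a (hypothetical) 5% tolerance."""
    return sorted(f for f, rate in missing_rates(records, fields).items() if rate > max_missing)


batch = [{"id": "1", "body": "text"}, {"id": "2", "body": ""}, {"id": "3"}, {"id": "4", "body": "ok"}]
print(missing_rates(batch, ["id", "body"]))      # {'id': 0.0, 'body': 0.5}
print(incomplete_fields(batch, ["id", "body"]))  # ['body']
```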

Traceability is essential for compliance and troubleshooting. Knowing exactly where your data comes from and how it has been transformed makes debugging and error investigation much simpler. Data lineage becomes critical when LLM outputs need explanations or audit trails.
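At its core, lineage is a graph of "this dataset was produced from those inputs by that step." The sketch below records such edges and walks them backwards from an output to its raw sources. `LineageEdge`, `trace_upstream`, and the dataset names are hypothetical; lineage tools typically capture this metadata automatically.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LineageEdge:
    """Records that dataset `output` was produced from `inputs` by `transformation`."""
    output: str
    inputs: tuple
    transformation: str


def trace_upstream(dataset, edges):
    """Walk lineage edges backwards from `dataset`.

    Returns (steps, raw_sources): the transformations that produced it, nearest first,
    and the datasets that no recorded edge produces (the original sources).
    """
    produced_by = {edge.output: edge for edge in edges}
    steps, raw_sources = [], set()
    queue, seen = deque([dataset]), set()
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        edge = produced_by.get(current)
        if edge is None:
            raw_sources.add(current)  # nothing upstream recorded, so treat it as a source
            continue
        steps.append((current, edge.transformation))
        queue.extend(edge.inputs)
    return steps, sorted(raw_sources)


edges = [
    LineageEdge("clean_tickets", ("raw_tickets",), "deduplicate and strip HTML"),
    LineageEdge("ticket_chunks", ("clean_tickets",), "split into chunks"),
    LineageEdge("rag_index", ("ticket_chunks", "kb_articles"), "embed and index"),
]
steps, sources = trace_upstream("rag_index", edges)
print(sources)  # ['kb_articles', 'raw_tickets']
```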

Well-managed labels improve training results and yield more reliable supervised ML models, while poor labeling produces biased or inaccurate model responses. Track which data segments receive adequate annotation coverage, and look for potential biases in labeling patterns.
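Annotation coverage can be tracked per segment (for example by language or customer tier) along with the label distribution inside each segment, which also surfaces skewed labeling. The segment and label keys, `label_coverage`, and the 0.8 cutoff in the usage line are hypothetical.

```python
from collections import Counter, defaultdict


def label_coverage(examples, segment_key="segment", label_key="label"):
    """Per segment: share of examples that carry a label, and how those labels are distributed."""
    totals, labeled = Counter(), Counter()
    distribution = defaultdict(Counter)
    for example in examples:
        segment = example.get(segment_key, "unknown")
        totals[segment] += 1
        label = example.get(label_key)
        if label is not None:
            labeled[segment] += 1
            distribution[segment][label] += 1
    return {
        segment: {"coverage": labeled[segment] / totals[segment],
                  "labels": dict(distribution[segment])}
        for segment in totals
    }


examples = [
    {"segment": "en", "label": "refund"},
    {"segment": "en", "label": "refund"},
    {"segment": "en", "label": "billing"},
    {"segment": "de", "label": None},
    {"segment": "de", "label": "refund"},
]
report = label_coverage(examples)
print([s for s, r in report.items() if r["coverage"] < 0.8])  # ['de']
```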

The statistical structure of your features can significantly affect how the LLM learns and responds. Watch for problems with cardinality, class balance, and data splits: high-cardinality features and skewed distributions can cause training problems.
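For categorical features, two quick checks cover much of this: the ratio of distinct values to rows (cardinality) with a list of rare categories (balance), and the largest difference in category shares between splits. The functions and thresholds below are a hypothetical sketch, not a prescribed method.

```python
from collections import Counter


def profile_categorical(values, max_unique_ratio=0.5, min_share=0.05):
    """Flag high cardinality and rare categories in one categorical feature.

    The thresholds are hypothetical defaults; tune them per feature.
    """
    counts = Counter(values)
    n = len(values)
    unique_ratio = len(counts) / n if n else 0.0
    return {
        "unique_ratio": unique_ratio,
        "high_cardinality": unique_ratio > max_unique_ratio,
        "rare_values": sorted(v for v, c in counts.items() if c / n < min_share),
    }


def max_share_gap(train_values, test_values):
    """Largest difference in any category's share between two non-empty splits."""
    train, test = Counter(train_values), Counter(test_values)
    n_train, n_test = len(train_values), len(test_values)
    return max(abs(train[k] / n_train - test[k] / n_test) for k in train.keys() | test.keys())


print(profile_categorical(["a"] * 50 + ["b"] * 48 + ["c"] * 2)["rare_values"])  # ['c']
print(max_share_gap(["a", "a", "b", "b"], ["a", "a", "a", "b"]))  # 0.25
```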

Uneven or biased training data can lead LLMs to exhibit systematic biases, perform poorly for some user groups, or fail on tasks that are underrepresented in training. Give biases and edge cases special attention, and evaluate performance separately for the user groups and scenarios most at risk.
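A simple way to surface such gaps is to break evaluation accuracy down by group and flag groups that trail the best one. The grouping (here, language), `lagging_groups`, and the 10-point tolerance are hypothetical; comparing against the overall average is an equally reasonable alternative.

```python
from collections import defaultdict


def accuracy_by_group(results):
    """`results` is an iterable of (group, is_correct) pairs from an evaluation run."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, is_correct in results:
        total[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / total[group] for group in total}


def lagging_groups(results, max_gap=0.10):
    """Groups whose accuracy trails the best-performing group by more than `max_gap`."""
    accuracy = accuracy_by_group(results)
    best = max(accuracy.values())
    return sorted(group for group, acc in accuracy.items() if best - acc > max_gap)


results = [("en", True)] * 9 + [("en", False)] + [("es", True)] * 7 + [("es", False)] * 3
print(accuracy_by_group(results))  # {'en': 0.9, 'es': 0.7}
print(lagging_groups(results))     # ['es']
```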

Comprehensive documentation makes it easier for teams to use, understand, and maintain data assets effectively. It also protects against misuse and speeds up onboarding for new team members.

Data governance and regulatory compliance are non-negotiable when handling sensitive or regulated datasets. LLMs often process personal information, which makes privacy and security controls essential. Strong controls help keep LLM applications compliant with regulatory standards.
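One common privacy control is redacting personal data before text reaches a prompt, an index, or a log. The regex patterns below are deliberately simple, hypothetical examples that miss many real-world formats; they only illustrate the shape of a redaction step.

```python
import re

# Deliberately simple, hypothetical patterns for illustration. They miss many formats;
# production systems usually rely on a dedicated PII-detection tool.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"(?:\+?\d{1,3}[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}\b"),
}


def redact(text):
    """Replace PII matches with typed placeholders; return the text and counts per type."""
    found = {}
    # Dicts keep insertion order, so SSNs are replaced before the broader phone pattern runs.
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found[label] = count
    return text, found


print(redact("Contact jane.doe@example.com or 555-123-4567; SSN 123-45-6789."))
# ('Contact [EMAIL] or [PHONE]; SSN [US_SSN].', {'EMAIL': 1, 'US_SSN': 1, 'PHONE': 1})
```

Returning the per-type counts alongside the redacted text gives governance teams an auditable record of what was removed.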

Reproducibility and data versioning are essential for debugging, retraining, and scaling LLM projects. Keep clear records of every data version used and processed to maintain experimental integrity and operational reliability.
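A lightweight form of data versioning is a content fingerprint: hash a canonical serialization of the dataset and store that hash with every training or evaluation run. `dataset_version` and `run_manifest` are hypothetical helpers; dedicated data-versioning tools add storage and retrieval on top of the same idea.

```python
import hashlib
import json


def dataset_version(rows):
    """Deterministic fingerprint of a dataset snapshot (a list of JSON-serializable rows).

    Each row is serialized as canonical JSON with sorted keys, and the rows are hashed
    in sorted order, so identical content yields the same version regardless of row
    or key order. Any added, removed, or edited row changes the version.
    """
    digest = hashlib.sha256()
    for line in sorted(json.dumps(row, sort_keys=True, separators=(",", ":")) for row in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")  # separator; json.dumps escapes newlines inside values
    return digest.hexdigest()[:12]  # shortened for readability; store the full hash if collisions matter


def run_manifest(run_id, datasets):
    """Record which dataset versions a training or evaluation run consumed."""
    return {"run_id": run_id,
            "datasets": {name: dataset_version(rows) for name, rows in datasets.items()}}


v1 = dataset_version([{"id": 1, "text": "hi"}, {"id": 2, "text": "yo"}])
v2 = dataset_version([{"text": "yo", "id": 2}, {"text": "hi", "id": 1}])
print(v1 == v2)  # True
```

Sorting rows makes the fingerprint order-insensitive; if row order is meaningful for your data, drop the `sorted` call.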

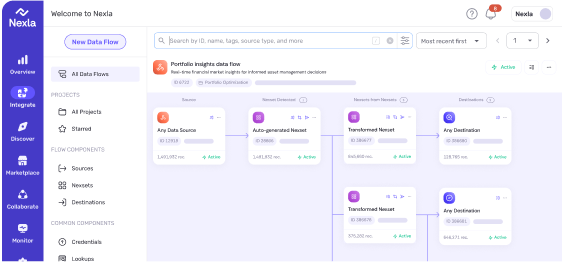

Nexla’s data integration platform enables ML teams to leverage AI-powered capabilities that simplify every validation requirement on this checklist, eliminating the need for businesses to run validation checks manually.

Reliable LLM deployment starts with systematic, rigorous data validation across all ten critical areas outlined in this checklist. Manual validation approaches cannot keep pace with the volume and complexity of modern AI data pipelines.

Teams that invest in automation, strong governance, and continuous monitoring achieve faster iteration cycles, fewer downstream issues, and better compliance. Upfront validation not only reduces risk but also strengthens the long-term scalability and performance of AI systems.

Explore how Nexla’s AI data integration platform can help your ML teams build trusted, AI-ready pipelines for LLM development.


Schedule a custom demo today or read our AI Readiness guide to explore the key factors contributing to AI readiness and the best practices for accelerating the AI journey.

AI-ready data is validated, governed data that meets 10 critical requirements, including freshness, schema consistency, lineage, and privacy compliance. It matters because poor data quality costs organizations 15-25% of operating budgets and can cause LLM hallucinations, data leakage, and compliance violations in production.

The most critical steps are: (1) data freshness monitoring to prevent stale inputs, (2) schema consistency across training and inference, (3) provenance tracking for audit trails, (4) privacy governance for regulatory compliance, and (5) data versioning for reproducibility and debugging.

Stale data causes LLMs to produce irrelevant or outdated responses. Keeping data fresh requires automated timestamp validation, staleness-detection alerts, and monitoring for data drift patterns that gradually reduce model accuracy. This is especially critical for real-time inference applications.
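Drift monitoring can be sketched with the Population Stability Index (PSI), a widely used drift metric that the article itself does not prescribe. The version below handles one categorical feature; the `eps` floor is an assumption to avoid `log(0)`. A commonly quoted rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import math
from collections import Counter


def psi(baseline, current, eps=1e-6):
    """Population Stability Index between two non-empty samples of a categorical feature.

    PSI = sum over categories of (q - p) * ln(q / p), where p and q are the category's
    shares in the baseline and current samples. `eps` floors each share so a category
    missing from one sample does not produce ln(0) or a division by zero.
    """
    base, cur = Counter(baseline), Counter(current)
    total = 0.0
    for category in base.keys() | cur.keys():
        p = max(base[category] / len(baseline), eps)
        q = max(cur[category] / len(current), eps)
        total += (q - p) * math.log(q / p)
    return total


baseline = ["a"] * 50 + ["b"] * 50
print(round(psi(baseline, baseline), 3))                  # 0.0
print(round(psi(baseline, ["a"] * 90 + ["b"] * 10), 3))  # 0.879
```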

Data lineage provides complete traceability from source to model output, enabling teams to debug issues in minutes, meet audit requirements, and explain AI decisions. Without lineage, LLM systems become unexplainable black boxes unsuitable for regulated industries or high-stakes applications.

LLMs require additional validation beyond traditional ML: monitoring for data freshness and drift, schema consistency across training and inference, comprehensive lineage for explainability, privacy controls for sensitive text data, and validation of unstructured content like documents and annotations.

Building without lineage is technically possible but operationally risky. You lose debugging capability, audit trails, and explainability, which makes the system unsuitable for regulated industries and impossible to troubleshoot when models fail or produce incorrect outputs.
