From Hallucinations to Trust: Context Engineering for Enterprise AI

Context engineering is the systematic practice of designing and controlling the information AI models consume at runtime, ensuring outputs are accurate, auditable, and compliant.

GenAI adoption is rising, but many organizations still struggle to move beyond pilots and ensure accurate responses.

Agentic RAG mitigates this issue by grounding an LLM in enterprise knowledge to plan and execute workflows. Yet, an agent is only as good as what it retrieves at decision time. If data is outdated, fragmented, or inconsistent, the system can act confidently while choosing the wrong context and workflow.

The problem worsens because enterprise knowledge is spread across APIs, databases, SaaS tools, documents, and files, which makes consistent context assembly harder. The most repeatable fix is to standardize enterprise knowledge into governed, reusable, AI-ready data products, so that agentic RAG systems can pull consistent, trustworthy context.

In this article, we will learn why agentic RAG systems don’t scale and how AI-ready data products improve retrieval quality, governance, and reuse across LLM apps.

Most agentic RAG implementations start with retrieval, then add planning and tool use on top. They still connect sources, extract text, chunk it, embed it, and load it into a vector database. It’s fast for a demo, but agentic systems raise the stakes because retrieval drives planning and tool use, not just answers.

Agentic RAG breaks first during planning. An agent must decide what to retrieve, which tool to call, and what step comes next. If the retrieved context is incomplete, stale, or missing key identifiers, the agent can route the task to the wrong tool or workflow. In agentic RAG, that is not just a bad answer. It is an executed decision.

The second failure mode is compounding context. Agentic RAG is iterative, so early retrieval influences later steps. When context is wrong or ambiguous, the agent carries it forward and reinforces it with follow-up retrieval and tool outputs. If updates are not reflected quickly or retrieval boundaries are misaligned, small mistakes turn into persistent, multi-step failures.

Autonomy introduces operational risk. Agents retry retrievals, repeatedly call tools, and branch into sub-tasks when confidence is low. Without clear freshness signals and stop conditions, loops increase latency and create unpredictable token and tool costs. The system may still “work,” but the operating model becomes unstable.
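The stop conditions described above can be sketched as a retry budget combined with a freshness check. This is a minimal illustration, not a reference implementation: `RetrievedContext`, `LoopBudget`, and `retrieve_with_budget` are hypothetical names, and the 24-hour freshness threshold and three-attempt limit are assumed values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional, Tuple


@dataclass
class RetrievedContext:
    text: str
    refreshed_at: datetime  # freshness timestamp carried with the content


@dataclass
class LoopBudget:
    max_attempts: int = 3                     # hard stop on retries (assumed value)
    max_age: timedelta = timedelta(hours=24)  # freshness threshold (assumed value)


def retrieve_with_budget(
    retrieve: Callable[[int], Optional[RetrievedContext]],
    budget: LoopBudget,
    now: datetime,
) -> Tuple[Optional[RetrievedContext], int]:
    """Call `retrieve` until it returns fresh context or the budget runs out.

    Returns the accepted context (or None) and the number of attempts used.
    """
    for attempt in range(1, budget.max_attempts + 1):
        context = retrieve(attempt)
        if context is not None and now - context.refreshed_at <= budget.max_age:
            return context, attempt
    # Budget exhausted: stop and escalate instead of looping again.
    return None, budget.max_attempts


now = datetime(2025, 1, 10, tzinfo=timezone.utc)
stale = RetrievedContext("old policy", now - timedelta(days=3))
fresh = RetrievedContext("current policy", now - timedelta(hours=2))

# Hypothetical retriever: the first attempt returns stale content, the second is fresh.
results = {1: stale, 2: fresh}
context, attempts = retrieve_with_budget(results.get, LoopBudget(), now)
```

Because the loop is bounded, the worst-case number of retrieval calls per step is known in advance, which keeps latency and token cost predictable.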

There is also a governance mismatch. Access rules in the source system don’t automatically carry into the index or the tool layer. Without consistent controls, agents can over-retrieve, under-retrieve, or take actions they should not be allowed to take. The result is compliance risk, audit gaps, and unpredictable cost.
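One way to picture controls that carry from the source into both the index and the tool layer is the sketch below. It assumes each chunk stores the roles copied from its source system and that tools are guarded by an allow-list. `Chunk`, `filter_by_access`, `TOOL_POLICY`, and `can_call_tool` are illustrative names, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_roles: frozenset  # assumed to be copied from the source system's access rules


def filter_by_access(chunks, user_roles):
    """Keep only the chunks that at least one of the caller's roles may read."""
    roles = set(user_roles)
    return [chunk for chunk in chunks if roles & chunk.allowed_roles]


# Hypothetical allow-list: which roles may trigger which tools.
TOOL_POLICY = {
    "lookup_order": {"support", "finance"},
    "issue_refund": {"finance"},
}


def can_call_tool(tool_name, user_roles):
    """Deny by default: tools missing from the policy are never callable."""
    return bool(set(user_roles) & TOOL_POLICY.get(tool_name, set()))


chunks = [
    Chunk("c1", "Refund policy", frozenset({"support", "finance"})),
    Chunk("c2", "Payroll bands", frozenset({"hr"})),
]
visible = filter_by_access(chunks, ["support"])
```

Applying the same role set to retrieval and to tool calls is what keeps an agent from reading or acting beyond what the user is allowed to do.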

| Aspect | Standard RAG | Agentic RAG |
|---|---|---|
| Retrieval Purpose | Answer generation | Planning + tool execution |
| Context Impact | Bad answer | Wrong action taken |
| Error Compounding | Single-step failure | Multi-step reinforcement |
| Freshness Needs | Tolerates stale data | Requires live/near-real-time data |
| Governance Scope | Index access only | Index + tool + action controls |
| Operational Risk | Response quality | Autonomous execution loops |

AI-ready data products are named, documented, and governed representations of enterprise knowledge built for reuse in AI systems. They treat knowledge as a product with a clear contract, so structure stays stable, meaning stays consistent, and updates stay predictable.

AI readiness comes from standardization plus retrieval context. Standardization means canonical fields, consistent naming, stable IDs, and normalized units or time zones so the same concept looks the same across sources. Retrieval context comes from metadata such as ownership, effective dates, freshness timestamps, sensitivity tags, and domain labels. It enables retrieval to select the appropriate version and avoid restricted content.
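As a rough illustration of how retrieval metadata lets a system pick the right version and skip restricted content, consider the sketch below. The `PolicyRecord` fields and the `select_version` helper are assumptions made for this example, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyRecord:
    policy_id: str        # stable ID shared across sources
    title: str
    body: str
    owner: str            # ownership metadata
    effective_from: date  # effective date of this version
    sensitivity: str      # e.g. "public", "internal", "restricted"
    domain: str           # domain label


def select_version(records, policy_id, as_of, allowed_sensitivity):
    """Return the latest version in effect on `as_of` that the caller may read, or None."""
    candidates = [
        record for record in records
        if record.policy_id == policy_id
        and record.effective_from <= as_of
        and record.sensitivity in allowed_sensitivity
    ]
    return max(candidates, key=lambda record: record.effective_from, default=None)


records = [
    PolicyRecord("POL-7", "Refunds", "30-day window", "finance-ops", date(2024, 1, 1), "internal", "billing"),
    PolicyRecord("POL-7", "Refunds", "45-day window", "finance-ops", date(2025, 1, 1), "internal", "billing"),
    PolicyRecord("POL-9", "Salary bands", "Confidential", "hr", date(2024, 6, 1), "restricted", "hr"),
]
current = select_version(records, "POL-7", date(2025, 3, 1), {"public", "internal"})
```

Without the `effective_from` and `sensitivity` fields, both versions of the refund policy would look equally valid to a similarity search.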

AI-ready data products also include guardrails that prevent weak inputs from reaching the model. Validations catch missing fields, failed checks, or policy violations before indexing. Chunking follows meaning, so policies align to sections and effective dates, tickets align to timelines and resolutions, and docs align to product versions or SKUs.
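The two guardrails above, validation before indexing and chunking along meaning, might look like the following sketch. It assumes records are plain dictionaries, that the contract requires the four fields in `REQUIRED_FIELDS`, and that sections are marked with markdown-style `## ` headings. All of these are illustrative choices.

```python
import re

# Assumed contract: fields every record must carry before it can be indexed.
REQUIRED_FIELDS = ("doc_id", "title", "body", "effective_from")


def validate(record):
    """Return a list of problems; an empty list means the record may be indexed."""
    return [f"missing {name}" for name in REQUIRED_FIELDS if not record.get(name)]


def chunk_by_section(record):
    """Split the body on '## ' headings so that each chunk covers one section."""
    preamble, *sections = re.split(r"^## +", record["body"], flags=re.MULTILINE)
    pieces = []
    if preamble.strip():
        # Text before the first heading is kept as its own chunk.
        pieces.append(("(preamble)", preamble))
    for section in sections:
        heading, _, text = section.partition("\n")
        pieces.append((heading.strip(), text))
    return [
        {
            "doc_id": record["doc_id"],
            "section": heading,
            "effective_from": record["effective_from"],  # metadata travels with each chunk
            "text": text.strip(),
        }
        for heading, text in pieces
    ]


record = {
    "doc_id": "POL-7",
    "title": "Refunds",
    "effective_from": "2025-01-01",
    "body": "## Eligibility\nOrders under 30 days.\n## Process\nOpen a ticket.",
}
problems = validate(record)
chunks = chunk_by_section(record) if not problems else []
```

Each chunk keeps the document ID and effective date, so retrieval can still filter by version after the text has been split.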

A Common Data Model (CDM) makes this reusable at scale, so multiple agentic RAG and LLM apps can rely on the same governed product without rebuilding mapping, chunking, and refresh rules.

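Achieving AI-ready data requires a platform built for AI consumption, not just data movement. The platform must ingest reliably from structured and unstructured sources, standardize data with retrieval-grade metadata, enforce active governance through validation and access controls, and deliver reusable products to vector databases, indexes, and warehouses without per-destination custom logic.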

When these capabilities are integrated into a single platform, organizations can finally move from fragile experiments to scalable, production-grade AI systems.

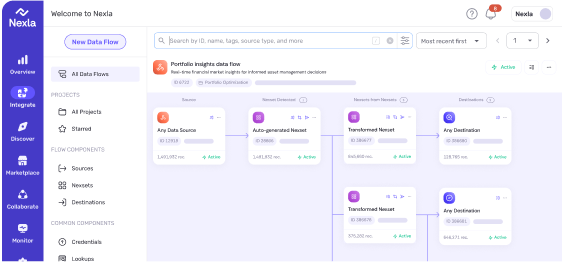

Nexla operationalizes this approach by treating AI-ready data as first-class products rather than just pipeline outputs. It begins with Nexsets, which package data along with schemas and controls. This ensures that downstream consumers see a consistent shape, regardless of source format or protocol.

Next, the internal contract is enforced through the Common Data Model. It defines the canonical representation once, then maps enterprise sources into it.

Policies, tickets, and product entities share consistent semantics, improving retrieval relevance and reducing duplication.
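To make the idea of defining the canonical representation once and mapping sources into it concrete, here is a generic sketch. It does not use Nexla's API. The source names, field names, and the `to_canonical` helper are hypothetical, and naive timestamps are assumed to be in UTC.

```python
from datetime import datetime, timezone

# Hypothetical per-source mappings: canonical field -> field name in that source.
FIELD_MAPS = {
    "helpdesk": {"ticket_id": "id", "customer_id": "requester", "opened_at": "created"},
    "crm": {"ticket_id": "CaseNumber", "customer_id": "AccountId", "opened_at": "CreatedDate"},
}


def to_canonical(source, raw):
    """Map a raw record from `source` into the shared canonical ticket shape."""
    mapping = FIELD_MAPS[source]
    record = {canonical: raw[field] for canonical, field in mapping.items()}
    record["ticket_id"] = str(record["ticket_id"])  # stable string IDs

    # Normalize timestamps to UTC so the same moment looks the same across sources.
    opened = datetime.fromisoformat(record["opened_at"])
    if opened.tzinfo is None:
        opened = opened.replace(tzinfo=timezone.utc)  # assumption: naive values are UTC
    record["opened_at"] = opened.astimezone(timezone.utc).isoformat()

    record["source"] = source  # keep provenance for lineage
    return record


from_helpdesk = to_canonical(
    "helpdesk", {"id": 42, "requester": "C-9", "created": "2025-03-01T10:00:00+02:00"}
)
from_crm = to_canonical(
    "crm", {"CaseNumber": "42", "AccountId": "C-9", "CreatedDate": "2025-03-01T08:00:00"}
)
```

Both records end up with the same keys, ID format, and timestamp, so downstream chunking and retrieval logic only needs to be written once.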

Nexsets also support production requirements. They provide validation to keep low-quality content out of retrieval, versioning to manage changes safely, and lineage or auditability so answers can be traced back to the exact source and transformation path.

Finally, governed delivery is driven by data flows, ensuring the same standardized product can be routed to vector stores, LLM pipelines, warehouses, and applications. Controls travel with the product, enabling least privilege access while still retrieving the best available context for authorized users. Publish once, and multiple teams and agentic RAG apps consume the same governed version.

Pipeline-first retrieval is a fast start, but it’s a fragile way to scale. Reliable agentic RAG depends on AI-ready data with standardized structure, retrieval-grade metadata, enforced governance, and reusable delivery.

Schedule a demo to see how enterprise data can be transformed into reusable, AI-ready Nexsets for production-grade agentic RAG and LLM applications.

Agentic RAG extends standard retrieval-augmented generation by adding autonomous planning and tool use. The LLM doesn’t just retrieve and answer—it decides what to retrieve, which tools to call, and what steps to execute. This makes data quality critical since retrieval drives decisions, not just responses.

Agentic RAG fails when retrieved context is incomplete, stale, or missing identifiers, causing agents to route tasks to wrong tools or workflows. Compounding context errors across iterative steps, operational loops from unclear freshness signals, and governance mismatches create compliance risks and unstable costs.

AI-ready data products are governed representations of enterprise knowledge with standardized schemas, consistent naming, stable IDs, and retrieval metadata (ownership, freshness, sensitivity tags). They include validations that prevent weak inputs and semantic chunking aligned to meaning—enabling reliable RAG at scale.

A Common Data Model (CDM) normalizes enterprise sources into canonical entities with consistent fields and terminology. This prevents retrieval from mixing incompatible contexts, reduces duplication across sources, and enables multiple RAG applications to reuse the same governed product without rebuilding mapping logic.

Essential capabilities include: (1) reliable ingestion from structured and unstructured sources, (2) standardization with retrieval-grade metadata, (3) active governance with validation and access controls, and (4) reusable delivery to vector databases, indexes, and warehouses without per-destination custom logic.

Nexla’s AI-ready data products, called Nexsets, package data with schemas, validation, versioning, and lineage controls. They enforce Common Data Model standards, prevent low-quality content from reaching retrieval, and deliver governed products to multiple destinations, enabling teams to publish once and reuse across RAG applications.
