The Future of Business is Interconnected

In a world where businesses depend on each other more than ever, making intercompany data flow as seamlessly as internal data is a core necessity. Today’s hyper-connected ecosystem means that data is no longer confined within the boundaries of a single organization. Enterprises increasingly rely on intercompany data (information exchanged between partners, suppliers, customers, and other external entities) to fuel smarter decisions, improve collaboration, and unlock new business opportunities.

But with this opportunity comes complexity. To keep pace, enterprises must learn to manage data flowing across partners, suppliers, and customers with the same speed and rigor as internal data. The companies that master this capability will gain a competitive advantage and accelerate revenue.

What Is Intercompany Data?

Intercompany data refers to information shared across organizational boundaries. It is more than a set of file transfers or API calls: it forms the backbone of collaborative digital ecosystems. This type of data exchange is foundational to the digital supply chain, B2B commerce, and modern platform ecosystems.

Common examples across industries include:

- Retail & E-commerce: Product catalogs, inventory levels, pricing, and order status data flowing across suppliers, retailers, and marketplaces

- Insurance: Claims data, underwriting information, and policy details shared between insurers, reinsurers, brokers, and third-party administrators

- Asset Management: Portfolio positions, holdings data, and market information exchanged across fund managers, custodians, and data providers

- Travel & Hospitality: Hotel availability, flight schedules, weather data, maps, and landmark information shared across travel agencies, booking platforms, and service providers

- Digital Advertising: Campaign performance metrics, audience segments, spend data, and ROI analytics flowing between demand-side platforms (DSPs), publishers, and agencies

- Manufacturing & IoT: Product data from suppliers, usage and diagnostics data from IoT partners, order and fulfillment data between manufacturers and logistics providers

- B2B Marketplaces: Pricing and inventory data, compliance documentation, and vendor ecosystem information shared across marketplace platforms and sellers

Why Intercompany Data Matters

Effective intercompany data flows unlock significant business value across multiple dimensions:

- Operational Efficiency: Real-time data sharing reduces manual processes, cuts errors, and accelerates decision-making cycles. When inventory levels update automatically across supply chain partners, teams can prevent stockouts and overstock situations instead of reacting to them.

- Stronger Partnerships: Transparent data flows build trust and enable deeper collaboration. Partners can make better decisions when they have visibility into each other’s operations, leading to more strategic and mutually beneficial relationships.

- Enhanced Customer Experience: Personalized, consistent experiences rely on having a complete view of customer data across touchpoints. When companies share relevant customer insights appropriately, they can deliver seamless experiences together.

- Data-driven Growth: External data provides crucial market context and trend insights that internal data alone cannot offer. Companies can identify new opportunities, optimize pricing strategies, and predict market shifts more accurately.

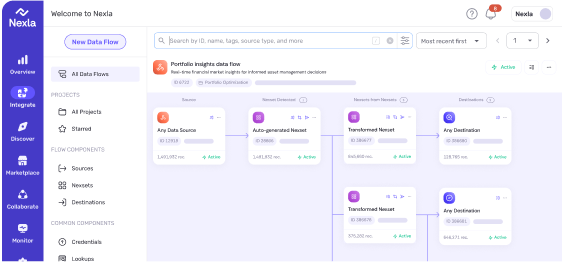

Yet despite these clear benefits, many organizations struggle to make intercompany data flows reliable, secure, and scalable. At Nexla, we have been dedicated to solving this challenge. Our intercompany data platform supports enterprises across sectors including e-commerce, delivery, asset management, healthcare, and travel.

Top Challenges in Managing Intercompany Data

Keeping data consistent across companies matters, especially when inconsistencies hurt customer satisfaction and profits, but it is not easy. Keeping data consistent inside a single company is hard enough. Add X suppliers and Y customers, each with its own data models, formats, protocols, and update speeds, and the data variety problem becomes X × Y harder!

1. Data Format and Schema Inconsistencies

Every organization has evolved its own data standards, formats, and API structures. One partner might send product information as JSON via REST APIs, while another uses XML through SOAP, and a third relies on CSV files via SFTP. Normalizing this disparate data is time-consuming, error-prone, and requires constant maintenance as schemas evolve.

2. API and Other Protocol Inconsistencies

Data variety is hard for the receiver, and it is no easier for the sender. Receivers often publish an API and expect senders to simply integrate with it, but those integrations are rarely simple for senders. Where would a sender find the product-team cycles, the expertise, and the priority to build each one? If the same sender has to deliver data to multiple partners, the effort grows quickly: it must send different slices of data to different partners in different formats, as files to some and through APIs to others.

3. Security, Privacy, and Compliance

Sharing data outside the corporate firewall raises significant concerns around access control, encryption, data residency, and regulatory compliance. Organizations must navigate complex requirements like GDPR, HIPAA, SOX, and industry-specific regulations while ensuring that sensitive data remains protected throughout its journey across organizational boundaries.

4. Data Freshness and Synchronization

Latency in updates or asynchronous delivery can lead to outdated insights and poor user experiences. When inventory data is hours or days behind reality, customers face stockouts, partners make decisions on stale information, and opportunities are missed. Managing real-time synchronization across multiple partners with different technical capabilities grows more complex with every partner added.

5. Lack of Observability and Monitoring

Traditional integration approaches make it difficult to track data lineage, monitor quality, and troubleshoot issues. Questions like “Where did this data come from?”, “Who modified it?”, “Was it successfully delivered?”, and “Is the transformation working correctly?” become nearly impossible to answer without significant manual investigation.

6. Scalability Across Multiple Partners

Supporting data exchange with one or two partners might be manageable through custom integrations, but scaling to dozens or hundreds of partners breaks traditional approaches. Each new partner potentially requires custom development, testing, and ongoing maintenance, creating an unsustainable burden on IT teams.

The Solution: Data Products and Modern Integration Platforms

The key to simplifying intercompany data lies in treating shared data as data products—reusable, governed units of data that simplify access, documentation, security, and usage across organizational boundaries. This approach decouples data producers from consumers and dramatically reduces integration complexity.

Modern data platforms such as Nexla solve intercompany challenges through several key capabilities:

Universal Connectivity

Rather than building custom integrations for each partner, modern platforms offer out-of-the-box support for hundreds of systems across APIs, databases, files, and SaaS applications. Whether partners use EDI, SFTP, REST APIs, or proprietary formats, universal connectors enable seamless data exchange without custom development.

Automated Data Normalization via a Common Data Model

Advanced platforms automatically detect schemas, infer metadata, and transform data into a unified common data model using low-code/no-code tools. This removes much of the manual effort of mapping between different incoming data models and keeps all partner interactions consistent. Once incoming data is transformed into the common data model, it is ready for consumption.
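To make the common-data-model idea concrete, here is a minimal Python sketch. It assumes three hypothetical partner feeds (JSON, XML, and CSV) whose field names are invented for illustration. Each partner gets one small mapping function into a shared record shape. This is not Nexla's implementation or API, and it skips the automatic schema detection described above.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical common data model: every partner record is mapped to these keys.
COMMON_FIELDS = {"sku", "name", "price", "quantity"}


def from_json(payload: str) -> list[dict]:
    # Assumed partner A shape: {"items": [{"id", "title", "unit_price", "qty"}]}
    return [
        {"sku": it["id"], "name": it["title"],
         "price": float(it["unit_price"]), "quantity": int(it["qty"])}
        for it in json.loads(payload)["items"]
    ]


def from_xml(payload: str) -> list[dict]:
    # Assumed partner B shape:
    # <products><product sku="..."><name/><price/><stock/></product></products>
    root = ET.fromstring(payload)
    return [
        {"sku": p.get("sku"), "name": p.findtext("name"),
         "price": float(p.findtext("price")), "quantity": int(p.findtext("stock"))}
        for p in root.iter("product")
    ]


def from_csv(payload: str) -> list[dict]:
    # Assumed partner C shape: header row "SKU,Description,Price,OnHand"
    return [
        {"sku": r["SKU"], "name": r["Description"],
         "price": float(r["Price"]), "quantity": int(r["OnHand"])}
        for r in csv.DictReader(io.StringIO(payload))
    ]


# One mapping per incoming format; downstream code only sees the common model.
PARSERS = {"json": from_json, "xml": from_xml, "csv": from_csv}


def normalize(fmt: str, payload: str) -> list[dict]:
    records = PARSERS[fmt](payload)
    for rec in records:
        # Guard: every record must carry exactly the common-model fields.
        if set(rec) != COMMON_FIELDS:
            raise ValueError(f"record does not match common model: {rec}")
    return records


if __name__ == "__main__":
    feeds = {
        "json": '{"items": [{"id": "A-1", "title": "Widget", "unit_price": "9.99", "qty": 5}]}',
        "xml": '<products><product sku="B-7"><name>Gadget</name>'
               '<price>4.50</price><stock>12</stock></product></products>',
        "csv": "SKU,Description,Price,OnHand\nC-3,Gizmo,2.25,40\n",
    }
    for fmt, payload in feeds.items():
        print(fmt, normalize(fmt, payload))
```

With this design, onboarding a new partner means writing one more mapping into the common model. Downstream consumers never have to learn another format.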

Data Productization

By treating each shared dataset as a governed data product, organizations can easily track, version, and reuse data across company boundaries. This approach provides clear ownership, documentation, and access patterns that scale efficiently.

Bi-directional Simplicity

Bi-directional simplicity means making it easy for receivers to ingest a variety of data from many senders, and equally easy for senders to deliver data to many partners. A data product approach supports both directions when data products are easy to build and polyglot, meaning a single data product can deliver data in different formats to different target systems.
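A polyglot data product can be sketched as one dataset plus a per-partner delivery spec. In this minimal Python example, the partner names, fields, and specs are hypothetical. Only the rendering step is shown. Actual transport, such as an SFTP upload for one partner or an API call for another, is left out.

```python
import csv
import io
import json

# Hypothetical data product: one governed dataset kept in the common model.
PRODUCT_ROWS = [
    {"sku": "A-1", "name": "Widget", "price": 9.99, "quantity": 5, "cost": 6.10},
    {"sku": "B-7", "name": "Gadget", "price": 4.50, "quantity": 0, "cost": 2.80},
]

# Hypothetical per-partner delivery specs: which fields (slice) and which format.
PARTNER_SPECS = {
    "marketplace": {"fields": ["sku", "name", "price"], "format": "json"},
    "logistics": {"fields": ["sku", "quantity"], "format": "csv"},
}


def render(rows: list[dict], fields: list[str], fmt: str) -> str:
    # Keep only the fields this partner is meant to receive.
    sliced = [{f: r[f] for f in fields} for r in rows]
    if fmt == "json":
        return json.dumps(sliced)
    if fmt == "csv":
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=fields, lineterminator="\n")
        writer.writeheader()
        writer.writerows(sliced)
        return buf.getvalue()
    raise ValueError(f"unsupported format: {fmt}")


def deliver_all(rows: list[dict], specs: dict) -> dict[str, str]:
    # Same source rows, one rendering per partner.
    return {partner: render(rows, s["fields"], s["format"]) for partner, s in specs.items()}


if __name__ == "__main__":
    for partner, body in deliver_all(PRODUCT_ROWS, PARTNER_SPECS).items():
        print(partner, body, sep="\n")
```

Every partner view is derived from the same rows. As a result, a correction to the data product reaches all partners on the next delivery. The internal `cost` field is never sent unless a spec asks for it.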

Robust Governance and Security

Enterprise-grade platforms provide granular access controls, data masking capabilities, end-to-end encryption, comprehensive audit trails, and compliance certifications. These features make it safe to collaborate across organizations.
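Data masking can be illustrated with a per-partner allowlist. This minimal Python sketch assumes a hypothetical policy table and a secret key held by the data owner. Fields a partner is not entitled to are dropped. Some fields are replaced with a keyed hash (HMAC-SHA256), so a partner can still match records without seeing the raw value. The sketch covers masking only, not encryption or audit trails, and it is not any platform's built-in policy engine.

```python
import hashlib
import hmac

# Hypothetical secret kept by the data owner; never shared with partners.
MASKING_KEY = b"replace-with-a-managed-secret"

# Hypothetical policy: what each partner may see for each field.
#   "clear"  -> value passes through unchanged
#   "pseudo" -> keyed hash, so partners can join on it without the raw value
#   (field not listed) -> dropped entirely
POLICIES = {
    "ad_partner": {"customer_email": "pseudo", "segment": "clear"},
    "fulfillment": {"customer_email": "clear", "shipping_zip": "clear"},
}


def pseudonymize(value: str) -> str:
    return hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def apply_policy(record: dict, partner: str) -> dict:
    policy = POLICIES[partner]  # unknown partner raises KeyError: deny by default
    out = {}
    for field, rule in policy.items():
        if field not in record:
            continue
        if rule == "clear":
            out[field] = record[field]
        elif rule == "pseudo":
            out[field] = pseudonymize(str(record[field]))
    return out


if __name__ == "__main__":
    rec = {"customer_email": "ana@example.com", "segment": "outdoor",
           "shipping_zip": "94105", "card_last4": "4242"}
    print(apply_policy(rec, "ad_partner"))
    print(apply_policy(rec, "fulfillment"))
```

The key design choice is deny-by-default. A new sensitive field added to the source stays private until someone explicitly grants it to a partner.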

Real-time and Batch Processing

Supporting both streaming and scheduled data pipelines keeps information as fresh as each use case requires, whether updates need to happen every second or once per day. This flexibility accommodates partners with different technical capabilities and business requirements.

End-to-end Observability

Comprehensive monitoring and alerting capabilities track every data flow from source to destination, enabling teams to catch and resolve issues before they become business problems. Data lineage, quality metrics, and performance monitoring provide full visibility into intercompany data operations.
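A freshness check is one small piece of this kind of monitoring. The Python sketch below assumes hypothetical feed names, per-feed SLAs, and a record of when each feed was last delivered successfully. It flags any feed whose most recent delivery is older than its SLA, including feeds that have never delivered.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness SLAs, one per partner feed.
FRESHNESS_SLA = {
    "supplier_a_inventory": timedelta(minutes=15),  # near-real-time feed
    "supplier_b_catalog": timedelta(days=1),        # daily batch file
}


def stale_feeds(last_delivered: dict[str, datetime], now: datetime) -> list[str]:
    """Return feeds whose latest successful delivery is older than their SLA.

    A feed with no recorded delivery is reported as stale too.
    """
    alerts = []
    for feed, sla in FRESHNESS_SLA.items():
        ts = last_delivered.get(feed)
        if ts is None or now - ts > sla:
            alerts.append(feed)
    return alerts


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    last = {
        "supplier_a_inventory": now - timedelta(minutes=40),
        "supplier_b_catalog": now - timedelta(hours=3),
    }
    print(stale_feeds(last, now))  # → ['supplier_a_inventory']
```

Each feed carries its own SLA, so the same check covers a streaming inventory feed and a daily batch file side by side. Routing the result to an alerting channel is left out of the sketch.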

Getting Started

Intercompany data represents one of the largest untapped opportunities for business growth and operational efficiency. Enterprises that master the art and science of cross-company data collaboration will build stronger partnerships, deliver better customer experiences, and create sustainable competitive advantages, all of which contribute to revenue acceleration.

The key is moving beyond ad-hoc integrations and custom solutions toward platforms that treat intercompany data as a strategic asset requiring the same rigor and governance as internal data. By embracing data products, universal connectivity, and comprehensive observability, companies can transform intercompany data from a technical challenge into a business accelerator.

The question isn’t whether your enterprise will need to excel at intercompany data—it’s how quickly you can build the capabilities to harness its full potential.