But the debate is missing a more fundamental question: what do captured decision traces actually enable?

Context graphs will make AI dramatically better at reasoning. But they won’t teach it judgment. And in business, asymmetric value comes from judgment, not reasoning. We’ve seen this movie before with self-driving. Despite comprehensive decision traces and massive amounts of data, it has been incredibly hard to bridge the gap from reasoning to judgment.

Let’s not make the mistake of assuming that more context will let reasoning solve judgment problems.

What Are Context Graphs?

A context graph is more than a log of data. It’s a record of the reasoning behind decisions. When a renewal agent proposes a 20% discount despite a 10% policy cap, the context graph captures not just the discount, but the approval chain, the incident history from PagerDuty, the escalation threads from Zendesk, and the precedent from prior exceptions.
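To make the definition concrete, here is a minimal Python sketch of what a single decision-trace record might hold for the renewal example above. The `DecisionTrace` class, its field names, and the IDs are hypothetical illustrations, not the schema of any real context-graph product. The PagerDuty and Zendesk entries are plain reference strings, not calls to either service's API.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionTrace:
    """Hypothetical record of one decision and the context behind it."""
    decision_id: str
    action: str                # what was decided
    policy_limit: float        # the rule that applied (e.g. a 10% discount cap)
    proposed_value: float      # what the agent proposed
    approval_chain: list[str] = field(default_factory=list)
    # Source system -> references to supporting evidence (incidents, threads).
    evidence: dict[str, list[str]] = field(default_factory=dict)
    # IDs of prior exceptions cited as precedent.
    precedents: list[str] = field(default_factory=list)

    def is_exception(self) -> bool:
        # An exception is any proposal that exceeds the policy limit.
        return self.proposed_value > self.policy_limit


trace = DecisionTrace(
    decision_id="renewal-001",           # hypothetical ID
    action="renewal_discount",
    policy_limit=0.10,
    proposed_value=0.20,
    approval_chain=["account_exec", "vp_sales"],
    evidence={
        "pagerduty": ["incident-123"],   # hypothetical reference
        "zendesk": ["escalation-456"],   # hypothetical reference
    },
    precedents=["renewal-042"],
)
print(trace.is_exception())  # True
```

Note what the record does not contain: the alternatives the approvers considered and declined. It captures the decision that was made, not the ones that weren't.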

The thesis: if we capture these decision traces comprehensively, AI agents will learn to navigate enterprise decision-making the same way they learned to write code and generate content.

There’s just one problem.

The Critical Distinction: Reasoning vs. Judgment

The context graphs movement rests on a powerful parallel:

What happened with LLMs and knowledge:

- We fed LLMs massive amounts of human knowledge (books, articles, code)

- They learned patterns in how humans generate content

- Result: Remarkably good at generating coherent text and functional code

The implicit assumption about context graphs:

- Feed LLMs massive amounts of decision traces

- They’ll learn patterns in how humans reason through decisions

- Result: Remarkably good at making decisions themselves

This assumes business decisions are primarily reasoning problems. They’re not. They’re judgment problems.

Reasoning problems are deterministic given sufficient context. Examples include calculating optimal inventory levels, routing support tickets, generating sales forecasts, and approving expenses within policy. Given complete information, there’s a “correct” answer. Context graphs will genuinely help here.

Judgment problems involve weighing incommensurable values under uncertainty. The same inputs can demand opposite decisions depending on latent intent, unobservable state, and relationship dynamics that exist outside any system.

You can’t feed reasoning traces to solve judgment problems. It’s a category error.

The Self-Driving Parallel

Autonomous driving is the perfect example. It has:

- 100+ years of codified rules

- Billions of training miles

- Comprehensive sensor data

- Massive investment from world-class engineers

Yet full autonomy has proven hard to achieve, because driving is as much a judgment problem as a rules-and-reasoning problem. Business decisions face the same challenges, at greater complexity:

| Challenge | Driving | Business |
|---|---|---|
| Interpreting intent | Pedestrian at a crosswalk: waiting for the light or about to jaywalk? The same scene requires different responses. | Customer requests a discount: price shopping or genuinely constrained? The same email requires different responses. |
| Reading unspoken cues | Blinker on: actually merging, or left on three turns ago? Watch the wheels, not the signal. | “I strongly suggest we make an exception”: suggestion, directive, or political cover? Message ≠ meaning. |
| Applying unwritten rules | Zipper merges, courtesy waves, and when to yield all vary by region and situation. None of this is in any rulebook. | Which issues need escalation? Which exceptions need paper trails? Official process will always differ from actual practice, depending on the situation. |
| Asymmetric risk | Hesitating costs seconds; misjudging costs lives. Context determines acceptable risk. | Losing a $10K deal costs $10K; damaging a relationship could cost millions. Automation can’t calculate this. |

These examples demonstrate that the same data point demands different actions depending on context outside any system.

This is why Arvind Jain is right: “You can’t capture the why, only the how.” Even comprehensive process traces miss the judgment layer.

Will AI eventually learn judgment?

Maybe, but judgment depends on counterfactuals that context graphs cannot capture. When a leader approves a “strategic” exception, the graph records the approval, not the similar decisions she declined, the precedents she avoided setting, or the organizational capital she chose not to spend. Judgment is defined by the roads not taken; context graphs only see the roads traveled. That is why trying to learn judgment from decision traces is fundamentally different from learning reasoning from data.

Context Graphs Are Necessary But Not Sufficient

Context graphs will make AI agents dramatically more capable at reasoning tasks. But decisions that create asymmetric value (building companies, developing talent, navigating crises) will remain human for the foreseeable future.

Why? Because we’re feeding reasoning traces to solve judgment problems.

The limitation isn’t capturing context. It’s the category error: better reasoning enables decision support, not decision replacement.

The opportunity isn’t replacing human judgment. It’s augmenting it.

What Infrastructure Builders Should Actually Build

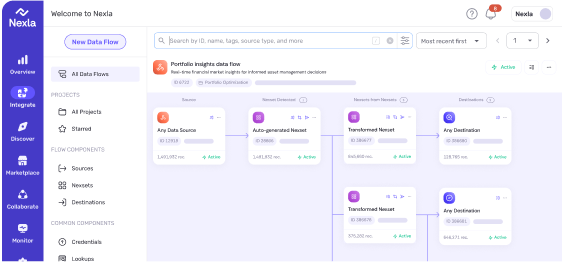

As CEO of Nexla and creator of Express.dev, I’m deeply invested in building the context engineering layer.

Beyond improving reasoning, context graphs also unlock:

- Governance through precedent. Surface how similar cases were handled (what was approved or rejected, and under what conditions), making human judgment faster and more consistent.

- Error detection before execution. Validate planned workflows against historical patterns to catch reasoning errors before they become expensive mistakes.

- Learning loops from overrides. When humans override agent decisions, those overrides become training data for systematically improving reasoning models.
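Governance through precedent and pre-execution checks can be sketched as a lookup over past cases. This is a minimal Python sketch under a deliberately simple, assumed similarity rule: a past case counts as similar if it has the same action type and a value within a fixed tolerance. `PastCase`, `similar_cases`, `precedent_summary`, and the sample history are all hypothetical; a production system would likely use richer matching over the full context graph.

```python
from dataclasses import dataclass


@dataclass
class PastCase:
    """Hypothetical summary of one previously decided case."""
    case_id: str
    action: str
    value: float
    approved: bool
    conditions: str


def similar_cases(history: list[PastCase], action: str, value: float,
                  tolerance: float = 0.05) -> list[PastCase]:
    """Assumed rule: same action type, value within `tolerance`."""
    return [
        c for c in history
        if c.action == action and abs(c.value - value) <= tolerance
    ]


def precedent_summary(history: list[PastCase], action: str, value: float,
                      tolerance: float = 0.05) -> dict:
    """Summarize how similar cases were decided, for a human reviewer."""
    matches = similar_cases(history, action, value, tolerance)
    approved = [c for c in matches if c.approved]
    return {
        "matches": len(matches),
        "approved": len(approved),
        "rejected": len(matches) - len(approved),
        "approval_conditions": [c.conditions for c in approved],
        # No precedent at all is a signal to slow down before execution.
        "needs_review": len(matches) == 0,
    }


history = [  # hypothetical sample data
    PastCase("r-1", "renewal_discount", 0.18, True, "multi-year commitment"),
    PastCase("r-2", "renewal_discount", 0.20, False, "no executive sponsor"),
    PastCase("r-3", "renewal_discount", 0.05, True, "within policy"),
]
print(precedent_summary(history, "renewal_discount", 0.20))
# {'matches': 2, 'approved': 1, 'rejected': 1,
#  'approval_conditions': ['multi-year commitment'], 'needs_review': False}
```

The output is decision-ready context for a reviewer: how many comparable cases exist, how they went, and the conditions attached to approvals. An empty match set is treated as a reason for review rather than a green light.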

The pattern: context graphs don’t replace judgment. They make reasoning scale, coordinate, and validate itself. That’s the real opportunity.

Here’s what we can do in practice:

- Build comprehensive context capture. The opportunity is real. Capture decision traces, approval chains, cross-system workflows. This is valuable infrastructure.

- Design override mechanisms from day one. Every automated decision needs a transparent “why did the agent choose this?” explanation and a low-friction human override path. Not as an afterthought, but within the core architecture.

- Focus on “decision-ready context,” not “decision-making.” Surface the right precedents, flag similar past cases, highlight policy exceptions. Let agents prepare the decision and humans make the call.

- Create asymmetric escalation patterns. Automate low-stakes decisions fully. For high-stakes decisions, treat agents as research assistants. The human approval threshold should be inversely proportional to consequence magnitude.

- Build learning loops that capture human overrides. When humans override agent recommendations, that’s not a bug. It’s the most valuable training data. Your system should treat overrides as ground truth for where judgment differs from reasoning.
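The escalation and override practices above can be combined in one small routing component. This Python sketch assumes a single dollar cutoff (`auto_limit_usd`, a hypothetical value) as a simplified, step-function stand-in for a threshold that tightens as consequences grow. `Recommendation`, `Router`, and the sample decisions are illustrative names, not an existing API. Every recommendation carries its rationale, and any human decision that differs from the agent's is stored as an override record.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    """Hypothetical agent recommendation awaiting execution or review."""
    decision_id: str
    action: str
    rationale: str       # the "why did the agent choose this?" explanation
    stakes_usd: float    # estimated consequence magnitude


@dataclass
class Router:
    auto_limit_usd: float = 10_000.0  # hypothetical cutoff for full automation
    overrides: list = field(default_factory=list)

    def route(self, rec: Recommendation) -> str:
        # Low stakes: execute automatically. High stakes: the agent only
        # prepares the decision and a human makes the call.
        if rec.stakes_usd <= self.auto_limit_usd:
            return "auto_execute"
        return "human_review"

    def record_override(self, rec: Recommendation, human_action: str,
                        reason: str) -> None:
        # Keep every disagreement as a labeled example of where human
        # judgment diverged from the agent's reasoning.
        if human_action != rec.action:
            self.overrides.append({
                "decision_id": rec.decision_id,
                "agent_action": rec.action,
                "agent_rationale": rec.rationale,
                "human_action": human_action,
                "reason": reason,
            })


router = Router()
small = Recommendation("d-1", "approve_expense", "within policy", 500)
big = Recommendation("d-2", "grant_20pct_discount",
                     "churn risk after recent incidents", 250_000)
print(router.route(small))  # auto_execute
print(router.route(big))    # human_review
router.record_override(big, "grant_12pct_discount",
                       "relationship can absorb a smaller concession")
print(len(router.overrides))  # 1
```

A real system would likely grade escalation over several tiers and persist overrides for later training, but the shape is the same: the agent explains itself, stakes decide who makes the call, and every disagreement is kept.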

The companies that win will not replace human judgment. They will design systems that know exactly when to defer to it.