How is AI transforming data engineering? AI enables prompt-driven pipelines in which data engineers describe what they need in natural language instead of hand-writing complex ETL code. LLMs can automate schema detection, data mapping, transformation generation, and quality checks. This reduces the data preparation work that can consume up to 80% of an engineer's time and makes data engineering more accessible to non-technical users.

Introduction

Traditional data engineering relies heavily on extract, transform, and load (ETL) workflows. As data volumes surge, these pipelines often become rigid, complex, and difficult to scale. Engineers can spend up to 80% of their time on data preparation tasks such as cleaning, integrating, and validating datasets, which slows progress and drives up operational costs.

But things are starting to change with the rise of generative AI. Users can leverage large language models (LLMs) and AI agents to automate data curation. While the process requires less coding, crafting effective prompts remains a key challenge.

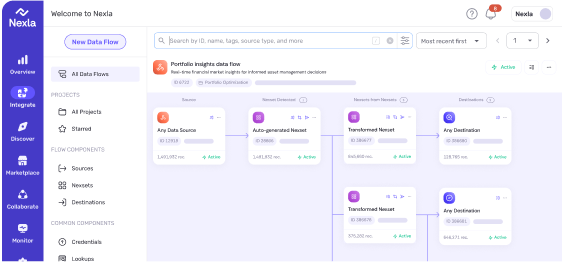

That’s where platforms like Nexla make a difference. They simplify the complexity behind the scenes, allowing teams to build scalable, AI-ready pipelines without writing code.

In this article, we’ll break down how prompts are changing the way data work happens, where AI fits into the data engineering process, the common pitfalls, and how Nexla helps teams solve them.

From Code to Prompts: What It Means

Prompt engineering is the process of writing effective instructions for a model so it consistently generates content that meets your requirements. These instructions or prompts directly affect the accuracy and precision of the output.

Prompt-driven, AI-based workflows help achieve:

- Faster development with outputs generated in seconds

- Reduced manual effort by eliminating the need to write complex logic

- Broader accessibility for non-technical users

- Improved consistency through reduced human error
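
To make the idea of an "effective instruction" concrete, here is a minimal sketch of a structured prompt builder. The function name `build_transformation_prompt`, the prompt wording, and the sample schema are all illustrative assumptions, not part of any specific platform or model API. The point is only that a prompt with an explicit task, schema, and numbered constraints tends to be easier to review and reuse than free-form text.

```python
import json


def build_transformation_prompt(task, schema, constraints):
    """Assemble a structured prompt from a task, a schema, and explicit rules."""
    lines = [
        "You are assisting with a data pipeline transformation.",
        f"Task: {task.strip()}",
        "Input schema (JSON):",
        json.dumps(schema, indent=2, sort_keys=True),
        "Constraints:",
    ]
    # Numbered constraints make it easier to check a response rule by rule.
    lines += [f"{i}. {rule}" for i, rule in enumerate(constraints, start=1)]
    lines.append("Return only the transformation rules as JSON.")
    return "\n".join(lines)


# Hypothetical example inputs, used only for illustration.
prompt = build_transformation_prompt(
    task="Normalize customer records for the analytics warehouse",
    schema={"cust_name": "string", "signup_dt": "string"},
    constraints=["Parse signup_dt as an ISO 8601 date", "Do not drop any rows"],
)
print(prompt)
```

The resulting string would then be sent to whichever model the team uses; that call is deliberately left out because it depends on the provider.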

AI-Powered Use Cases in Data Engineering

The role of AI in data engineering is still evolving, with its use cases varying across organizations. Below are a few examples of how it’s being used in practice:

Schema Detection

Engineers can prompt LLMs to describe data sources and identify relevant fields even in unstructured data. They can also generate recommendations for schema normalization or transformation strategies to improve compatibility and usability.
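
As a rough illustration, the sketch below infers a simple schema summary from sample records in plain Python. It is not an LLM and not how any particular product detects schemas; it is a deterministic baseline whose output could be pasted into a prompt as context or used to sanity-check a model's description. The function name `infer_schema` and the sample data are assumptions made for this example.

```python
def infer_schema(records):
    """Summarize field names, observed types, and nullability from sample rows."""
    schema = {}
    for record in records:
        for field, value in record.items():
            entry = schema.setdefault(field, {"types": set(), "nullable": False})
            if value is None:
                entry["nullable"] = True
            else:
                entry["types"].add(type(value).__name__)
    # A field that is absent from some records is treated as nullable too.
    for field, entry in schema.items():
        if any(field not in record for record in records):
            entry["nullable"] = True
        entry["types"] = sorted(entry["types"])
    return schema


# Hypothetical sample rows with a null, a missing key, and mixed numeric types.
sample = [
    {"id": 1, "email": "a@example.com", "score": 9.5},
    {"id": 2, "email": None, "score": 7},
    {"id": 3, "score": 8.0},
]
schema = infer_schema(sample)
print(schema)
```

A mixed-type field such as `score` here is exactly the kind of finding that a normalization recommendation would address.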

Data Mapping

Generative AI can help align data across systems by learning relationships between fields, columns, and datasets, even when schema names or formats differ. This improves data discoverability across platforms and accelerates cross-system integration.
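
For comparison, the sketch below suggests field mappings using only name similarity from Python's standard `difflib` module. This is a much simpler technique than the learned relationships described above, and it is shown as a baseline that could be used to cross-check model-proposed mappings, not as a replacement for them. The helper names, the field names, and the `0.6` cutoff are assumptions chosen for the example.

```python
import difflib
import re


def normalize(name):
    """Lowercase a field name and drop separators so 'cust_name' ~ 'CustName'."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def suggest_mappings(source_fields, target_fields, cutoff=0.6):
    """Suggest one target per source field by name similarity, or None."""
    by_normalized = {normalize(t): t for t in target_fields}
    suggestions = {}
    for field in source_fields:
        matches = difflib.get_close_matches(
            normalize(field), list(by_normalized), n=1, cutoff=cutoff
        )
        # Fields with no sufficiently similar target are left for human review.
        suggestions[field] = by_normalized[matches[0]] if matches else None
    return suggestions


mapping = suggest_mappings(
    ["cust_name", "email_addr", "signup_dt", "xyz_qq"],
    ["CustomerName", "Email", "SignupDate"],
)
print(mapping)
```

Name similarity alone cannot tell that two differently named fields mean the same thing, which is where metadata, lineage, or a model's suggestions would need to fill the gap.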

Transformation Generation

Instead of manually writing logic to clean or convert data, engineers can rely on AI to suggest transformation rules based on schema patterns, usage context, or past workflows. It can also enrich datasets by referencing external sources, adding more depth for analytics or reporting.
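
One cautious way to use AI-suggested transformations is to have the model emit declarative rules and run them through a small, fixed interpreter rather than executing generated code directly. The sketch below assumes a hypothetical rule format (`op`, `from`, `to`, `field`, `type`); it is an illustration of the pattern, not the format used by any specific tool.

```python
def apply_rules(record, rules):
    """Apply a list of declarative rules to one record and return a new dict."""
    casts = {"int": int, "float": float, "str": str}
    result = dict(record)
    for rule in rules:
        op = rule["op"]
        if op == "rename":
            result[rule["to"]] = result.pop(rule["from"])
        elif op == "cast":
            result[rule["field"]] = casts[rule["type"]](result[rule["field"]])
        elif op == "strip":
            result[rule["field"]] = result[rule["field"]].strip()
        else:
            # Unknown operations are rejected rather than silently skipped.
            raise ValueError(f"unsupported operation: {op}")
    return result


# Rules of the kind a model might suggest (hypothetical example).
rules = [
    {"op": "rename", "from": "cust_name", "to": "customer_name"},
    {"op": "strip", "field": "customer_name"},
    {"op": "cast", "field": "order_total", "type": "float"},
]
row = apply_rules({"cust_name": "  Ada Lovelace ", "order_total": "42.50"}, rules)
print(row)
```

Because the interpreter only supports a known set of operations, a malformed or unexpected suggestion fails loudly instead of altering data in an unreviewed way.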

Data Lineage

LLMs can also be prompted to extract key details from pipeline definitions, spot missing links in a dataset's history, and keep catalogs up to date. This improves traceability and helps teams reconstruct and document entire data pipelines, so the flow of information is easier to follow.
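
To show what a "missing link" in lineage might look like in practice, here is a small sketch that stores lineage as a dictionary of dataset to upstream datasets and reports entries with no recorded origin. The data model, function names, and dataset names are assumptions for illustration; real catalogs use richer structures.

```python
def find_lineage_gaps(lineage, known_sources):
    """Return datasets whose upstream is unrecorded and that are not raw sources."""
    referenced = {up for upstreams in lineage.values() for up in upstreams}
    all_datasets = set(lineage) | referenced
    gaps = [
        name
        for name in all_datasets
        if not lineage.get(name) and name not in known_sources
    ]
    return sorted(gaps)


def upstream_of(lineage, dataset):
    """Collect every recorded ancestor of a dataset by walking the graph."""
    seen, stack = set(), list(lineage.get(dataset, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(lineage.get(current, []))
    return seen


# Hypothetical lineage: fx_rates is used but its origin was never documented.
lineage = {
    "revenue_report": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
}
gaps = find_lineage_gaps(lineage, known_sources={"orders_raw"})
ancestors = upstream_of(lineage, "revenue_report")
print(gaps, ancestors)
```

A list of gaps like this is a natural input for a prompt that asks a model to propose the missing documentation, which a person can then confirm.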

Data Quality and Anomaly Detection

Engineers can use LLMs to scan for anomalies, drift, missing values, and outliers. The model can proactively recommend fixes or flag issues that might otherwise go unnoticed, which helps prevent flawed insights and risky decisions.
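
The checks themselves are often simple statistics, and a deterministic version is useful for verifying whatever a model flags. The sketch below counts missing values and finds outliers with a modified z-score based on the median and the median absolute deviation (MAD). The `3.5` threshold and `0.6745` constant follow a commonly used convention for this score; the function names and sample records are assumptions for the example.

```python
import statistics


def count_missing(records, fields):
    """Count records where each field is absent or None."""
    return {f: sum(1 for r in records if r.get(f) is None) for f in fields}


def find_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # No spread to measure against, so nothing is flagged.
    return [v for v in values if abs(0.6745 * (v - median) / mad) > threshold]


# Hypothetical order records with one null, one missing key, and one extreme value.
records = [
    {"order_id": 1, "amount": 10},
    {"order_id": 2, "amount": 12},
    {"order_id": 3, "amount": None},
    {"order_id": 4, "amount": 11},
    {"order_id": 5, "amount": 13},
    {"order_id": 6, "amount": 12},
    {"order_id": 7, "amount": 11},
    {"order_id": 8, "amount": 100},
    {"order_id": 9},
]
missing = count_missing(records, ["order_id", "amount"])
amounts = [r["amount"] for r in records if r.get("amount") is not None]
outliers = find_outliers(amounts)
print(missing, outliers)
```

The median-based score is used here because a single extreme value inflates the mean and standard deviation enough to hide itself in small samples.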

Impact on Teams and Workflows

AI is changing not just the tools, but how data teams work and manage workflows. Here’s how:

- Increased Efficiency with Automation: Data engineers used to handle repetitive tasks manually, such as code reviews, code generation, and warehouse optimizations. With the help of AI, they can now focus on higher-level problem solving and data strategy.

- Increased Accessibility and Scalability: Data workflows have become easier to build and manage, even for those without deep technical skills. This reduces the need for specialized coding expertise and opens the door for more scalable and flexible solutions across teams.

- Shorter Time-to-Insights: Organizations have achieved business agility by using AI to extract insights from raw data more quickly. This has helped streamline data pipeline automation and reduce bottlenecks, enabling quicker, more informed decision-making.

- Cross-functional Collaboration: Data engineering now involves close coordination between engineers, analysts, and governance teams. Shared tools and documentation improve visibility, ensure compliance, and keep everyone aligned on shared goals.

- Smart Data Discovery and Cataloging: Data engineers spend much of their time finding relevant datasets. AI speeds this up by tagging and organizing data intelligently, which makes datasets easier to discover and the search process faster and more efficient.

Challenges in AI-Driven Data Engineering

While AI brings clear benefits in terms of ease, efficiency, and consistency, it still struggles to deliver on reliability, trust, and scalability. Several challenges continue to affect its performance in real-world data engineering environments:

- Data Governance Risks: Without proper role-based access control, it’s easy for the wrong people to make changes or access parts of the system they shouldn’t. This puts data integrity at risk. In some cases, changes made by mistake can slip past validation steps, leading to serious downstream issues.

- Prompting Limitations with Complex Data Structures: Vanilla prompts can fall short when dealing with legacy systems, nested formats, and custom APIs. Without a technically precise, carefully written prompt, the AI may fail to understand the structures involved or to represent the relationships between them accurately.

- Data Complexity and Variability: Organizations often deal with legacy systems, custom APIs, and datasets in all kinds of formats. AI tools can struggle to make sense of these, especially when they’re not standardized. That makes it hard to build reliable pipelines or trust the output.

- Lack of Contextual Understanding: Unlike humans, AI doesn’t naturally understand context. It may not see how datasets are related, or how one field’s meaning changes depending on the source. Without metadata or lineage info, it can misinterpret data and apply the wrong transformation.

- Poor Data Mapping Accuracy: Matching up columns or fields between systems might seem simple, but inconsistent naming or structure can throw AI off. If the mapping is incorrect, the entire transformation process can fall apart, leading to missing data or inaccurate results.

- Prompt Engineering Skill Gap: Even though prompts are written in natural language, someone without data engineering knowledge may struggle to write a precise, well-crafted one. This can lead to inconsistent and inaccurate results.
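
The governance risk in the list above can be illustrated with a minimal sketch of a role check combined with a validation step, so that a change is applied only when the caller is permitted to apply it and the change does not drop required fields. The roles, permission names, and the shape of the `change` dictionary are hypothetical and do not describe any particular platform's access model.

```python
# Hypothetical role-to-permission table used only for this illustration.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "analyst": {"read", "propose_change"},
    "engineer": {"read", "propose_change", "apply_change"},
}


def is_allowed(role, action):
    """Check whether a role holds the permission for an action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def apply_change(role, change, required_fields):
    """Apply a pipeline change only if the role may do so and it validates."""
    if not is_allowed(role, "apply_change"):
        raise PermissionError(f"role '{role}' may not apply changes")
    missing = [f for f in required_fields if f not in change["output_fields"]]
    if missing:
        raise ValueError(f"change drops required fields: {missing}")
    return "applied"


change = {"output_fields": ["customer_id", "order_total"]}
status = apply_change("engineer", change, required_fields=["customer_id"])
print(status)
```

Keeping the permission check and the validation in the same code path means an AI-generated change cannot reach production by passing only one of the two.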

Nexla’s Role: Simplifying AI-Driven Data Engineering

Nexla puts the “from code to prompts” approach into practice with a no-code platform. It detects schemas, suggests mappings, and generates transformations from plain-language instructions or simple configurations.

- Governance Automation: Nexla provides features such as role-based access controls and validation layers. These reduce the risk of unauthorized access and unintended data changes, which supports faster pipeline creation with less manual oversight.

- Seamless Connectivity: Nexla connects to legacy systems, APIs, and diverse data formats (CSV, JSON, XML, etc.), allowing teams to unify data from disparate sources without custom integrations or brittle connectors.

- Context-Aware Automation: Nexla uses metadata and lineage to generate accurate mappings and transformations. This helps preserve what each field actually means across sources and improves reliability when constructing pipelines.

- Inclusive Design: Nexla’s approach encourages non-technical roles, such as analysts, product managers, and governance leads, to take on technical tasks and data operations without coding skills. This promotes cross-functional collaboration, accessibility, and timely problem resolution.

Conclusion

Shifting from code to prompts streamlines processes, boosts consistency, and opens participation beyond specialists. This transition accelerates insight extraction and fosters more efficient collaboration.

Governance risks, data complexity, and skill gaps still slow this shift. Once these challenges are addressed, teams can build scalable, AI-ready pipelines.

Data engineering is entering a new era that is powered by prompts, built for everyone, and driven by intelligence, and with Nexla, it’s already here. Try Nexla today and experience the future of data engineering.

Ready to Experience the Future of Data Engineering?

Contact us today for a custom demo or try Express.dev, the conversational data engineering platform.

FAQs

What does “from code to prompts” mean in data engineering?

Instead of writing complex code for ETL workflows, teams can now use prompt engineering—crafting natural language instructions—to build data pipelines. AI models automatically generate transformations, mappings, and quality checks from plain English descriptions. This reduces development time from hours to seconds and makes data engineering accessible to non-technical users like analysts and business stakeholders.

What are the main challenges of using AI for data engineering?

Key challenges include data governance risks from improper access controls, prompt engineering skill gaps (crafting effective prompts requires data engineering knowledge), lack of contextual understanding without proper metadata, poor data mapping accuracy due to inconsistent naming, and limitations with complex legacy systems. Success requires platforms that automate governance, provide context-aware intelligence, and simplify prompt creation.

How can AI automate schema detection and data mapping?

Engineers prompt LLMs to analyze data sources, identify fields in unstructured data, and recommend normalization strategies. For mapping, generative AI learns relationships between fields across systems even when schema names differ. However, accuracy depends on proper metadata and lineage tracking to capture the true meaning of data across diverse sources.

How much time do data engineers spend on data preparation?

Data engineers can spend up to 80% of their time on data preparation tasks like cleaning, integration, and validation, leaving as little as 20% for strategic work. AI-powered platforms automate these repetitive tasks, allowing teams to generate production-ready pipelines in minutes rather than weeks.

How does Nexla simplify AI-driven data engineering?

Nexla provides a no-code platform with automated governance, seamless connectivity to legacy systems and APIs, context-aware automation using metadata and lineage, and an inclusive design that enables non-technical users to build pipelines. It transforms natural language instructions into production-ready, AI-ready pipelines while maintaining enterprise-grade governance and scalability.