The Era of “Best-of-Breed” Just Officially Ended

The Fivetran-dbt merger marks more than just two companies combining forces. It’s the clearest signal yet that the “modern data stack” approach—the idea that you should assemble your data infrastructure from best-of-breed point solutions—has fundamentally failed.

For the past decade, the promise was compelling: choose the best connector platform, the best transformation tool, the best orchestrator, and the best BI layer. Mix, match, and customize to your heart’s content. Freedom! Flexibility! Innovation!

The reality? Tool sprawl became unmanageable. Integration nightmares multiplied. Costs became impossible to predict. And data teams spent more time maintaining their Rube Goldberg-esque infrastructure than actually delivering insights.

Now the market is correcting—hard. Microsoft has Fabric. Databricks has Lakeflow. Snowflake keeps expanding its capabilities. And Fivetran just merged with dbt in what one commentator called “scrambling to catch up” with the platform giants.

The modern data stack is dead. But what comes next? And how should data leaders navigate this transition?

The Rise: How the Modern Data Stack Won Our Hearts

To understand why it failed, we need to remember why it succeeded in the first place.

The Dark Ages: Legacy ETL

Before the modern data stack, enterprise data integration was dominated by monolithic, expensive platforms like Informatica, Talend, and IBM DataStage. These tools required:

- Months-long implementation projects

- Expensive consultants for every change

- Complex licensing models

- Deployment on on-premises infrastructure

For a typical data integration project, you’d wait weeks for hardware provisioning, months for implementation, and pay six-figure license fees. Innovation moved at the speed of enterprise procurement.

The Cloud Revolution

The emergence of cloud data warehouses (Redshift, Snowflake, BigQuery) changed everything. Suddenly you could spin up petabyte-scale analytics infrastructure in minutes, not months.

But you still needed to get data into these warehouses. The modern data stack emerged to solve this problem:

Fivetran and Stitch offered push-button connectors. Point, click, and your Salesforce data starts flowing to Snowflake. No custom code required.

dbt revolutionized transformation. Instead of writing stored procedures, you could treat transformations like software development—version control, testing, documentation, and CI/CD.

Airflow and Dagster provided open-source orchestration that you could customize infinitely.

Looker and Mode modernized business intelligence with code-based approaches.

The Promise: Best-of-Breed Freedom

The pitch was irresistible:

- Freedom of choice: Swap out any component without rebuilding your entire stack

- Innovation velocity: Each vendor focused on doing one thing excellently

- Cost efficiency: Pay only for what you need, scale what you use

- Community-driven: Open source at the core, with passionate communities driving innovation

For a few years, it worked beautifully. Data teams felt empowered. Startup valuations soared. Conference talks celebrated the “composability” of the stack.

Fivetran raised money at a $5.6 billion valuation. dbt Labs hit $4.2 billion. The ecosystem was thriving.

The Fall: Where It All Went Wrong

But cracks started appearing. And they turned into chasms.

Tool Sprawl Became Unmanageable

What started as “best-of-breed” devolved into “death by a thousand tools.”

A typical modern data stack in 2024 might include:

- Ingestion: Fivetran, Airbyte, custom Python scripts

- Storage: Snowflake or Databricks

- Transformation: dbt, custom SQL, Python notebooks

- Orchestration: Airflow or Dagster

- Quality: Great Expectations, Monte Carlo, or Datafold

- Catalog: Alation or Collibra

- BI: Looker, Tableau, and Power BI (because different teams have preferences)

- Reverse ETL: Census or Hightouch

That’s 10+ vendors. Ten sets of contracts. Ten renewal cycles. Ten support organizations. Ten sets of monitoring and alerting to configure. Ten systems that need IAM integration.

The cognitive load on data teams became crushing.

Integration Complexity

The modern data stack promised that these tools would “just work together.” In practice, integration required constant manual effort:

- Authentication sprawl: Each tool needed credentials for every system

- Overlapping functionality: dbt can orchestrate some workflows, but so can Airflow—which one handles what?

- Metadata fragmentation: Lineage and cataloging lived in multiple systems with no single source of truth

- Version conflicts: Updates to one tool broke integrations with others

Data engineers spent more time being “glue code developers” than actually building data products.

Cost Unpredictability

Usage-based pricing sounds great in theory. But when you have eight or more vendors, each with its own pricing model, forecasting budgets becomes impossible:

- Fivetran charges by Monthly Active Rows, but in 2025 it began counting them per connection rather than across the whole account

- Snowflake charges by compute and storage—but queries you didn’t even know were running can spike your bill

- dbt Cloud added model run pricing in 2025

- Data quality tools charge by table or by test execution

- Reverse ETL charges by records synced

Finance teams started rejecting data platform investments because the costs were impossible to predict or control. One viral tweet showed a $60K monthly Snowflake bill for a mid-sized company—most of it from inefficient queries nobody knew were running.

The Maintenance Burden

Every tool required ongoing care and feeding:

- Monitoring and alerting configuration

- Incident response procedures

- Upgrade management (breaking changes were common)

- Security patching

- Performance tuning

- Cost optimization

What was supposed to be “low maintenance SaaS” turned into a full-time job for multiple people.

One data leader put it bluntly: “We have eight data engineers. Three of them spend 80% of their time just keeping the stack running. That’s not what I hired them for.”

The Platform Giants Moved Fast

While modern data stack companies fought over their narrow domains, Databricks and Snowflake systematically added capabilities:

Databricks added:

- Built-in ingestion (Auto Loader)

- Unity Catalog for governance

- SQL analytics alongside notebooks

- MLflow for ML lifecycle

- Lakeflow for orchestration

Snowflake added:

- Snowpipe for ingestion

- Streams and Tasks for transformation

- Built-in data sharing

- Snowpark for Python, Java, and Scala

- Native applications marketplace

Suddenly, the “best-of-breed” tools were competing against “free” (bundled) alternatives from the platforms customers already used. And “good enough + free + integrated” started beating “best + expensive + requires integration.”

The Consolidation Wave: Market Correction in Real-Time

The market is now consolidating rapidly. The Fivetran-dbt merger is just one example:

Microsoft Fabric

Microsoft’s comprehensive data platform integrates:

- Data engineering

- Data science

- Real-time analytics

- Business intelligence

- Data integration

All under one unified experience with shared governance, security, and costs.

Databricks’ Lakeflow

Recently announced, Lakeflow provides orchestration, data quality, and lineage natively within Databricks—eliminating the need for external orchestration tools.

Snowflake’s Expansion

Snowflake continues systematically building out capabilities to reduce dependencies on external tools:

- Snowflake Native Apps for third-party integrations

- Snowpipe Streaming for real-time ingestion

- Dynamic Tables for declarative transformations

- Snowpark Container Services for custom compute

The M&A Frenzy

Acquisitions are accelerating:

- Fivetran acquired HVR (database replication)

- Fivetran acquired Census (reverse ETL)

- Fivetran acquired Tobiko Data/SQLMesh (transformation)

- And now: Fivetran merging with dbt

As one industry observer noted: “The entire data industry is consolidating. Everyone’s building bigger, more integrated platforms. The modern data stack’s original promise of mix-and-match tools is dead.”

Three Paths Forward for Data Leaders

The modern data stack is dead. But you still need to make decisions about your data infrastructure. You have three paths:

Path 1: All-In on Platform Giants (Databricks or Snowflake)

The Approach: Consolidate everything onto Databricks or Snowflake and use their native capabilities for ingestion, transformation, orchestration, BI, and ML.

Pros:

- Simplicity: One vendor, one contract, one support team

- Integration: Everything works together by design

- Cost consolidation: One bill (though it might be large)

- Performance: Optimized for their platform

- Future-proofing: These companies are investing billions in R&D

Cons:

- Ultimate vendor lock-in: Your entire data infrastructure depends on one company

- Cost escalation: With no alternatives, you have limited negotiating leverage

- Pace of innovation: May not match specialized tools in specific areas

- Risk concentration: Platform outages impact everything

- Data portability: Migrating away becomes nearly impossible

Best For: Organizations that value simplicity over flexibility and have confidence in their chosen platform’s long-term direction.

Path 2: Merged Point Solutions (Fivetran-dbt and Similar)

The Approach: Use larger vendors that have consolidated multiple capabilities through M&A. Think Fivetran-dbt, or potentially future combinations.

Pros:

- Some integration: Better than completely separate tools

- Specialized expertise: These companies know their domains deeply

- Platform agnostic: Works with multiple data warehouses

- Familiar tools: Teams already know and love these products

Cons:

- Duct tape integration: Acquired products rarely integrate seamlessly

- Still missing pieces: Orchestration, governance, and other capabilities still require additional tools

- Pricing risk: History shows M&A leads to price increases

- Cultural conflicts: Acquired companies often lose their mojo

- Vendor risk: Concentrated into fewer, larger vendors

Best For: Organizations with significant investment in these specific tools who want gradual evolution rather than rip-and-replace.

Path 3: Purpose-Built Converged Platforms

The Approach: Choose platforms designed from day one to provide end-to-end integration capabilities in a unified architecture—but without the platform lock-in of Databricks or Snowflake.

Pros:

- True unification: Not bolted together after acquisition

- Complete functionality: Ingestion, transformation, orchestration, governance, and activation

- Vendor independence: Works with any warehouse, lake, or destination

- Transparent pricing: Tier-based models with predictable costs

- AI-native: Built-in support for GenAI, RAG, vector databases

- Data product architecture: Portable abstractions prevent lock-in

- Faster time-to-value: Purpose-built for speed and self-service

Cons:

- Migration required: Can’t just add to existing tools

- Learning curve: Teams need to learn a new platform

- Market risk: Smaller vendors than platform giants

- Integration: May need connectors for niche sources

Best For: Organizations building or modernizing data infrastructure who want best-of-breed capabilities without best-of-breed complexity.

The AI Factor: Why Infrastructure Matters More Than Ever

The explosion of AI use cases makes the infrastructure question even more critical.

AI’s Unique Requirements

Generative AI and agentic systems demand:

Real-time, Fresh Data: AI agents need current information to make decisions. Batch pipelines that refresh nightly don’t cut it.

Structured and Unstructured: LLMs need access to documents, images, and other unstructured data—not just structured database tables.

Governed and Traceable: When AI makes decisions affecting customers or compliance, you need bulletproof lineage and governance.

Context-Rich Metadata: RAG systems need rich metadata to retrieve relevant context. Fragmented metadata across tools makes this nearly impossible.

Low Latency: AI applications can’t wait minutes for data pipelines. Sub-second response times are expected.

Why the Modern Data Stack Fails AI

The tool sprawl of the modern data stack creates critical problems for AI:

Metadata Fragmentation: Lineage lives in dbt, catalog in Alation, quality in Monte Carlo, and usage in your BI tool. Stitching this together for AI context is a nightmare.

Unstructured Data Gaps: Most modern data stack tools focus on structured data from databases and SaaS apps. Processing documents, images, and video requires entirely separate pipelines.

No Native RAG Support: Retrieval-Augmented Generation requires vector databases, embedding generation, and semantic search. Adding these capabilities to your existing stack means… more tools.

Governance Gaps: When AI needs to explain its decisions, you need end-to-end lineage from raw source through all transformations to the vector database. With data spread across multiple tools, this is nearly impossible.
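To make the lineage problem concrete, here is a minimal sketch of what stitching it together involves. It assumes each tool can export its lineage as a simple mapping from a dataset to its direct parents; the three edge maps and all dataset names are hypothetical, and real tools expose lineage in different formats that would need normalizing first.

```python
from collections import deque

# Hypothetical lineage edges exported from three separate tools.
# Each maps a dataset to the datasets it was built from.
ingestion_edges = {"raw.tickets": ["salesforce.cases"]}
transform_edges = {"analytics.ticket_summaries": ["raw.tickets"]}
embedding_edges = {"vectors.ticket_index": ["analytics.ticket_summaries"]}


def merge_lineage(*sources: dict[str, list[str]]) -> dict[str, list[str]]:
    """Combine per-tool edge maps into one upstream graph."""
    merged: dict[str, list[str]] = {}
    for source in sources:
        for node, parents in source.items():
            merged.setdefault(node, []).extend(parents)
    return merged


def trace_upstream(graph: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk from a dataset back to everything it depends on."""
    seen, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for parent in graph.get(node, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


graph = merge_lineage(ingestion_edges, transform_edges, embedding_edges)
print(trace_upstream(graph, "vectors.ticket_index"))
```

The traversal itself is trivial. The hard part in a fragmented stack is the first step: getting every tool to agree on dataset identifiers so the edge maps can be merged at all.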

What AI-Native Infrastructure Looks Like

Platforms designed for the AI era provide:

- Unified structured and unstructured processing: Handle database records and PDF documents in the same pipeline

- Built-in vector database support: Generate embeddings, store in vector DBs, and keep them fresh automatically

- Agentic frameworks: Tools for building AI agents that can access and reason over your data

- Model evaluation and monitoring: Track AI model performance alongside data quality

- Continuous RAG: Automatically update vector indexes as source documents change

This isn’t something you can bolt onto a legacy modern data stack. It requires infrastructure designed with AI as a first-class citizen.
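As an illustration of the “Continuous RAG” idea, the sketch below shows one common way to decide which documents need re-embedding: fingerprint each document and compare against what the index saw last time. This is an assumption about how such a feature could work, not a description of any particular platform; the function names, document IDs, and sample text are all hypothetical, and the actual embedding and vector-store calls are left out.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_index_update(
    documents: dict[str, str],
    indexed_hashes: dict[str, str],
) -> tuple[list[str], list[str]]:
    """Compare current documents with what the index last saw.

    Returns (ids to embed or re-embed, ids to delete from the index).
    """
    to_embed = sorted(
        doc_id
        for doc_id, text in documents.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    )
    to_delete = sorted(
        doc_id for doc_id in indexed_hashes if doc_id not in documents
    )
    return to_embed, to_delete


# Hypothetical state: what the vector index recorded on the previous run.
indexed = {
    "policy.pdf": content_hash("Claims must be filed within 30 days."),
    "faq.md": content_hash("Contact support by email."),
    "old_terms.pdf": content_hash("Superseded terms."),
}

# Current source documents: one edited, one unchanged, one new, one removed.
current = {
    "policy.pdf": "Claims must be filed within 60 days.",
    "faq.md": "Contact support by email.",
    "pricing.md": "Plans start at the basic tier.",
}

to_embed, to_delete = plan_index_update(current, indexed)
print("embed:", to_embed)
print("delete:", to_delete)
```

Only the edited and new documents are queued for embedding, so the index stays fresh without re-processing everything on each run.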

Making Your Decision: A Framework

Here’s how to evaluate which path is right for your organization:

Assess Your Current State

Tool Count Audit: List every tool in your data stack. If you have more than 5 vendors, you’re feeling the pain of the modern data stack.

Time Allocation Analysis: Where do your data engineers spend time? If more than 30% goes to “keeping the lights on,” you have a maintenance problem.

Cost Predictability: Can you forecast next quarter’s data platform costs within 10%? If not, you have a pricing model problem.

Change Velocity: How long does it take to onboard a new data source? If it’s weeks, you have an agility problem.
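The four checks above can be written down as a small script, which makes it easier to rerun them each quarter. This is a rough sketch: the thresholds come from the text, but the field names are invented for illustration, and “weeks” is interpreted here as seven days or more, which is an assumption.

```python
from dataclasses import dataclass


@dataclass
class StackSnapshot:
    vendor_count: int         # distinct vendors in the data stack
    maintenance_share: float  # fraction of engineering time on upkeep, 0.0 to 1.0
    forecast_cost: float      # what you predicted for last quarter
    actual_cost: float        # what you actually paid
    onboarding_days: float    # typical time to add a new data source


def assess(s: StackSnapshot) -> list[str]:
    """Return the names of the checks that the snapshot fails."""
    findings = []
    if s.vendor_count > 5:
        findings.append("tool sprawl")
    if s.maintenance_share > 0.30:
        findings.append("maintenance problem")
    # Forecast error relative to what was actually spent.
    forecast_error = abs(s.actual_cost - s.forecast_cost) / s.actual_cost
    if forecast_error > 0.10:
        findings.append("pricing model problem")
    # "Weeks" is read here as seven days or more (an assumption).
    if s.onboarding_days >= 7:
        findings.append("agility problem")
    return findings


sprawling = StackSnapshot(
    vendor_count=8,
    maintenance_share=0.45,
    forecast_cost=100_000,
    actual_cost=130_000,
    onboarding_days=21,
)
print(assess(sprawling))
```

A snapshot that returns an empty list passes all four checks; anything else gives you a short list of problems to bring into the requirements discussion.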

Define Your Requirements

Must-Haves:

- Real-time or batch?

- Structured only or structured + unstructured?

- What level of self-service for non-engineers?

- AI/ML use cases today and in 12 months?

- Compliance and governance requirements?

Deal-Breakers:

- Is lock-in to a specific warehouse acceptable?

- Maximum vendor concentration risk?

- Budget constraints?

- Performance requirements?

Evaluate Your Options

For Platform Giants (Databricks/Snowflake):

- Do you have confidence in their long-term vision?

- Can you accept the vendor concentration risk?

- Is their pace of innovation sufficient for your needs?

For Merged Point Solutions (Fivetran-dbt):

- Are these specific tools critical to your team’s workflows?

- Can you manage the still-missing pieces (orchestration, etc.)?

- Are you prepared for potential pricing changes post-merger?

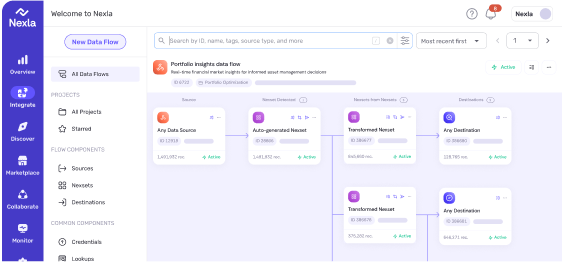

For Converged Platforms (Nexla, others):

- Does their unified architecture address your pain points?

- Is their vendor-independent approach valuable to you?

- Does their AI-native design align with your roadmap?

Calculate True Costs

Don’t just compare license fees. Include:

Direct Costs:

- Platform licenses

- Storage and compute

- Support tiers

- Training

Indirect Costs:

- FTE time on maintenance and integration

- Incident response and firefighting

- Duplicate efforts across teams

- Delayed projects due to infrastructure complexity

Opportunity Costs:

- Projects not started because infrastructure can’t support them

- Competitive disadvantage from slow time-to-insight

- Talent retention issues when engineers hate the tooling

One data leader calculated that their “free” Airflow deployment actually cost $400K/year when including the two engineers maintaining it.
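The arithmetic behind a figure like that is simple enough to sketch. The function below adds people cost to direct cost; the $200K fully loaded cost per engineer is an assumption, chosen because two full-time maintainers at that rate reproduce the $400K/year figure, and infrastructure spend is set to zero only to keep the example minimal.

```python
def true_annual_cost(
    license_fees: float,
    infra_costs: float,
    maintainers: float,
    loaded_cost_per_engineer: float,
) -> float:
    """Direct costs plus the cost of the people who keep the tool running."""
    direct = license_fees + infra_costs
    indirect = maintainers * loaded_cost_per_engineer
    return direct + indirect


# Open-source Airflow: no license fee, infrastructure ignored for simplicity.
# The per-engineer cost is an assumed figure, not one stated in the article.
airflow_cost = true_annual_cost(
    license_fees=0,
    infra_costs=0,
    maintainers=2,
    loaded_cost_per_engineer=200_000,
)
print(f"${airflow_cost:,.0f} per year")
```

Passing a fractional value for `maintainers` (for example 0.5 for a half-time owner) lets you run the same comparison for tools that need less attention.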

Case Studies: What Works in Practice

Insurance Company: From 6 Months to 3 Days

A large insurer was onboarding new data partners (carriers, third-party administrators) using a traditional modern data stack: Fivetran for ingestion, dbt for transformation, custom Python for special cases.

The Problem: Each partner had unique data formats, delivery methods, and schemas. Onboarding took 6 months on average—mostly spent building custom mappings and transformations.

The Solution: Migrated to a converged platform with data product architecture. Partners’ data is automatically transformed into standardized Nexsets (data products) regardless of source format.

The Results:

- Partner onboarding: 6 months → 3-5 days (a reduction of roughly 97-98%)

- Automated 60-70% of manual integration tasks

- Improved claims processing efficiency by 30%

- Enabled AI copilot for natural-language queries

Retail Company: 10x Productivity Gain

An ecommerce company had the full modern data stack: Fivetran, dbt, Airflow, Snowflake, plus various data quality and cataloging tools.

The Problem: Data engineers spent 70% of their time on maintenance. New analytics use cases took weeks to implement. Business users couldn’t self-serve.

The Solution: Consolidated onto a purpose-built integration platform with self-service capabilities for business users.

The Results:

- 10x greater productivity (business users can build pipelines)

- 50% reduction in data engineering headcount needs

- Time-to-insight for new analytics: weeks → hours

- 40% reduction in platform costs

Financial Services: Breaking Free from Lock-In

A fintech company built entirely on Databricks but felt vulnerable to vendor lock-in and pricing pressure.

The Problem: Their entire data infrastructure, ML pipelines, and analytics depended on one vendor. Negotiating renewals became increasingly difficult as they had no credible alternatives.

The Solution: Implemented a converged integration platform with vendor-independent data products. Can now run workloads on Databricks, Snowflake, or Trino without rewriting pipelines.

The Results:

- 40% negotiated discount on next Databricks renewal (credible exit strategy)

- Workload portability across platforms

- Reduced migration risk

- Better architectural optionality for future decisions

The Bottom Line for Data Leaders

The modern data stack failed because it promised flexibility but delivered complexity. The market is consolidating, and you need a strategy.

The Fivetran-dbt merger is a symptom, not a solution. Two companies that built separate products are trying to compete with purpose-built platforms by stapling their offerings together. It might help them tell a better story to investors, but it doesn’t solve the fundamental problems that killed the modern data stack.

You have choices:

- Go all-in on a platform giant and accept the lock-in

- Stick with merged point solutions and manage the gaps

- Choose purpose-built integration platforms that provide unification without lock-in

The right choice depends on your specific context. But making no choice—just letting your current tools dictate your future—is the worst option of all.

The modern data stack is dead. What you build next will define your data capabilities for the next decade. Choose wisely.

Ready to Evaluate Your Options?

Contact us for a systematic evaluation of your current stack, strategic recommendations, and a migration plan.