Success now hinges on how well businesses share data beyond their walls. Seamless intercompany data flow fuels smarter decisions, faster collaboration, and real growth. In this new era, connection is the key to competitiveness.

At AWS re:Invent 2023, GenAI took center stage in CEO Adam Selipsky’s keynote address. To harness the power of generative AI, it’s crucial to navigate potential pitfalls and avoid the classic “garbage-in, garbage-out” scenario that often plagues AI models. A recent McKinsey study projects that GenAI could add a staggering $2.6 trillion to $4.4 trillion in value annually to the global economy. However, it also highlights that over 90% of data created in the future will be unstructured.

Traditional data integration patterns are ill-equipped for the unstructured data that GenAI depends on, which typically ranges from videos and chats to code and documents.

This underscores the unprecedented opportunities GenAI presents, but also the need for meticulous preparation, with systems, processes, and tools tailored to the task.

To adopt GenAI successfully, focus on the following capabilities:

GenAI supports a diverse array of use cases. Rather than taking a vague, catch-all approach, deliberately choose and plan for specific use cases tailored to your business. McKinsey’s AI use case mapping for banking is an excellent example: it lays out a wide range of applications and plots them by feasibility and impact.
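McKinsey’s mapping itself is not reproduced here. As a rough illustration of ranking use cases by feasibility and impact, the Python sketch below scores use cases on an assumed 1 to 5 scale, places each in a simple 2x2 grid, and sorts by the product of the two scores. The use case names, scores, threshold, and quadrant labels are all hypothetical, chosen only to show the mechanics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    feasibility: int  # hypothetical scale: 1 (hard) to 5 (easy)
    impact: int       # hypothetical scale: 1 (low) to 5 (high)


def quadrant(uc: UseCase, threshold: int = 3) -> str:
    """Place a use case in a simple 2x2 feasibility/impact grid."""
    high_feasibility = uc.feasibility >= threshold
    high_impact = uc.impact >= threshold
    if high_feasibility and high_impact:
        return "quick win"
    if high_impact:
        return "strategic bet"
    if high_feasibility:
        return "nice-to-have"
    return "deprioritize"


def prioritize(cases: list[UseCase]) -> list[tuple[str, str]]:
    """Rank by feasibility x impact (ties broken by impact), highest first."""
    ranked = sorted(
        cases, key=lambda c: (c.feasibility * c.impact, c.impact), reverse=True
    )
    return [(c.name, quadrant(c)) for c in ranked]


# Hypothetical use cases with made-up scores, for illustration only
cases = [
    UseCase("Customer-service chatbot", feasibility=4, impact=4),
    UseCase("Fraud-alert summaries", feasibility=2, impact=5),
    UseCase("Internal FAQ search", feasibility=5, impact=2),
    UseCase("Legacy-report reformatting", feasibility=1, impact=2),
]
for name, bucket in prioritize(cases):
    print(f"{name}: {bucket}")
```

Multiplying the two scores is just one simple way to combine them; a weighted sum, or separate thresholds per business unit, would be equally reasonable choices.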

Data is the fuel behind the GenAI machine, and lacking the right tools will cost your organization time and money. Because the landscape is evolving quickly and your use cases could look different in just a few quarters, evaluate data tools for flexibility, and make sure they have features to handle personally identifiable information (PII) for any use case involving private or sensitive data. Most of the time, buying flexible, purpose-built tools will save time and money compared with building from scratch in-house. McKinsey offers an illustrative data architecture with some sample components to enable AI:

Within your data architecture, make sure you can implement the five key components of a data architecture built for AI:

Before undertaking GenAI initiatives, map out a data governance policy appropriate for your data and business. Plan to acquire tools that track and search data lineage, and prioritize transparency in the data flows that feed AI models so you can clearly see what data is being used and where. That visibility also helps when triaging hallucinations and model drift, because you can see which data was used to train those models. You will also need a plan for sensitive data and PII: automatic PII detection, plus tools that hash or hide sensitive values along the way, before AI models and other stakeholders can view them. Finally, metadata tagging and tracking also factor into data governance, because you need to be able to find all of your data and track its movement and lineage across a variety of flows.
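To make “automatic PII detection” and “hash and hide” more concrete, here is a minimal Python sketch, not a production PII detector. It assumes two simple regex patterns (emails and US-style phone numbers) and replaces each match with a keyed hash (HMAC-SHA256) token, so the same value always maps to the same token without exposing the raw data. It also returns which PII types it found, which could feed a lineage or audit log. The pattern set, token format, and salt handling are illustrative assumptions; keyed hashing is pseudonymization, not full anonymization.

```python
import hashlib
import hmac
import re

# Illustrative patterns only: real PII detection needs much broader coverage
# (names, addresses, national IDs, international phone formats, and so on).
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def pseudonymize(value: str, salt: str) -> str:
    """Map a value to a stable keyed-hash token (same input and salt, same token)."""
    digest = hmac.new(salt.encode("utf-8"), value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:12]


def mask_pii(text: str, salt: str) -> tuple[str, list[str]]:
    """Replace detected PII with tokens; also return the PII types found."""
    found = []
    for pii_type, pattern in PII_PATTERNS.items():
        # Bind pii_type as a default argument so each lambda keeps its own label
        text, count = pattern.subn(
            lambda m, t=pii_type: f"<{t}:{pseudonymize(m.group(0), salt)}>", text
        )
        if count:
            found.append(pii_type)
    return text, found


masked, found = mask_pii(
    "Contact jane.doe@example.com or 555-123-4567.", salt="demo-salt"
)
print(masked)
print(found)  # PII types detected, e.g. for an audit or lineage record
```

In practice the salt would be a managed secret rather than a literal string, and detection would usually come from a dedicated tool rather than hand-written regexes.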

At enterprise scale, data storage is a key decision: the wrong fit can cost millions of dollars, while the right fit enables incredible discoverability, collaboration, and accessibility. Data for GenAI models rarely sits in the neat rows and columns of relational databases, so choosing storage that fits your specific data and can be accessed efficiently is crucial. For data storage, also think about timing: do your AI use cases need to update in real time? If so, which storage and data movement capabilities support real-time model updates? Across all data stores, metadata tagging and cataloging greatly help in mapping the data journey, so teams can find the data they need.
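As a rough illustration of how metadata tagging and cataloging help teams find data, the Python sketch below keeps a toy in-memory catalog. Each entry records a storage location, a format, whether it refreshes in batch or in real time, and a set of tags, and `find` returns the datasets that carry every requested tag. The class names, fields, dataset names, and `s3://example-bucket/...` locations are hypothetical; a real deployment would use a dedicated data catalog product rather than code like this.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DatasetEntry:
    name: str
    location: str     # hypothetical URI or table name
    data_format: str  # e.g. "json", "pdf", "table"
    refresh: str      # "batch" or "real-time"
    tags: set[str] = field(default_factory=set)


class Catalog:
    """Toy in-memory metadata catalog keyed by dataset name."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def find(self, *tags: str, refresh: Optional[str] = None) -> list[str]:
        """Sorted names of datasets with every requested tag, optionally by refresh mode."""
        wanted = set(tags)
        return sorted(
            e.name
            for e in self._entries.values()
            if wanted <= e.tags and (refresh is None or e.refresh == refresh)
        )


catalog = Catalog()
catalog.register(DatasetEntry(
    "support_chats", "s3://example-bucket/chats/", "json", "real-time",
    {"customer", "pii", "unstructured"},
))
catalog.register(DatasetEntry(
    "product_manuals", "s3://example-bucket/manuals/", "pdf", "batch",
    {"product", "unstructured"},
))
catalog.register(DatasetEntry(
    "orders", "warehouse.sales.orders", "table", "batch",
    {"customer", "structured"},
))

print(catalog.find("unstructured"))
print(catalog.find("customer", refresh="real-time"))
```

Recording the refresh mode alongside the tags lets the same lookup answer the timing question raised above, such as which customer data is available in real time.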

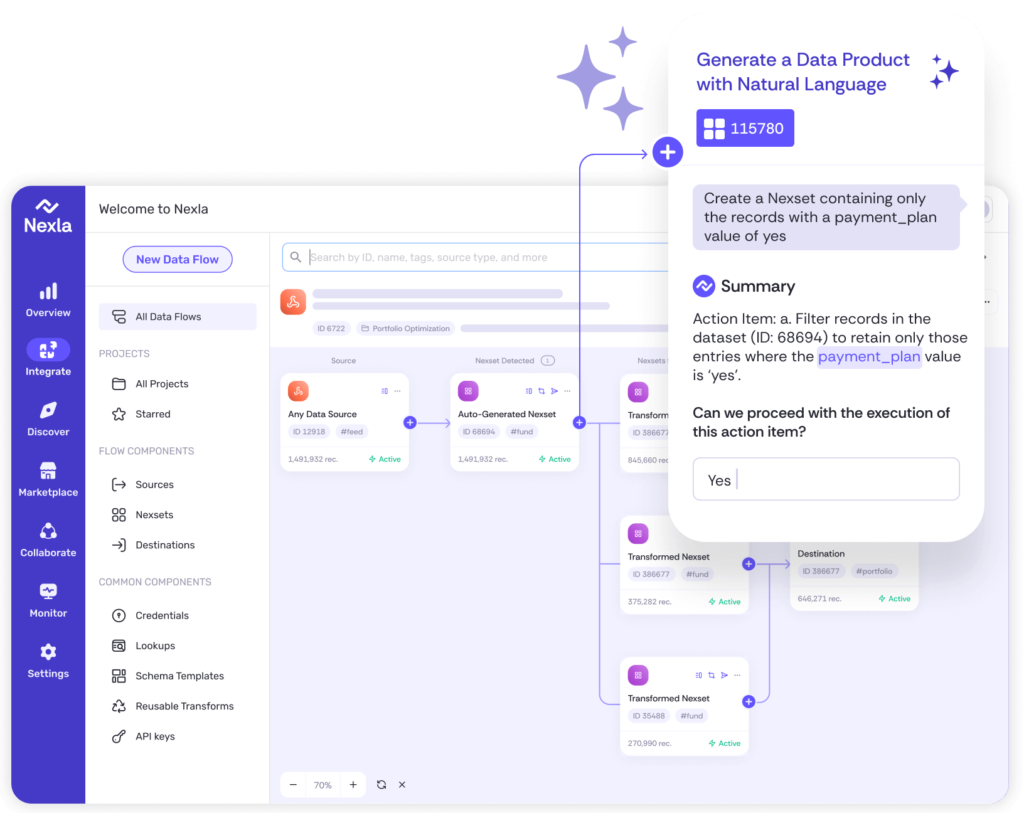

Plan for and acquire tools and platforms that move all that data efficiently and easily between your data producers, data storage, and AI models. With AI in particular, unstructured data adds complexity that traditional ETL/ELT platforms struggle to handle. Beyond format, data velocity also matters if any use case requires fresh data, up to real time. Choose a data flow platform carefully: it should handle unstructured data, connect to all your data storage solutions, deliver output to LLMs or other models, and work at whatever speed each use case calls for.
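One step that often appears in flows feeding unstructured text to LLMs is splitting long documents into overlapping chunks, each tagged with its source so lineage stays traceable. The Python sketch below shows that step under simple assumptions: chunk size is counted in whitespace-separated words (a rough stand-in for model tokens), the chunk and overlap sizes are arbitrary, and the record fields (`doc_id`, `chunk`, `text`) are hypothetical names. Extracting text from PDFs, chats, or video transcripts is assumed to have happened upstream.

```python
from typing import Iterator


def chunk_words(text: str, size: int = 200, overlap: int = 20) -> Iterator[str]:
    """Yield overlapping word-window chunks; a generator, so chunks can stream downstream."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    for start in range(0, len(words), step):
        yield " ".join(words[start:start + size])
        if start + size >= len(words):
            break  # this window already reached the end of the text


def to_records(doc_id: str, text: str, size: int = 200, overlap: int = 20) -> list[dict]:
    """Attach source metadata to each chunk so its lineage stays traceable."""
    return [
        {"doc_id": doc_id, "chunk": i, "text": chunk}
        for i, chunk in enumerate(chunk_words(text, size, overlap))
    ]


sample = " ".join(f"w{i}" for i in range(10))
for record in to_records("manual-001", sample, size=4, overlap=1):
    print(record)
```

Because `chunk_words` is a generator, the same logic could sit in a streaming pipeline as well as a batch job; production systems would typically measure chunk length with the target model’s tokenizer instead of a word count.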

Finally, throughout this initiative, it’s crucial to monitor GenAI’s impact and report it to stakeholders. This tracking and measurement will not only demonstrate its value but also provide insights for future AI experiments. Continued measurement will also help identify the most valuable components of your company’s data processes for AI.
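A simple way to start measuring impact is to compare each key metric against a pre-GenAI baseline. The Python sketch below computes the relative change for each metric and flips the sign for metrics where lower is better, so a positive number always means improvement. The metric names and pilot numbers are hypothetical, chosen only to illustrate the calculation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    name: str
    baseline: float  # value measured before the GenAI rollout
    current: float   # value measured after the rollout
    higher_is_better: bool = True

    def improvement_pct(self) -> float:
        """Relative change vs. baseline in percent, signed so positive means better."""
        if self.baseline == 0:
            raise ValueError(f"baseline for {self.name!r} must be non-zero")
        change = (self.current - self.baseline) / self.baseline * 100
        return change if self.higher_is_better else -change


# Hypothetical pilot numbers, for illustration only
kpis = [
    Kpi("Average handle time (minutes)", baseline=10.0, current=8.0,
        higher_is_better=False),
    Kpi("First-contact resolution rate (%)", baseline=60.0, current=66.0),
]
for kpi in kpis:
    print(f"{kpi.name}: {kpi.improvement_pct():+.1f}%")
```

Keeping the direction of each metric explicit avoids a common reporting mistake, where a drop in handle time is read as a regression.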

GenAI can significantly impact your business. Integrating effectively from the outset, with a focus on the capabilities above, is crucial for success. This proactive approach helps ensure that AI use cases are valuable and functional, avoiding the pitfalls of a “garbage-in, garbage-out” scenario.
