Success now hinges on how well businesses share data beyond their walls. Seamless intercompany data flow fuels smarter decisions, faster collaboration, and real growth. In this new era, connection is the key to competitiveness.

This post is part of a five-blog series on how businesses can plan and build a data-driven future. The series walks readers through the basics, fundamental dynamics, and nuances of modern data management and processing. This blog describes five approaches to data processing that anyone working with data should consider. The next four posts cover a deep dive into ELT, why data is broken for enterprises, the current state of the data ecosystem, and the future of data, with a look at emerging concepts like data fabric and data mesh.

As enterprises double down on being data-driven, data is being used for analytics, data science, and operations across every part of the business, be it product, sales, marketing, logistics, or HR. Different applications generate data in different ways and need to consume it in different ways.

How is data processing done today? This first part of the series takes a deep look at the different ways data makes its way to data-driven applications.

The purpose of any application is to serve a user's needs. An application both consumes and produces data. What is the right data system for such an application: a database, files, an API, or streams? Developers need to make this decision based on three key criteria: schema, format, and velocity.

Based on these three criteria, developers choose the right data system, whether a database, files, an API, or streams, for both the data consumption and the data production steps.

Data produced by one application or system is often consumed by another application or system, whether for analytics, data science, or operational needs. An example of an operational use is when data from a store's inventory management system is used by its order management system.

In order to make data generated by one application usable in another application, two key data processing steps need to take place:

This is where E, T, and L come into play in the context of data processing:

E: Extract, or read, data from a source system, which could be a file, a database, an API, a stream, human input, or anything else.

T: Transform the data, meaning modify its attributes, schema, or format. This step can involve computations, joins, and enrichment, and in some cases also filtering, validating, and quality-checking the data.

L: Load the data into a target or destination system where it will be used. The target can be a database, data warehouse, API, SaaS service, file, email, stream, or spreadsheet; in short, anywhere the data becomes ready for consumption by another application.
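To make the three steps concrete, here is a minimal sketch of an E, T, L sequence in Python. It assumes a small CSV inventory export as the source and an in-memory SQLite database as the target; the sample data, column names, and the `extract`/`transform`/`load` function names are all hypothetical and chosen only for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source: an inventory export as CSV text (stands in for a file, API, or stream).
RAW_CSV = """sku,qty,store
A-100,5,berlin
B-200,,berlin
C-300,12,paris
"""

def extract(text):
    """E: read rows from the source system."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """T: change schema (rename fields), format (cast types), and drop invalid rows."""
    out = []
    for row in rows:
        if not row["qty"]:  # validation: skip rows with a missing quantity
            continue
        out.append({"sku": row["sku"], "quantity": int(row["qty"]), "store": row["store"].upper()})
    return out

def load(rows, conn):
    """L: write the transformed rows into the target system."""
    conn.execute("CREATE TABLE IF NOT EXISTS inventory (sku TEXT, quantity INTEGER, store TEXT)")
    conn.executemany("INSERT INTO inventory VALUES (:sku, :quantity, :store)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT sku, quantity, store FROM inventory ORDER BY sku").fetchall())
# [('A-100', 5, 'BERLIN'), ('C-300', 12, 'PARIS')]
```

Here the transformation happens before the data reaches the target, which is the ETL ordering; the styles described next rearrange or swap out these same three steps.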

Now that we understand the three steps of E, T, and L, we can construct the five styles of data processing. Each is a variation of these three steps.

| Style | Velocity | Step 1 | Step 2 | Step 3 |
|---|---|---|---|---|
| ETL | Batch or streaming data push | Extract from databases, files, and APIs | Transform schema, format, and attributes | Load into a database or file |
| ELT | Batch or streaming data push | Extract from databases, files, and APIs | Load (replicate) into a data warehouse | Transform in the database, from one table to another |
| API Integration | Batch or streaming data push | Read from an API | Transform schema, format, and attributes | Push into another API |
| API Proxy | On-demand data pull | API call | Transform schema, format, and attributes | Pull into an API |
| Data-as-a-Service | On-demand data pull | Read on demand from a database, DWH, or other source | Transform schema, format, and attributes | Pull into an API |
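The ETL and ELT rows differ mainly in where and when the transformation runs. As a rough sketch, assuming an in-memory SQLite database stands in for the data warehouse and a hard-coded list stands in for the replicated source (both hypothetical), ELT loads the raw records first and then transforms them with SQL from one table to another:

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# E + L: replicate source records as-is into a raw staging table (no transformation yet).
source_records = [
    ("A-100", "5", "berlin"),
    ("B-200", "", "berlin"),
    ("C-300", "12", "paris"),
]
conn.execute("CREATE TABLE raw_inventory (sku TEXT, qty TEXT, store TEXT)")
conn.executemany("INSERT INTO raw_inventory VALUES (?, ?, ?)", source_records)

# T: transform inside the database, reading one table and writing another.
conn.execute("""
    CREATE TABLE inventory AS
    SELECT sku,
           CAST(qty AS INTEGER) AS quantity,
           UPPER(store) AS store
    FROM raw_inventory
    WHERE qty <> ''
""")
conn.commit()

print(conn.execute("SELECT * FROM inventory ORDER BY sku").fetchall())
```

Because the raw table is kept, the transformation can be rerun or changed later without extracting from the source again; that is one reason teams reach for ELT when the warehouse has compute to spare.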

Data Push vs Pull

Push and pull are two common modes of data flow. Pull: You request data, and it is fetched and delivered instantly. For example, when you request the status of your FedEx package, the latest information is pulled instantly to tell you where your package is. Push: Data is delivered to you periodically or based on an event or rule. For example, when your package is shipped or delivered, a text message is sent to notify you.
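The package-tracking example can be sketched in a few lines of Python. The `PackageTracker` class and its methods are hypothetical and only illustrate the two modes: pull reads the current value when the consumer asks, while push calls every registered subscriber when an event occurs.

```python
from typing import Callable, Dict, List

class PackageTracker:
    """Hypothetical tracker illustrating pull (on request) vs push (on event)."""

    def __init__(self) -> None:
        self._status: Dict[str, str] = {}
        self._subscribers: Dict[str, List[Callable[[str, str], None]]] = {}

    # Pull: the consumer asks, and the latest value is looked up at request time.
    def get_status(self, package_id: str) -> str:
        return self._status.get(package_id, "unknown")

    # Push: the consumer registers once and is notified whenever the status changes.
    def subscribe(self, package_id: str, callback: Callable[[str, str], None]) -> None:
        self._subscribers.setdefault(package_id, []).append(callback)

    def update(self, package_id: str, status: str) -> None:
        self._status[package_id] = status
        for notify in self._subscribers.get(package_id, []):
            notify(package_id, status)

tracker = PackageTracker()
messages: List[str] = []
tracker.subscribe("PKG-1", lambda pid, s: messages.append(f"{pid} is now {s}"))

tracker.update("PKG-1", "shipped")   # push: the subscriber receives a notification
print(tracker.get_status("PKG-1"))  # pull: prints "shipped"
tracker.update("PKG-1", "delivered")
print(messages)
```

In a real system the callback would send the text message and the pull would be an API request, but the control flow is the same: the consumer initiates a pull, while the producer initiates a push.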

With ETL, you may need to transform before you load in cases where:

ELT, which loads first and transforms later, works well in cases where:

API integration is the choice when you are:

Streaming vs Real-time

While fast streaming and real-time may seem similar on the surface, there are key differences to know when thinking about data velocity and responsiveness. Streaming: Think of this as a queue. Data enters at the back of the queue and is consumed when it reaches the head. The time from entering the queue to being consumed can be a snap, but don't be surprised if it takes longer when the queue is very full (think traffic jam). Even 20-30 seconds can feel like an eternity when you are checking the status of your FedEx package or an online auction. Real-time: Here data is delivered instantly, typically within single-digit or tens of milliseconds. These systems are designed to avoid queues and other mechanisms with uncertain delays. Of course, a poorly designed real-time system can still get slow and give you the same bad experience as waiting in a queue.
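The queue analogy can be checked with a small simulation. This is a simplified model, not a description of any particular streaming system: it assumes a single consumer, strict first-in-first-out order, arrival times sorted ascending, and a fixed processing time per message. The `simulate_fifo` name and the 5 ms figure are hypothetical.

```python
from collections import deque
from typing import List

def simulate_fifo(arrivals_ms: List[float], service_ms: float) -> List[float]:
    """Return how long (ms) each message waits before the consumer starts on it.

    Assumes one consumer, FIFO order, sorted arrival times, and a fixed
    processing time per message; these are simplifying assumptions.
    """
    queue = deque(arrivals_ms)
    consumer_free_at = 0.0
    waits = []
    while queue:
        arrived = queue.popleft()  # the head of the queue is served first
        start = max(arrived, consumer_free_at)
        waits.append(start - arrived)
        consumer_free_at = start + service_ms
    return waits

# Light load: a message every 10 ms, 5 ms to process each, so nothing waits.
print(simulate_fifo([0.0, 10.0, 20.0, 30.0], service_ms=5.0))
# Burst: 1,000 messages arrive at once, so the last one waits behind 999 others.
burst = simulate_fifo([0.0] * 1000, service_ms=5.0)
print(burst[-1])
```

Each message is processed just as quickly in both runs; the difference in latency comes entirely from how full the queue is, which is the "traffic jam" effect described above.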

While not traditionally considered data processing, API Proxy isn't that different, except that data flows between APIs and the flow is on demand, i.e., real-time. This is ideal when:

With Data-as-a-Service, the final data consumption happens in an API and, just like with an API proxy, the data is delivered on demand.
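As a rough sketch of the Data-as-a-Service row (read on demand, transform, pull into an API), the handler below queries a database only when a request arrives and reshapes the row into a JSON response. The `orders` table, its columns, and `get_order` are hypothetical, and SQLite stands in for the database or DWH; in practice the function would sit behind an HTTP endpoint.

```python
import json
import sqlite3

# Hypothetical backing store; SQLite stands in for a database or DWH.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, total_cents INTEGER)")
conn.execute("INSERT INTO orders VALUES (42, 'shipped', 1999)")
conn.commit()

def get_order(order_id: int) -> str:
    """Handle one on-demand request: read, transform, and return the response body."""
    # Read on demand: the query runs at request time, so the response reflects current data.
    row = conn.execute(
        "SELECT id, status, total_cents FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
    if row is None:
        return json.dumps({"error": "not found"})
    # Transform schema and format: rename fields and convert cents to a decimal string.
    oid, status, total_cents = row
    return json.dumps({"orderId": oid, "status": status.upper(), "total": f"{total_cents / 100:.2f}"})

print(get_order(42))  # {"orderId": 42, "status": "SHIPPED", "total": "19.99"}
print(get_order(7))   # {"error": "not found"}
```

An API proxy would follow the same shape, except that the read step calls an upstream API instead of querying a database.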

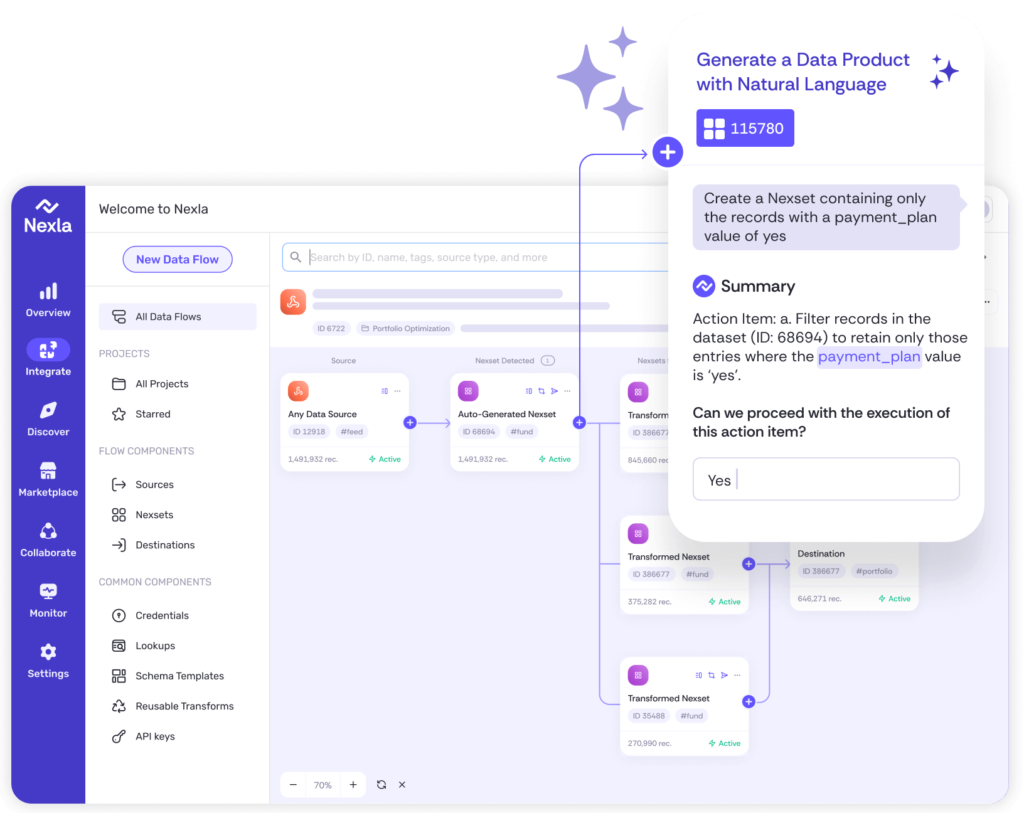

The five styles of data processing essentially address variations in the velocity of data flow and in the sequence of the E, T, and L steps. Nexla was built with a vision of data flow across organizations that is orthogonal to how other companies approach data processing. Our approach solves the complex problem of addressing every possible variation of data processing while keeping it simple and easy to use. Nexsets provide a logical view of data that abstracts away format, schema, and velocity. The result is a simple yet powerful, converged approach to data processing.

In the next post, I will take a deeper look at ELT. ELT has skyrocketed in popularity, and for good reasons. But with popularity often comes hype, and shortcomings get overlooked. Stay tuned to learn when and how best to leverage ELT.
