
In this series we interview the leading thinkers in Data Operations to discuss the state of DataOps from their point of view. Learn about what they do, their biggest challenges, and how they are utilizing DataOps to drive their businesses.

We recently spoke with Apoorv Saxena, Product Management lead for Cloud AI & Research at Google. As a product manager, Apoorv brings Google AI to the world through Natural Language Understanding (NLU) products. He’s also the Co-Chair and Co-Founder of the groundbreaking AI Frontiers conference, which returns to the Santa Clara Convention Center, November 3rd-5th, 2017.

Nexla: You head up product management for Cloud AI and Research at Google. Tell us about what you’re building and researching.

Apoorv Saxena: In my current role, I lead AI products in Google Cloud that help you understand human language and written text. This includes our solutions around Google Assistant, Natural Language Understanding, and Google translation, plus more in the pipeline.

If you think about unstructured data, there are a few pillars. We need to understand video, understand images, and understand written and spoken text. The text part is where I focus. Someone building a chatbot would be our customer. A lot of media companies are using our services for real-time translation, for example.

N: Tell us about the conference you helped start, AI Frontiers. It’s back in Santa Clara this November 3 – 5.

AS: A group of folks back in 2015 were interested in learning about machine learning and AI and its advances. We realized there was not one place where you could learn from the people actually working at the cutting edge of AI research and applying it to solve real world problems. AI is a fast evolving field, so the best people to learn from are the ones publishing relevant research papers and implementing the technology.

It started with my involvement at the Silicon Valley Big Data Association and AI Association. We then expanded to members at universities and big companies, and we now have more than 5,000 members. The AI Frontiers conference came out of the goal to bring together practitioners and researchers. The conference speakers are technical and have done something significant. They have published high-impact research in the last two years, or are working on some exciting AI products. That’s the conference: the core value is the meeting of high quality minds working in AI. The message is resonating – people come to the conference to learn from the best in the field. Our efforts are being recognized, because large companies such as Microsoft, Google, Facebook, and Amazon are now sponsors.

There are so many AI conferences that have a commercial aspect. These conferences become more about product pitches than technological conversations. AI Frontiers is very non-commercial. The goal is to make the experience educational, to give our attendees the opportunity to learn from the best. Only a handful of conferences can bring the best AI talent together in one place, and AI Frontiers is one of them.

N: Google has open-sourced technology related to AI. How does it envision companies using this tech and what types of data do companies need?

AS: We open-sourced AI technology because we believe in the democratization of AI. We believe in a world where companies will make AI pervasive, embedded in all key applications and workflows. In that world, giving developers and engineers access to the best tools and frameworks becomes critical to the wide scale adoption of AI, just as open-sourcing Android helped the adoption of mobile technology.

In terms of what types of data companies need, it will be all kinds of data, because AI is going to be pervasive. I will focus on the part that I deal with, human-computer interactive data. It can be a company talking to a customer, sending an email, someone calling in to a call center and giving information, a user issuing a voice command on their phone, etc. This data comes in so many forms – chat logs, voice commands, call recordings, news feeds, web articles, documents in various formats, etc. The ability to gather, process, train, and predict on such data from multiple sources and in many different formats, while making sure you protect user privacy and conform to relevant regulations, is a huge challenge. In this future AI-driven world, data management and data processing are critical to any company’s success.

N: How do you think companies will manage the many, many data sets required to build and sustain effective AI and ML?

AS: Data, especially high quality training data, is oxygen for any AI system. At Google, we have built a very extensive data processing and training infrastructure to train our AI systems. I believe as companies start to ramp up their AI investments, investing in such an infrastructure is going to be key to long term success. But not all data is the same. You need to start by triaging data and identifying its sources. Which sources are valuable and which are less valuable from a training and predictive value perspective? When we work with large clients, up to 70-80% of the time is spent on finding the right data, setting up the pipelines, cleaning, and annotating data.

The next question is what is the freshness of the data and how often is it updated? That’s important. It’s important to manage data, triage data, provide access control, and keep things updated as schemas change. Another key piece is data annotation, especially for supervised learning use cases. This, combined with data validation and transformation on a continuous basis, are key investments most companies need to make in order to be successful in AI. If you can’t get your data right, you can’t get your algorithm right.
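As a rough illustration of the freshness and schema checks described above, here is a minimal Python sketch of continuous validation for one training record. It is not how Google implements this. The field names in `EXPECTED_SCHEMA`, the 30-day `MAX_AGE` threshold, and the `validate_record` helper are all hypothetical and chosen only for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {
    "text": str,
    "language": str,
    "label": str,
    "updated_at": datetime,
}

# Hypothetical freshness threshold; the interview names no specific value.
MAX_AGE = timedelta(days=30)


def validate_record(record, now):
    """Return a list of problems found in one training record (empty if none)."""
    problems = []
    # Check that every expected field is present and has the expected type.
    for field, expected_type in EXPECTED_SCHEMA.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"wrong type for {field}")
    # Fields outside the schema may signal that an upstream schema changed.
    for field in record:
        if field not in EXPECTED_SCHEMA:
            problems.append(f"unexpected field: {field}")
    # Flag records that have not been updated recently enough.
    updated_at = record.get("updated_at")
    if isinstance(updated_at, datetime) and now - updated_at > MAX_AGE:
        problems.append("stale record")
    return problems
```

Running a check like this on every batch, rather than once at ingestion, is one way to notice schema changes and stale sources before they reach training.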

N: Do you think data inherently wants to be moving or should it be nicely organized in a data warehouse where people can have access to it?

AS: The answer depends on what kind of data you deal with and what you use the data for. For a lot of the use cases that our customers and I work on, we deal with real-time unstructured data. When we use this data for training, we source such data directly from block storage, not necessarily from a data warehouse. Predictive data mostly comes in the form of streaming data directly from the application.

N: How does Data Operations work at Google?

AS: We have huge data operations and infrastructure that service our Ads business. But I will focus on data operations for our Cloud AI systems. Most of the data infrastructure and operations in this group are built and run by a mix of data scientists and data/infrastructure engineers.

When it comes to AI systems, the data scientists are still involved in designing and managing our pipelines and data processing. For example, a lot of the training example validation and vocabulary generation tools are still written and maintained by data scientists today. However, I believe as the sophistication of such tools increases and the industry standardizes around a few AI frameworks, data scientists will be less involved going forward.

N: What has been your biggest data challenge related to NLU goals so far?

AS: NLU is still a fast-evolving field. On the algorithm side, deep learning has only recently begun to show progress in NLP similar to what we saw in image and speech processing. The biggest challenge around data is getting good training data in multiple languages. Our model is only as good as our training data.

You can map any breakthrough in NLP to the high quality datasets that were available at the time. We saw this in translation, and we are seeing this in question and answer systems now. Also, you can’t have data in just one language; you have to have high quality data in many languages. The complexity of a task in many languages increases by 15-20x based on the number of languages.

N: Prediction time! Ten years ago, “the cloud” was born. As you look into your crystal ball, what will be the biggest changes to data in the next 10 years?

AS: In the next 10 years, I think we will get more sophisticated in how data is valued and priced. Right now, it is pretty early in how we price training data. This is due to either a lack of good tools to mine and extract value from data or a lack of good high value use cases that use that data. What is valued highly today might not hold value tomorrow because of some of the advances in algorithms and data labeling. For example, the cost of training data for supervised learning might come down significantly as new algorithms that do not require a lot of training data become more mainstream.

However, some forms of data will retain value and even increase in value over time. Data that is proprietary (such as health records and weather data) or that adds to human knowledge and context understanding will be valued even more as we come up with new ways to monetize it.

Learn more at the AI Frontiers conference November 3 – 5, 2017.

Can’t get enough DataOps? Check out our first interview here.

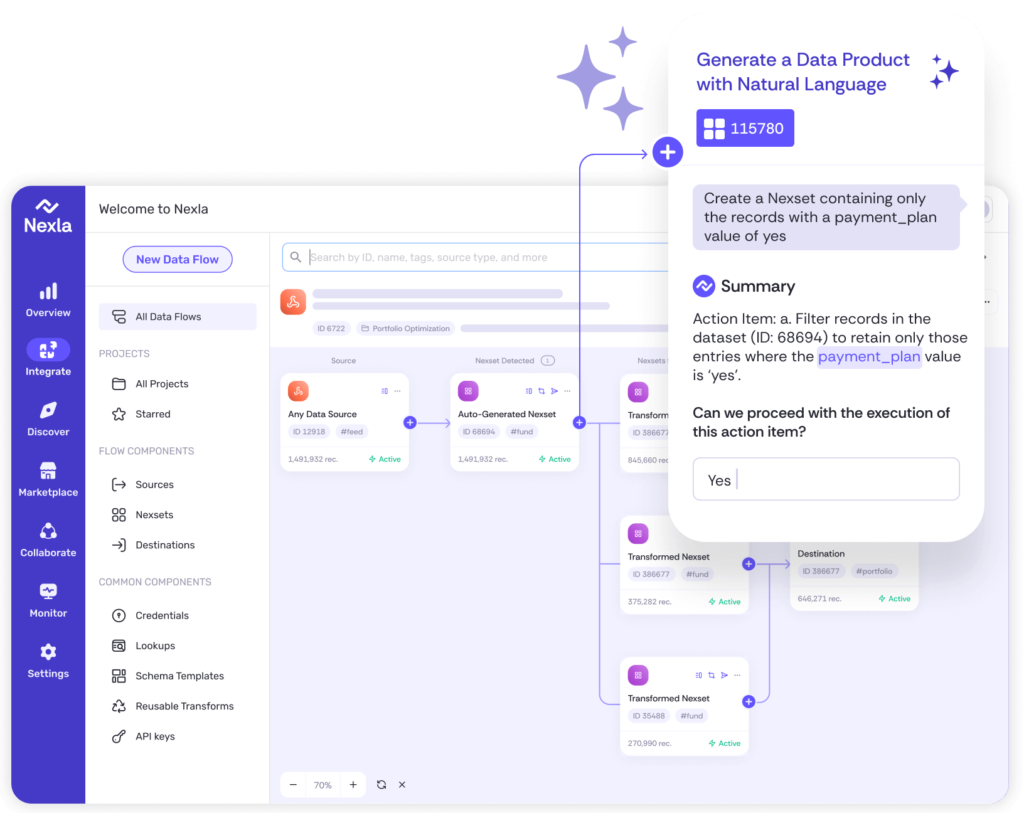
