Success now hinges on how well businesses share data beyond their walls. Seamless intercompany data flow fuels smarter decisions, faster collaboration, and real growth. In this new era, connection is the key to competitiveness.

Teradata has been a popular on-premise database system for decades, catering to enterprises who want to manage and analyze large volumes of data. But the advent of Snowflake, a modern cloud computing data warehouse, has opened up modern data analytics capabilities. For example, Snowflake’s multi-cluster cloud infrastructure that separates compute from storage enables enterprises to automatically and instantly scale their infrastructure.

It is no surprise that a lot of enterprises have been evaluating using Snowflake in their modern applications. However, the transition from Teradata to Snowflake is not an easy one click switch. Invariably an enterprise would have built ETL pipelines to push data to Teradata, customized visualization tools to pull data out of Teradata, designed client applications dependant closely on data from Teradata, and built tooling around Teradata. With this whole ecosystem to consider, migration from Teradata to Snowflake might seem a daunting task.

But that’s where Nexla comes in! Follow along this step-by-step migration guide to execute a successful migration strategy, including special tips for automation using Nexla’s Teradata Exporter.

Migrations are usually complicated and can be a mess if not planned and executed properly. We recommend a carefully thought out step by step approach to migrating your data from Teradata to Snowflake.

Start with analyzing your current Teradata implementation to get a full 360 view of your Teradata ecosystem. This involves finding answers to the following questions:

Document your answers and use this list to evaluate the level of support for these inputs and outputs in Snowflake. Additionally, use this list as an input to the migration QA process.

Now that you are done with understanding and documenting your current Teradata usage, let’s lay out an execution plan for the migration.

Instead of an all-in-one-go approach to migration, we recommend a phased approach. Migrate low impact tables, databases, and applications first, get some success under the belt, and then move-on to the complex migration tasks.

No matter which approach you take, by the end of this step you will have a full plan of action for the migration in place.

With your plan-of-action in hand, let’s get started executing the migration. First up – you want to set up your Snowflake account to meet your needs.

Use the Snowflake UI / CLI to configure the following on Snowflake:

Now that you have primed Snowflake with accounts and databases, you can start creating the database structure. This typically involves using DDL exports from Teradata, converting them to Snowflake compatible DDL and executing it. The table below provides a mapping between Teradata and Snowflake column types.

However, an intelligent DataOps platform like Nexla can handle this automatically, saving you a tedious and potentially error prone process. With Nexla you just need to select which Teradata tables and data-fields need to be migrated to Snowflake. Nexla will automatically create Snowflake DDL compliant Snowflake tables and handle the DDL migration based on the data coming from your Teradata database. Additionally, Nexla will automatically skip Teradata system tables like DBC, TD_SYSGPL, TD_SYSXML, etc during migration.

| Teradata Type | Snowflake Type | Comments |

|---|---|---|

| A1 | ARRAY | |

| AN | ARRAY | |

| AT | TIME | |

| BF | BINARY | |

| BO | BINARY | BLOB data limited to 8 MB on Snowflake |

| BV | BINARY | |

| CF | VARCHAR | |

| CO | VARCHAR | CLOB data can be stored as varchar. Limited to 16 MB on Snowflake |

| CV | VARCHAR | |

| D | NUMBER | |

| DA | DATE | |

| INTERVAL TYPES (DH, DM, DS,DY,HM,HR,HS,MI,MO,MS,YM,YR) | N/A | Not supported in Snowflake. Can be supported as a number and calculations can be performed using date functions. |

| DT | N/A | Not supported in Snowflake |

| F | FLOAT | |

| INTEGER TYPES (I, I1,I2,I8) | NUMBER | |

| JN | VARIANT | |

| LF, LV | N/A | Not supported in Snowflake |

| N | NUMBER | |

| PD | N/A | Separate into two date fields |

| PM | N/A | Separate into two fields (TIMESTAMP_TZ) |

| PS | N/A | Separate into two timestamp fields (TIMESTAMP_NTZ) |

| PT | N/A | Separate into two time fields |

| PZ | N/A | Separate into two time fields |

| SZ | TIMESTAMP_TZ | |

| TS | TIMESTAMP_NTZ | |

| UF/UV | N/A | Not Supported in Snowflake |

| UDT | N/A | Not Supported in Snowflake |

| XM | VARIANT |

After Step 4 your Snowflake table should be ready to handle data from Teradata. Now you can load data from Teradata into Snowflake!

If you are running Teradata is running on prem, it is advisable to push data first to a cloud storage like AWS S3 or Azure DataBox before loading into Snowflake.

This step is another reason for considering an intelligent DataOps platform like Nexla. Instead of writing scripts for performing this one time data load, with Nexla all you need to do is trigger a job for Nexla’s custom Teradata Exporter. The exporter will automatically extract data from Teradata, load it in into an intermediate cloud storage if needed, and subsequently load the data into Snowflake.

In Step 5, we replicated all historical data from Teradata into Snowflake. In order to decommission Teradata completely, we now need to connect Snowflake directly with the sources of data that are writing into Teradata.

We recommend connecting each source of data to Snowflake while it is still writing to Teradata. This will allow you to test applications in parallel and ensure seamless transition once you disconnect the source to Teradata link.

Snowflake has JDBC drivers which can be used to load data programmatically into Snowflake. But instead of writing custom connection scripts between your sources and Snowflake, we recommend trying out Nexla. You can use Nexla to load data into Snowflake from virtually any source. Nexla supports no code real-time integrations from files, databases, API’s, message buses, emails, into Snowflake and can significantly reduce time to market for ongoing data loads into Snowflake.

Check out all the benefits of using Nexla for writing Snowflake here: https://nexla.com/snowflake/

Once you have ensured that all your data is now being written into Snowflake, start tuning all your applications that were dependant on Teradata to read data from Snowflake. We recommend running two parallel versions of each application during the migration – one which continues consuming data from Teradata and another that is based on data from Snowflake.

Customizing scripts and applications can be complicated and might require significant re-engineering. Yet another reason for considering a DataOps platform like Nexla for building automated pipelines! Nexla enables self-serve, no-code, real-time data extraction from Snowflake to virtually any destination type – file, databases, APIs, message buses, etc.

Snowflake consumption patterns using Nexla look like the diagram below. With Nexla’s unique data flow branching model data from your Snowflake tables need only be read once even if multiple applications need different slices of data from those tables.

Once you have configured parallel end-to-end stacks incorporating data flowing in and out of Snowflake and Teradata respectively, a comprehensive QA cycle should be run to compare functionality across two systems. In addition to validating the applications, you should compare row counts, sums of numeric fields, and hashes of string fields to identify discrepancies.

This step can be easily automated with Nexla. You can configure Nexla data flows which write data based on query results from Teradata and Snowflake into a file. This file can serve as a comparator between two systems.

We recommend doing validations in two phases:

In the initial phase you continue with the Teradata stack as primary and Snowflake as secondary. Run this phase for a short period of time. During this period run the comparator as a scheduled process to monitor for differences. If no differences are found, you can move on to the next phase of validations.

In this phase we flip over the stacks and make Snowflake as primary data system and Teradata as secondary. Just like the previous validation phase, run the comparator as a scheduled process to monitor for differences. If no differences are found Teradata system can be decommissioned.

If you chose the phased migration approach in Step 2, repeat steps 3-8 for each phase of your migration plan before moving on to Step 9.

Finally we are done with migration! Now that all the applications are running on Snowflake, inform all your Teradata users about their new Snowflake accounts and other changes. Turn off all Teradata load processes and revoke access to Teradata.

That was a comprehensive step-by-step plan for migrating from Teradata to Snowflake designed to avoid any hiccups pre or post migration. As we saw, a big chunk of the migration process can be achieved more quickly and reliably with an intelligent, modern DataOps platform like Nexla.

Fast-track your migration and avoid common pitfalls – signup at https://nexla.com/start-free-trial/ or contact us at support@nexla.com for more information!

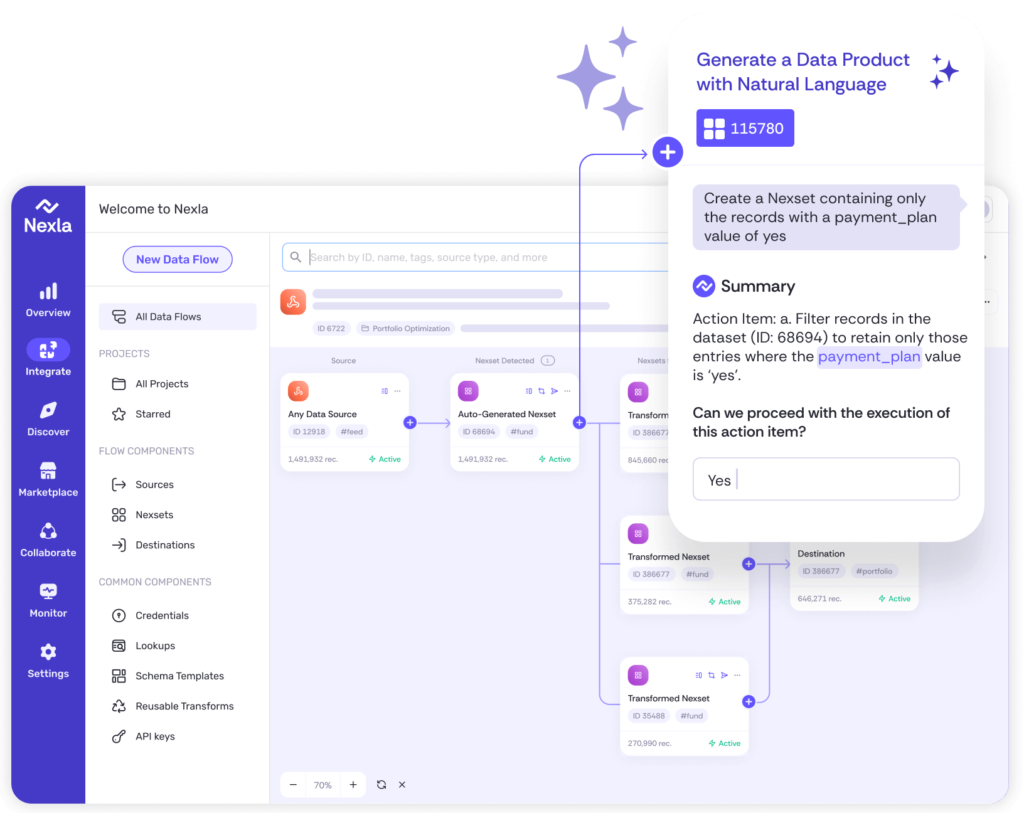

Instantly turn any data into ready-to-use products, integrate for AI and analytics, and do it all 10x faster—no coding needed.