
What is Stream Processing?

Stream processing is the processing of event data in real time or near real time. The goal is to continuously take in streams of data (or “events”) and immediately process (or transform) them into streams of outputs. The input is typically one or more event streams of data “in motion” containing information such as social media activity, emails, IoT sensor data from physical assets (e.g. self-driving cars), insurance claims, customer orders, and bank deposits/withdrawals. The types of streaming data are endless.

What does Stream Processing do?

Stream processing handles data as it becomes available. It doesn’t wait for a time boundary before processing data, as batch processing typically does. Before stream processing came along, batch processing would save a range of data as a file and send it to a database or to an even bigger form of mass data storage such as a data lake. To pull value from it, engineers would have to select the data they wanted, clean and prepare it, and run applications to query it. In other words, batch processing requires events to be collected and stored before queries can be run.

One of the biggest disadvantages of batch processing is that it cuts against the shape of the data: a lot of data is inherently structured as continuous streams of events. With batch processing, those continuous streams are chopped into sets of events from fixed ranges of time. An engineer has to process data one time range at a time, unlike stream processing, where data is processed continuously.

For example, let’s look at streams of Twitter data. For every tweet, you have a timestamp, retweets, time of retweet, likes, impressions, etc. On average, there are 350,000 tweets sent per minute. Now, let’s say an engineer wants to work with and analyze this data, but can only do it in batches. At some point, the engineer will need to decide what the batch boundaries are, query each batch, and repeat. After repeating several times, the engineer will then have to aggregate the results in order to get an overall picture of the data.
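To make the batch workflow concrete, here is a minimal Python sketch of choosing a batch boundary, querying each batch, and combining the results. The sample events, the 60-second boundary, and the helper names (`split_into_batches`, `summarize_batch`) are all made up for illustration; this is not how any particular batch system is implemented.

```python
from collections import Counter

# Hypothetical tweet events: (timestamp in seconds, tweet id, retweet count).
TWEETS = [
    (5, "a", 3),
    (42, "b", 10),
    (61, "c", 1),
    (118, "d", 7),
    (130, "e", 2),
]

BATCH_SECONDS = 60  # the batch boundary the engineer has to choose (assumed)


def split_into_batches(events, batch_seconds):
    """Group events by the fixed time range each one falls into."""
    batches = {}
    for event in events:
        batch_start = (event[0] // batch_seconds) * batch_seconds
        batches.setdefault(batch_start, []).append(event)
    return batches


def summarize_batch(batch):
    """Query a single batch: tweet count and total retweets."""
    return Counter(tweets=len(batch), retweets=sum(e[2] for e in batch))


batches = split_into_batches(TWEETS, BATCH_SECONDS)
per_batch = {start: summarize_batch(b) for start, b in batches.items()}

# The overall picture only exists after every batch result is combined.
overall = sum(per_batch.values(), Counter())
print(overall)
```

Note that nothing can be reported for a time range until its batch has been collected and queried, and the combined view has to be rebuilt each time a new batch lands.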

With stream processing, tweet data is processed as it comes in. Since all data is processed, it can be viewed cohesively at any given time, in its most up-to-date form. Stream processing can also relieve engineers of mundane tasks such as traditional ETL processes for integrating data, because it can filter and enrich data as it comes in. In the Twitter example, a condition such as a specific number of retweets can be applied to the event stream so that only meaningful data (tweets with that number of retweets) is left for analysis.
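As a rough illustration of filtering and enriching events as they arrive, the sketch below uses a Python generator as a stand-in for a live tweet feed. The threshold `MIN_RETWEETS`, the event fields, and the `engagement` enrichment are assumptions chosen for the example, not part of any real Twitter or Nexla API.

```python
MIN_RETWEETS = 5  # hypothetical cutoff for a "meaningful" tweet


def tweet_stream():
    """Stand-in for a live feed; a real source would keep producing events."""
    yield {"id": "a", "retweets": 3, "likes": 9}
    yield {"id": "b", "retweets": 10, "likes": 40}
    yield {"id": "c", "retweets": 1, "likes": 0}
    yield {"id": "d", "retweets": 7, "likes": 21}


def filter_and_enrich(events, min_retweets):
    """Handle each event as it arrives: drop low-retweet tweets, enrich the rest."""
    for event in events:
        if event["retweets"] < min_retweets:
            continue  # filtered out before it ever reaches analysis
        # Enrichment: add a derived field (an invented metric for this sketch).
        yield {**event, "engagement": event["retweets"] + event["likes"]}


# list() is used only so the demo terminates; a real consumer would
# iterate over the stream indefinitely.
results = list(filter_and_enrich(tweet_stream(), MIN_RETWEETS))
for tweet in results:
    print(tweet)
```

Each event is handled once, on arrival, so there is no batch boundary to choose and no later step to stitch results together.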

The biggest advantage of stream processing is that insights are provided in real time or near real time. If you work with data, you know the most important part is the value (or insights) it provides. Though batch processing can be quick, it is still a point-in-time snapshot. You might have to wait for the next batch run for the most recent data to be reflected. With stream processing, the most recent data is reflected almost immediately.

A Stream Processing Infrastructure for Data Integration

When you break it down, the primary function of any stream processing infrastructure is to ensure data flows from input to output efficiently in real-time or near real-time.

According to Gartner’s Market Guide for Event Stream Processing, the focus of a stream data integration event stream processor, such as Nexla, is “ingestion and processing of data sources targeting real-time extraction, transformation and loading (ETL) and data integration use cases. The products filter and enrich the data, and optionally calculate time-windowed aggregations before storing the results in a database, file system or some other store such as an event broker. Analytics, dashboards and alerts are a secondary concern.”
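The “time-windowed aggregations” mentioned in the quote can be pictured with a small, generic Python sketch of a tumbling (fixed, non-overlapping) window. It is not taken from Gartner or from Nexla’s product: the function name `tumbling_window_totals`, the 60-second window, and the sample order events are all hypothetical, and the sketch assumes events arrive in timestamp order.

```python
WINDOW_SECONDS = 60  # hypothetical window size


def tumbling_window_totals(events, window_seconds):
    """Yield (window_start, total) each time a window closes.

    Assumes events arrive in timestamp order; handling late or
    out-of-order events is outside the scope of this sketch.
    """
    current_start = None
    total = 0.0
    for timestamp, amount in events:
        window_start = (timestamp // window_seconds) * window_seconds
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            # The first event of a new window closes the previous one.
            yield current_start, total
            current_start, total = window_start, 0.0
        total += amount
    if current_start is not None:
        yield current_start, total  # flush the last, still-open window


# Hypothetical customer orders: (timestamp in seconds, order amount).
orders = [(3, 20.0), (45, 5.5), (70, 12.0), (125, 8.0), (170, 2.0)]
totals = list(tumbling_window_totals(orders, WINDOW_SECONDS))
print(totals)
```

In a real pipeline, each emitted total would be written to a database, file system, or event broker as soon as its window closes, rather than being collected into a list.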

Things to look for in a strong stream processing infrastructure for data integration:

Who is Stream Processing for?

More and more, companies across many sectors are adopting stream processing as a way to shorten the time it takes to get insight from data. Stream processing is an ideal fit for anyone who is working with any type of real-time or near real-time data. This includes data about customer orders, insurance claims, bank deposits/withdrawals, tweets, Facebook posts, emails, financial or other markets, or sensor data from physical assets such as vehicles, mobile devices or machines across sectors like retail, healthcare, banking, or IoT.

Along those lines, stream processing is also beneficial for companies whose engineering teams are stretched thin by data processing tasks. 58% of companies are using real-time streaming today. More than half of that 58% say at least 50% of their data is currently processed via real-time streaming. Couple that with the 63% of companies that say their real-time streaming will increase within the next year, and it’s safe to say that an engineer’s job of maintaining a strong stream processing infrastructure is becoming more critical than ever before.

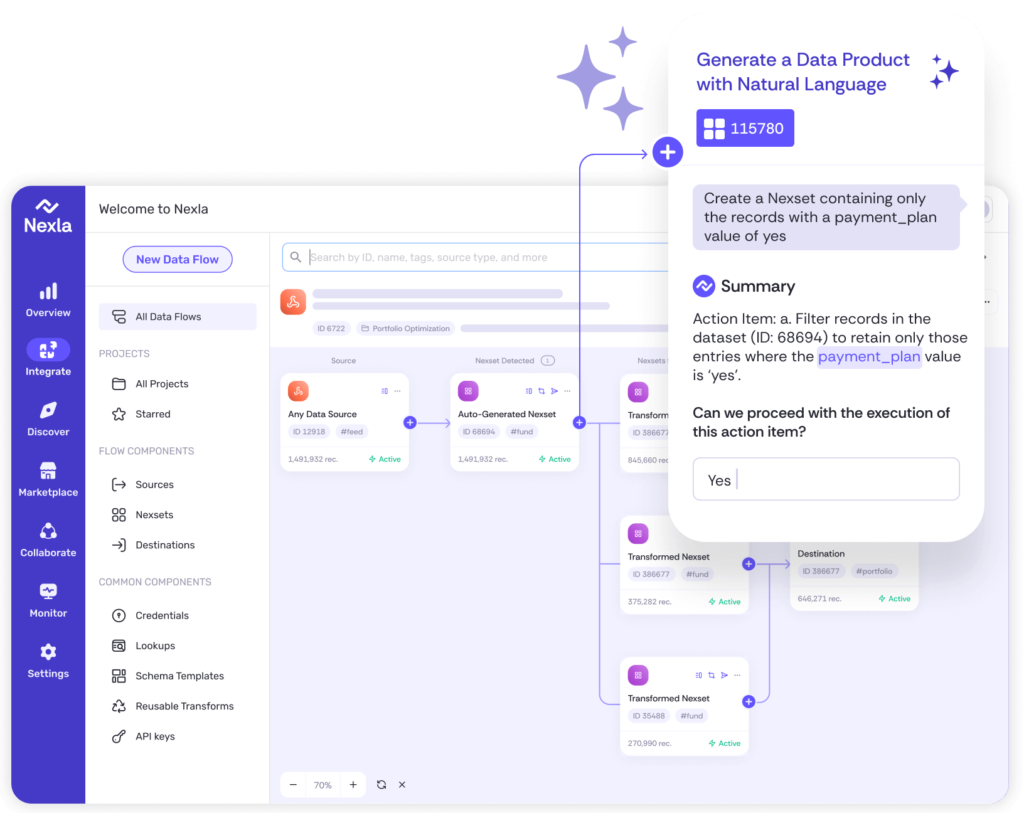

Stream processing is also made for those who want faster access to the real-time data they need. Recently recognized as a Representative Vendor by Gartner, Nexla provides event stream data integration solutions for real-time streaming data. The Nexla data operations platform has an intuitive interface that allows analysts and business users to easily build the data flows they need without disturbing engineering. Nexla provides self-service tools for data mapping, validation, error isolation, and data enrichment, which makes collaborative workflows possible for real-time data.

Want to learn whether stream processing is right for you? We’d love to help! Chat with us here.
