From Hallucinations to Trust: Context Engineering for Enterprise AI

Context engineering is the systematic practice of designing and controlling the information AI models consume at runtime, ensuring outputs are accurate, auditable, and compliant.

In enterprise environments, the “right” data rarely lives in one system. It arrives as raw feeds from operational apps, vendors, and event streams. Without shared definitions, quality rules, and lineage, each consumer rebuilds a slightly different version of the truth.

When teams try to scale generative AI on top of that, the failure mode is predictable. Outputs sound confident, but the underlying context is inconsistent, unvalidated, and hard to trace. That is one reason Gartner’s 2024 forecast predicts that 30% of GenAI projects will be abandoned after proof of concept, often tied to data quality problems and risk controls that do not survive production.

Metadata-intelligent integration addresses those gaps by continuously generating and operationalizing the context that makes feeds reusable. Schema and semantics, field definitions, validation rules, lineage, version history, and governed delivery become part of the integration output.

This blog lays out a metadata-first blueprint for turning raw feeds into reusable data products, then shows how Nexla operationalizes that blueprint end to end.

Raw feeds stay raw because most data “integration” delivers rows, not usable meaning. The data lands, but the operational context required to reuse it does not.

dbt Labs’ State of Analytics Engineering reports that 55% of respondents spend their time maintaining or organizing data sets. More than half rank it as their number one task.

One of the reasons this happens is that feeds arrive without reusable context, so every team repeats the same interpretation and cleanup work. You may get column names and data types, but not the details that prevent misreadings.

For example, a feed has a field called status. One team treats status="closed" as “resolved,” another treats it as “no longer active,” and a third excludes it because they assume it includes cancellations. None of that logic is in the feed. It lives in dashboards and SQL snippets.

Without this context, every team has to re-derive definitions, joins, and quality rules before they can trust the feed. The same cleanup work gets repeated in multiple pipelines, and each team hard-codes its own assumptions into one-off transformations.

When the source system changes, the definitions and rules that downstream teams inferred can become outdated. The ingestion job may still complete, but the output is no longer consistent with the original meaning, so reports and models start producing different results.

If there is no contract to signal what changed and no lineage to show which assets depend on the changed field, teams have to trace the issue manually across multiple pipelines. That slows diagnosis, increases duplicated fixes, and prevents the feed from becoming a stable, reusable data product.

Metadata intelligence fixes the “data moves, but meaning does not” pattern by making context a first-class output of integration and keeping it current as sources change.

Instead of treating metadata as static documentation, metadata-intelligent integration continuously discovers, infers, and maintains the definitions, quality expectations, and traceability that downstream teams depend on. Field semantics and data validation logic travel with the feed, instead of being buried in one-off SQL or undocumented team knowledge.

In practice, this shows up in four capabilities in the integration layer:

When those capabilities are present, the output becomes a reusable data product. It is a dataset packaged with a clear contract that includes:

With that contract in place, every consumer works from the same shared understanding. Teams can query the product or integrate it into applications without rediscovering what fields mean, rebuilding quality checks, or tracing dependencies by hand.

The goal is to turn changing source feeds into data products that teams can reuse. A “data product” here is a dataset plus a published contract, stored in a catalog or registry, that contains definitions, checks, lineage, and access policy in a form teams can query and enforce.
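To make the idea of “a dataset plus a published contract” concrete, here is a minimal sketch of what such a contract could look like as a plain Python object. The class names, field names, the `support_tickets` product, and the roles are all hypothetical and chosen for illustration. A real catalog or registry will have its own format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    name: str
    dtype: str
    description: str  # the business meaning, not just the type
    nullable: bool = False


@dataclass(frozen=True)
class DataContract:
    product: str
    version: str
    owner: str
    fields: tuple[FieldSpec, ...]
    checks: tuple[str, ...] = ()      # names of checks that must pass
    upstream: tuple[str, ...] = ()    # lineage: feeds this product is built from
    allowed_roles: frozenset[str] = frozenset()

    def describe(self, field_name: str) -> str:
        """Return the published definition of a field."""
        for spec in self.fields:
            if spec.name == field_name:
                return spec.description
        raise KeyError(field_name)

    def can_read(self, role: str) -> bool:
        """Apply the access policy stored in the contract."""
        return role in self.allowed_roles


# Hypothetical contract for the ambiguous "status" field discussed earlier.
tickets = DataContract(
    product="support_tickets",
    version="1.0.0",
    owner="support-data@example.com",
    fields=(
        FieldSpec("ticket_id", "string", "Unique ticket identifier."),
        FieldSpec("status", "string",
                  "closed = resolved by an agent; cancellations use status = cancelled."),
    ),
    checks=("ticket_id_present", "status_allowed"),
    upstream=("helpdesk_api.tickets",),
    allowed_roles=frozenset({"analyst", "support_ops"}),
)

print(tickets.describe("status"))
```

Because the definition of `status` lives in the contract, every consumer reads the same answer instead of inferring it from a dashboard filter.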

This blueprint treats integration as an end-to-end workflow, where each step prevents common issues seen with raw feeds, including missing definitions, late discovery of source changes, and slow root-cause analysis.

Ingest through managed connectors and APIs that handle authentication, incremental pulls, retries, and source-side changes like renamed fields or new endpoints.

This avoids one-off scripts that break when a vendor updates an

Profile the incoming data on each load and keep the results versioned. Track schema, data types, null rates, value ranges, key candidates, and relationship patterns. Compare the latest load to prior loads to detect changes. Doing so lets you catch changes as they happen, such as a new enum value, a widened type, or a spike in missing values.

Teams see the change before it shows up as a metric shift. Treat each change as either non-breaking, which you can roll forward automatically, or breaking, which should trigger a new version or a required consumer update.
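The profile-and-compare loop can be sketched in a few lines of Python. This is an illustrative example rather than a production profiler: the function names, the 0.2 null-rate threshold, and the rule for which changes count as breaking are assumptions you would replace with your own policy. It also assumes scalar, hashable values.

```python
def profile(rows: list[dict]) -> dict:
    """Summarize one load: per-column type names, null rate, and distinct values."""
    columns = {key for row in rows for key in row}
    result = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        result[col] = {
            "types": sorted({type(v).__name__ for v in present}),
            "null_rate": 1 - len(present) / len(values),
            "values": set(present),
        }
    return result


def detect_drift(previous: dict, current: dict, enum_columns: set[str],
                 null_spike: float = 0.2) -> list[tuple[str, str, str]]:
    """Compare two profiles and return (column, change, severity) tuples."""
    changes = []
    for col in sorted(previous.keys() - current.keys()):
        changes.append((col, "column removed", "breaking"))
    for col in sorted(current.keys() - previous.keys()):
        # Assumed policy: a new column can roll forward automatically.
        changes.append((col, "column added", "non-breaking"))
    for col in sorted(previous.keys() & current.keys()):
        old, new = previous[col], current[col]
        if old["types"] != new["types"]:
            changes.append((col, f"type changed {old['types']} -> {new['types']}", "breaking"))
        added = new["values"] - old["values"]
        if col in enum_columns and added:
            changes.append((col, f"new enum values {sorted(added)}", "breaking"))
        if new["null_rate"] - old["null_rate"] > null_spike:
            changes.append((col, "null rate spike", "breaking"))
    return changes


yesterday = [{"id": 1, "status": "open"}, {"id": 2, "status": "closed"}]
today = [{"id": 3, "status": "open", "region": "EU"},
         {"id": 4, "status": "cancelled", "region": None}]

changes = detect_drift(profile(yesterday), profile(today), enum_columns={"status"})
for change in changes:
    print(change)
```

In this run the new `region` column is reported as non-breaking, while the unseen `cancelled` status is flagged as breaking so consumers can review it before it shifts a metric.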

Map the raw feed into stable business entities, such as customer, order, claim, ticket, or device. Normalize naming and formats, set the grain explicitly, and define join keys.

Attach documentation metadata that people actually use, including field descriptions, ownership, tags, and approved usage notes. The output should answer common questions directly, such as “Is this net or gross?” or “Which timestamp is the event time?”

This step lets teams reuse the same entities and fields without re-deciding, or guessing, what each column means.
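As a rough illustration of shaping, the sketch below maps one raw record into a stable order entity. The raw column names (`cust_id`, `ord_no`, `amt`, `ts`), the target names, and the choice of one row per order as the grain are hypothetical. The point is that the naming, format, grain, and join-key decisions are written down once.

```python
from datetime import datetime, timezone

# Hypothetical mapping from raw vendor columns to business-facing names.
FIELD_MAP = {
    "cust_id": "customer_id",
    "ord_no": "order_id",
    "amt": "net_amount_usd",   # the name answers "is this net or gross?"
    "ts": "event_time",        # the name answers "which timestamp is the event time?"
}
GRAIN = ("order_id",)                  # one row per order
JOIN_KEYS = {"customer": "customer_id"}  # how this entity joins to customer


def shape_order(raw: dict) -> dict:
    """Rename fields and normalize formats for a single raw order record."""
    record = {target: raw[source] for source, target in FIELD_MAP.items()}
    record["customer_id"] = str(record["customer_id"]).strip()
    record["order_id"] = str(record["order_id"]).strip()
    record["net_amount_usd"] = round(float(record["net_amount_usd"]), 2)
    # Assumes the raw timestamp is Unix epoch seconds; stored as ISO 8601 in UTC.
    record["event_time"] = datetime.fromtimestamp(
        int(record["event_time"]), tz=timezone.utc
    ).isoformat()
    return record


order = shape_order({"cust_id": " 42 ", "ord_no": "A-1001", "amt": "19.990", "ts": 1700000000})
print(order)
```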

Write data checks as code and run them on every load. Cover schema, freshness, value ranges, referential integrity, and reconciliations to known totals.

Use the results to control what gets published. If a check fails, quarantine the affected records or block the load, then notify the owner. Where possible, trigger an automated fix.

Make ownership and timing explicit. For each check, name a primary owner, a backup, and an escalation route. Define what should normally happen when a threshold is breached.

For example, review the alert on the same working day and decide whether to fix, roll back, or apply a temporary control. Where it is safe to do so, agree on automated responses so issues are not left unattended if people are unavailable.

Doing so removes cleanup from dashboards, and data quality issues are caught at ingestion, where they are cheapest to diagnose and correct.
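A minimal sketch of checks as code with a quarantine path might look like the following. The check names, the allowed status values, and the record layout are hypothetical. A real setup would also cover freshness, referential integrity, and reconciliation, and would route alerts to the named owner.

```python
ALLOWED_STATUS = {"open", "closed", "cancelled"}

# Each check is a named rule applied to a single record.
CHECKS = {
    "ticket_id_present": lambda r: bool(r.get("ticket_id")),
    "status_allowed": lambda r: r.get("status") in ALLOWED_STATUS,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}


def run_checks(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split a load into publishable records and quarantined records."""
    publishable, quarantined = [], []
    for row in rows:
        failed = [name for name, check in CHECKS.items() if not check(row)]
        if failed:
            # Keep the failing record with the reasons, so the owner can triage it.
            quarantined.append({"record": row, "failed_checks": failed})
        else:
            publishable.append(row)
    return publishable, quarantined


load = [
    {"ticket_id": "T1", "status": "open", "amount": 10.0},
    {"ticket_id": "", "status": "archived", "amount": 5},
]
publishable, quarantined = run_checks(load)
print(len(publishable), "published;", len(quarantined), "quarantined")
```

Here only the failing record is held back. Blocking the whole load instead is a policy choice that depends on how the product is consumed.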

Track lineage from source fields to output fields and store versions of the product as it changes. Enforce access controls with role-based permissions and keep audit logs.

This makes change investigation faster. When a metric changes, teams can trace which upstream field changed, which transforms were applied, and which downstream tables and reports depend on it.
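Impact analysis over lineage is essentially a graph traversal. The sketch below assumes lineage is stored as a simple mapping from each field or asset to its direct dependents. The asset names are invented for illustration, and real lineage stores are usually richer.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the assets listed as its values.
LINEAGE = {
    "crm.accounts.status": ["customer.status"],
    "customer.status": ["churn_report.active_customers", "churn_model.features"],
    "churn_model.features": ["retention_dashboard.risk_score"],
}


def downstream(node: str, edges: dict[str, list[str]]) -> list[str]:
    """Return every asset that depends on `node`, nearest dependents first."""
    seen, order, queue = set(), [], deque([node])
    while queue:
        current = queue.popleft()
        for child in edges.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


impacted = downstream("crm.accounts.status", LINEAGE)
print(impacted)
```

When the source `status` field changes, the traversal lists the entity, report, model, and dashboard that need review, replacing a manual search across pipelines.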

Publish the product through stable interfaces such as warehouse tables, APIs, files, or streams. Deliver it to the destinations teams already use, such as a warehouse, lakehouse, BI tool, reverse ETL system, or downstream service.

Start from one canonical version of the product and use the same contract to generate each delivery form. That way, the warehouse table, API response, and file export reflect the same definitions, checks, and joins.

As a result, teams can consume a single product rather than five different copies with five different rules, and updates remain consistent over time.
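One way to keep delivery forms consistent is to generate each of them from the same field list. The sketch below derives a warehouse DDL statement and a JSON Schema for an API response from one hypothetical set of fields. The field names and the type mapping are assumptions for illustration.

```python
import json

# Single source of truth: (name, logical type, definition).
FIELDS = [
    ("order_id", "string", "Unique order identifier."),
    ("net_amount_usd", "number", "Order total net of tax, in USD."),
]
SQL_TYPES = {"string": "VARCHAR", "number": "DOUBLE PRECISION"}


def to_ddl(table: str, fields: list[tuple[str, str, str]]) -> str:
    """Render the warehouse table definition from the shared field list."""
    cols = ",\n".join(f"  {name} {SQL_TYPES[dtype]}" for name, dtype, _ in fields)
    return f"CREATE TABLE {table} (\n{cols}\n);"


def to_json_schema(fields: list[tuple[str, str, str]]) -> dict:
    """Render the API response schema from the same field list."""
    return {
        "type": "object",
        "properties": {
            name: {"type": dtype, "description": desc} for name, dtype, desc in fields
        },
        "required": [name for name, _, _ in fields],
    }


ddl = to_ddl("orders", FIELDS)
schema = to_json_schema(FIELDS)
print(ddl)
print(json.dumps(schema, indent=2))
```

Adding or redefining a field in `FIELDS` changes both outputs together, which is the property the canonical version is meant to guarantee.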

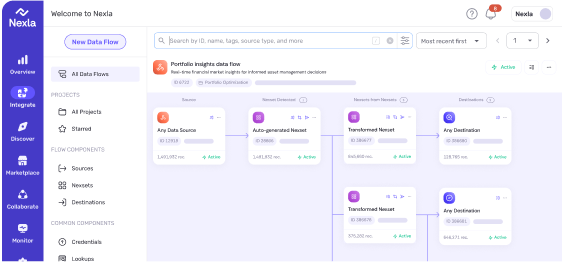

Nexla implements the blueprint by turning each source into a Nexset, which is a human-readable virtual data product.

A Nexset encapsulates schema, sample data, validation, error management, audit logs, and access control behind a consistent interface, so the “product boundary” is where the operational context lives.

Connect to source systems through Nexla’s universal bidirectional connectors or direct REST and SOAP API integration, so onboarding does not assume the source will stay static. Connector setup is configuration-driven, and Nexla uses AI to help connect, extract metadata, and configure the connector.

Once the source is connected, Nexla can automatically create a data product (Nexset) from the source’s raw data and metadata. This establishes a consistent product boundary from the first ingestion step.

For API-based sources, Nexla’s no-code API integration supports multi-level iteration, chained API calls, advanced pagination, and policies such as rate limiting, with throughput optimization. Nexla also enables configuring multi-step API responses by chaining calls “without manual loops,” and saving those rules to check an API for new data on a configured interval.

Users can leverage Nexla’s continuous metadata intelligence to keep a Nexset’s logical view current as sources change. During each ingestion cycle, Nexla analyzes incoming data and compares the latest structure against the existing Nexset schema to detect schema changes.

When a change is detected, Nexla handles it based on impact. Small, non-breaking updates can extend the existing Nexset schema, such as adding a new attribute. For larger changes, Nexla can create a new detected Nexset (reversioning) instead of silently changing the existing interface.

Nexla logs these changes and can send schema change notifications through in-app alerts, email, Slack, or webhooks, so teams can review what changed before consumers are affected. Version history is tracked across connectors, Nexsets, transforms, and flows, so teams can see what changed and when without having to reconstruct the timeline after an incident.

Teams can standardize raw feeds into reusable entities at the Nexset level. Nexla enables them to apply transforms, joins, enrichment, and filters so that inconsistent source layouts become a consistent business-facing model.

This shaping work happens in the Nexset rule panel, where teams apply transformation logic, default values, record filters, validations, annotations, and custom transform code when needed.

Nexla also keeps meaning attached to the product by supporting documentation-ready metadata on the Nexset. Teams can add descriptions and other product information in the Nexset view. As a result, consumers who use the data see its definitions directly rather than reverse-engineering field intent from SQL or dashboards.

Nexla enables teams to apply validation rules at the Nexset boundary to monitor for data errors and schema changes as data moves through ingestion and operations. When a data error is detected, Nexla automatically corrects it or quarantines the affected records. Schema changes can be propagated downstream, and every change is captured in audit trails.

The platform also supports operational notifications so teams see issues when they occur. For example, schema change detection includes Slack notifications via a configured Slack webhook. Nexla also supports dedicated notification types for schema changes, allowing teams to review updates before they affect consumers.

With Nexla, teams can keep traceability and access control attached to the Nexset through lineage and audit trails. It also provides change tracking and version history, so teams can see what changed, who changed it, and when.

Nexla also enforces governance controls at the same boundary. It can detect and tag PII, apply masking and hashing rules, and manage sharing through marketplace approvals so access is granted deliberately and remains auditable.

Lastly, users can publish governed Nexsets through Nexla’s private marketplace, so consumers can discover data products and request access instead of rebuilding pipelines.

Nexla also delivers the same canonical Nexset to the destinations teams already use. Its converged integration model supports connectors and APIs across multiple delivery styles, so the product can be delivered as tables, APIs, files, or streams from the same Nexset.

Stop treating feeds as extracts and start treating them as products.

Pick one high-value feed that multiple teams rely on and define the “well-described” contract. Specify what each field means, which checks must pass, and what lineage and version history you expect to retain.

Standardize the feed into a governed Nexset that stays current as the source changes, with documentation-ready metadata and quality enforcement built in. Then publish it for reuse and deliver it through the integration styles your teams already use, so the next consumer reuses the same product instead of rebuilding the pipeline.

Request a demo to see how Nexla turns raw feeds into governed data products with automated metadata intelligence, eliminating rework, enabling reuse, and making your data GenAI-ready from day one.

A reusable data product—what Nexla calls a Nexset—is a dataset packaged with a published contract that includes metadata (schema, types, keys), semantics (field definitions), validation rules, lineage tracking, and access controls. Teams can consume it without rediscovering meanings, rebuilding quality checks, or tracing dependencies manually.

Raw feeds deliver rows without operational context. Missing field definitions, quality rules, and lineage force each team to rebuild the same interpretation and cleanup work. When sources change, teams manually trace impacts across pipelines, leading to duplicated fixes and inconsistent results.

Metadata-intelligent integration continuously discovers, infers, and maintains definitions, quality expectations, and traceability as feeds change. Schema, semantics, validation logic, and lineage travel with the data automatically, preventing the “data moves but meaning doesn’t” problem.

GenAI requires consistent, validated context. Metadata intelligence ensures AI retrieves data with verified schemas, unified definitions, quality controls, and lineage tracking. This helps prevent hallucinations caused by inconsistent, unvalidated, or untraceable data, one of the reasons Gartner forecasts that 30% of GenAI projects will be abandoned after proof of concept.

The six steps are: (1) Intake through managed connectors that handle changes, (2) Learn by inferring structure and detecting drift, (3) Shape into stable business entities, (4) Validate with automated contracts on every load, (5) Govern with lineage and versioning, and (6) Publish to multiple destinations.

Raw feeds deliver data without context—no field definitions, quality rules, or lineage. Data products add published contracts with metadata, semantics, validation, and governance, enabling reuse across teams without rebuilding pipelines or rediscovering meanings.

Data contracts define schemas, validation rules, and change policies upfront. When sources change, the contract makes drift detectable, flags breaking changes, and prevents silent failures. That reduces the manual tracing and duplicated fixes behind the maintenance work that 55% of respondents in dbt Labs’ State of Analytics Engineering report spending their time on.

